{

"cells": [

{

"cell_type": "markdown",

"metadata": {},

"source": [

"# Validating and Importing User-Item-Interaction Data \n",

"\n",

"In this notebook, you will choose a dataset and prepare it for use with Amazon Personalize.\n",

"\n",

"1. [How to Use the Notebook](#usenotebook)\n",

"1. [Introduction](#intro)\n",

"1. [Choose a Dataset or Data Source](#source)\n",

"1. [Configure an S3 bucket and an IAM role](#bucket_role)\n",

"1. [Create dataset group](#group_dataset)\n",

"1. [Create the Interactions Schema](#interact_schema)\n",

"1. [Create the Items Schema](#items_schema)\n",

"1. [Create the Users Schema](#users_schema)\n",

"1. [Import the Interactions Data](#import_interactions)\n",

"1. [Import the Items Metadata](#import_items)\n",

"1. [Import the User Metadata](#import_users)\n",

"1. [Storing Useful Variables](#vars)\n",

"\n",

"## How to Use the Notebook \n",

"\n",

"The code is broken up into cells like the one below. There's a triangular Run button at the top of this page that you can click to execute each cell and move onto the next, or you can press `Shift` + `Enter` while in the cell to execute it and move onto the next one.\n",

"\n",

"As a cell is executing you'll notice a line to the side showcase an `*` while the cell is running or it will update to a number to indicate the last cell that completed executing after it has finished exectuting all the code within a cell.\n",

"\n",

"Simply follow the instructions below and execute the cells to get started with Amazon Personalize using case optimized recommenders.\n",

"\n",

"\n",

"## Introduction \n",

"[Back to top](#top)\n",

"\n",

"In Amazon Personalize, you start by creating a dataset group, which is a container for Amazon Personalize components. Your dataset group can be one of the following:\n",

"\n",

"A Domain dataset group, where you create preconfigured resources for different business domains and use cases, such as getting recommendations for similar videos (VIDEO_ON_DEMAND domain) or best selling items (ECOMMERCE domain). You choose your business domain, import your data, and create Recommenders. You use Recommenders in your application to get recommendations.\n",

"\n",

"Use a [Domain dataset group](https://docs.aws.amazon.com/personalize/latest/dg/domain-dataset-groups.html) if you have a video on demand or e-commerce application and want Amazon Personalize to find the best configurations for your use cases. If you start with a Domain dataset group, you can also add custom resources such as solutions with solution versions trained with recipes for custom use cases.\n",

"\n",

"A [Custom dataset group](https://docs.aws.amazon.com/personalize/latest/dg/custom-dataset-groups.html), where you create configurable resources for custom use cases and batch recommendation workflows. You choose a recipe, train a solution version (model), and deploy the solution version with a campaign. You use a campaign in your application to get recommendations.\n",

"\n",

"Use a Custom dataset group if you don't have a video on demand or e-commerce application or want to configure and manage only custom resources, or want to get recommendations in a batch workflow. If you start with a Custom dataset group, you can't associate it with a domain later. Instead, create a new Domain dataset group.\n",

"\n",

"You can create and manage Domain dataset groups and Custom dataset groups with the AWS console, the AWS Command Line Interface (AWS CLI), or programmatically with the AWS SDKs.\n",

"\n",

"## Define your Use Case \n",

"[Back to top](#top)\n",

"\n",

"There are a few guidelines for scoping a problem suitable for Personalize. We recommend the values below as a starting point, although the [official limits](https://docs.aws.amazon.com/personalize/latest/dg/limits.html) lie a little lower.\n",

"\n",

"* Authenticated users\n",

"* At least 50 unique users\n",

"* At least 100 unique items\n",

"* At least 2 dozen interactions for each user \n",

"\n",

"Most of the time this is easily attainable, and if you are low in one category, you can often make up for it by having a larger number in another category.\n",

"\n",

"The user-item-iteraction data is key for getting started with the service. This means we need to look for use cases that generate that kind of data, a few common examples are:\n",

"\n",

"1. Video-on-demand applications\n",

"1. E-commerce platforms\n",

"\n",

"Defining your use-case will inform what data and what type of data you need.\n",

"\n",

"In this example we are going to be creating:\n",

"\n",

"1. Amazon Personalize ECOMMERCE Domain recommender for the [\"Frequently bought together\"](https://docs.aws.amazon.com/personalize/latest/dg/ECOMMERCE-use-cases.html#frequently-bought-together-use-case) use case.\n",

"1. Amazon Personalize ECOMMERCE Domain recommender for the [\"Recommended for you\"](https://docs.aws.amazon.com/personalize/latest/dg/ECOMMERCE-use-cases.html#recommended-for-you-use-case) use case.\n",

"1. Amazon Personalize Custom Campaign for a personalized ranked list of items, for instance shelf/rail/carousel based on some information (category, colour, style etc...) \n",

"\n",

"All of these will be created within the same dataset group and with the same input data.\n",

"\n",

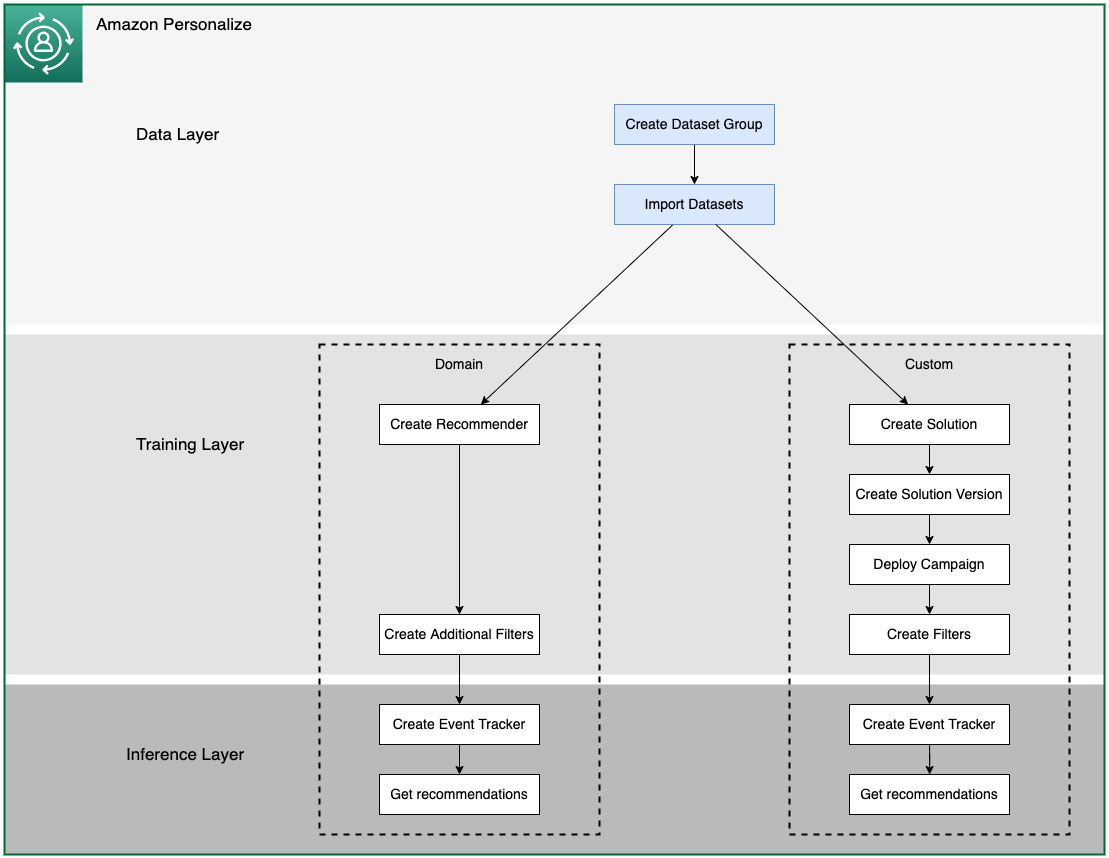

"The diagram bellow shows an overview of what we will be building in this wokshop.\n",

"\n",

"\n",

"\n",

"In this notebook we will be working on the Data Layer, Creatig a Dataset Group and importing the Datasets. \n",

"\n",

"\n",

"## Choose a Dataset or Data Source \n",

"[Back to top](#top)\n",

"\n",

"Regardless of the use case, the algorithms all share a base of learning on user-item-interaction data which is defined by 3 core attributes:\n",

"\n",

"1. **UserID** - The user who interacted\n",

"1. **ItemID** - The item the user interacted with\n",

"1. **Timestamp** - The time at which the interaction occurred\n",

"\n",

"Generally speaking your data will not arrive in a perfect form for Personalize, and will take some modification to be structured correctly. This notebook looks to guide you through all of that. \n",

"\n",

"Amazon Personalize has certain required fieds and reserved keywords, and you need to format your data to meet these requirements. \n",

"\n",

"Correctly formated data will allow you to work with filters as well as supporting the [Recommended for you](https://docs.aws.amazon.com/personalize/latest/dg/ECOMMERCE-use-cases.html#recommended-for-you-use-case) Domain Recommender, and complying with the [ECOMMERCE domain dataset and schema requirements](https://docs.aws.amazon.com/personalize/latest/dg/ECOMMERCE-datasets-and-schemas.html#ECOMMERCE-dataset-requirements)."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Open and Explore the Simulated Retail Interactions Dataset\n",

"\n",

"For this example we are using a synthetic datace generated when you deployed your working environment via CloudFormation.\n",

"\n",

"Python ships with a broad collection of libraries and we need to import those as well as the ones installed to help us like [boto3](https://aws.amazon.com/sdk-for-python/) (AWS SDK for python) and [Pandas](https://pandas.pydata.org/)/[Numpy](https://numpy.org/) which are core data science tools."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# Import Dependencies\n",

"\n",

"import boto3\n",

"import json\n",

"import pandas as pd\n",

"import seaborn as sns\n",

"import matplotlib.pyplot as plt\n",

"import time\n",

"import csv\n",

"import yaml\n",

"\n",

"%matplotlib inline\n",

"\n",

"# Setup the Amazon Personalzie client\n",

"personalize = boto3.client('personalize')"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# We will upload our training data from these files:\n",

"raw_items_filename = \"../../automation/ml_ops/domain/Retail/data/Items/items.csv\" # Do Not Change\n",

"raw_users_filename = \"../../automation/ml_ops/domain/Retail/data/Users/users.csv\" # Do Not Change\n",

"raw_interactions_filename = \"../../automation/ml_ops/domain/Retail/data/Interactions/interactions.csv\" # Do Not Change\n",

"items_filename = \"items.csv\" # Do Not Change\n",

"users_filename = \"users.csv\" # Do Not Change\n",

"interactions_filename = \"interactions.csv\" # Do Not Change"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"First let us see a few lines of the raw CSV data:\n",

"\n",

"- An ITEM_ID column of the item interacted with\n",

"- A USER_ID column of the user who interacted\n",

"- An EVENT_TYPE column which can be used to train different Personalize campaigns and also to filter on recommendations.\n",

"- The custom DISCOUNT column which is a contextual metadata field, that Personalize reranking and user recommendation campaigns can take into account to guess on the best next product.\n",

"- A TIMESTAMP of when the interaction happened"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"interactions_df = pd.read_csv(raw_interactions_filename)\n",

"interactions_df.head()"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Chart the counts of each `EVENT_TYPE` generated for the interactions dataset. We're simulating a site where visitors heavily view/browse products and to a lesser degree add products to their cart and checkout."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"categorical_attributes = interactions_df.select_dtypes(include = ['object'])\n",

"\n",

"plt.figure(figsize=(16,3))\n",

"chart = sns.countplot(data = categorical_attributes, x = 'EVENT_TYPE')\n",

"plt.xticks(rotation=90, horizontalalignment='right')\n",

"plt.show()"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Explore the Users Dataset"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"users_df = pd.read_csv(raw_users_filename)\n",

"pd.set_option('display.max_rows', 5)\n",

"\n",

"users_df.head()"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We can also leverage some syntectic user metadata to get some additional information about our users. This file was also generated when you deployed your working environment."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"user_metadata_file_name = '../../automation/ml_ops/domain/Retail/metadata/Users/users.json'\n",

"zipped_file_name = user_metadata_file_name+'.gz'\n",

"\n",

"# this will delete the zipped file. -k is not supported with the current version of gzip on this instance.\n",

"!gzip -d $zipped_file_name\n",

"\n",

"user_metadata_df = pd.read_json (user_metadata_file_name)\n",

"user_metadata_df"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# zipping the metadata file\n",

"!gzip $user_metadata_file_name"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Explore the Items Dataset\n",

"\n",

"Let us look at the items dataset."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"products_df = pd.read_csv(raw_items_filename)\n",

"pd.set_option('display.max_rows', 5)\n",

"\n",

"products_df.head()"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We are going to load some additional metadata about each item that will help make the recommended items more readable in this workshop. This is data you'd typically find in an item catalog system/database."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# load the item meta data\n",

"item_metadata_file_name = '../../automation/ml_ops/domain/Retail/metadata/Items/products.yaml'\n",

"with open(item_metadata_file_name) as f:\n",

" item_metadata_df = pd.json_normalize(yaml.load(f, Loader=yaml.FullLoader))[['id', 'name', 'category', 'style', 'featured']]\n",

" \n",

"display (item_metadata_df)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"This function will be used to lookup the name for a product given its ID."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def get_item_name_from_id ( item_id ):\n",

" item_name = item_metadata_df [item_metadata_df ['id'] == item_id]['name'].values[0]\n",

" return item_name"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Let's test the function above by adding an `ITEM_NAME` column to a dataframe that is populated with the name of each product."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"test = products_df.copy()\n",

"\n",

"test['ITEM_NAME'] = test.apply(\n",

" lambda row:\n",

" get_item_name_from_id(row['ITEM_ID'] ), axis=1\n",

" )\n",

"display(test.head())"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Note the `ITEM_NAME` column has been added to the above data frame. We'll use this function later."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Configure an S3 bucket and an IAM role \n",

"[Back to top](#top)\n",

"\n",

"So far, we have downloaded, manipulated, and saved the data onto the Amazon EBS instance attached to instance running this Jupyter notebook. \n",

"\n",

"By default, the Personalize service does not have permission to acccess the data we uploaded into the S3 bucket in our account. In order to grant access to the Personalize service to read our CSVs, we need to set a Bucket Policy and create an IAM role that the Amazon Personalize service will assume. Let's set all of that up.\n",

"\n",

"Use the metadata stored on the instance underlying this Amazon SageMaker notebook, to determine the region it is operating in. If you are using a Jupyter notebook outside of Amazon SageMaker, simply define the region as a string below. The Amazon S3 bucket needs to be in the same region as the Amazon Personalize resources we have been creating so far."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"with open('/opt/ml/metadata/resource-metadata.json') as notebook_info:\n",

" data = json.load(notebook_info)\n",

" resource_arn = data['ResourceArn']\n",

" region = resource_arn.split(':')[3]\n",

"print('region:', region)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Amazon S3 bucket names are globally unique. To create a unique bucket name, the code below will append the string `personalizepocvod` to your AWS account number. Then it creates a bucket with this name in the region discovered in the previous cell."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"s3 = boto3.client('s3')\n",

"account_id = boto3.client('sts').get_caller_identity().get('Account')\n",

"bucket_name = account_id + \"-\" + region + \"-\" + \"personalize-immersion-day-retail\"\n",

"print('bucket_name:', bucket_name)\n",

"try: \n",

" if region == \"us-east-1\":\n",

" s3.create_bucket(Bucket=bucket_name)\n",

" else:\n",

" s3.create_bucket(\n",

" Bucket=bucket_name,\n",

" CreateBucketConfiguration={'LocationConstraint': region}\n",

" )\n",

"except s3.exceptions.BucketAlreadyOwnedByYou:\n",

" print(\"Bucket already exists. Using bucket\", bucket_name)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Set the S3 bucket policy\n",

"Amazon Personalize needs to be able to read the contents of your S3 bucket. So add a bucket policy which allows that."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"s3 = boto3.client(\"s3\")\n",

"\n",

"policy = {\n",

" \"Version\": \"2012-10-17\",\n",

" \"Id\": \"PersonalizeS3BucketAccessPolicy\",\n",

" \"Statement\": [\n",

" {\n",

" \"Sid\": \"PersonalizeS3BucketAccessPolicy\",\n",

" \"Effect\": \"Allow\",\n",

" \"Principal\": {\n",

" \"Service\": \"personalize.amazonaws.com\"\n",

" },\n",

" \"Action\": [\n",

" \"s3:GetObject\",\n",

" \"s3:ListBucket\",\n",

" \"s3:PutObject\"\n",

" ],\n",

" \"Resource\": [\n",

" \"arn:aws:s3:::{}\".format(bucket_name),\n",

" \"arn:aws:s3:::{}/*\".format(bucket_name)\n",

" ]\n",

" }\n",

" ]\n",

"}\n",

"\n",

"s3.put_bucket_policy(Bucket=bucket_name, Policy=json.dumps(policy));"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Create an IAM role\n",

"\n",

"Amazon Personalize needs the ability to assume roles in AWS in order to have the permissions to execute certain tasks. Let's create an IAM role and attach the required policies to it. The code below attaches very permissive policies; please use more restrictive policies for any production application."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"iam = boto3.client(\"iam\")\n",

"\n",

"role_name = account_id+\"-PersonalizeS3-Immersion-Day\"\n",

"assume_role_policy_document = {\n",

" \"Version\": \"2012-10-17\",\n",

" \"Statement\": [\n",

" {\n",

" \"Effect\": \"Allow\",\n",

" \"Principal\": {\n",

" \"Service\": \"personalize.amazonaws.com\"\n",

" },\n",

" \"Action\": \"sts:AssumeRole\"\n",

" }\n",

" ]\n",

"}\n",

"\n",

"try:\n",

" create_role_response = iam.create_role(\n",

" RoleName = role_name,\n",

" AssumeRolePolicyDocument = json.dumps(assume_role_policy_document)\n",

" );\n",

" \n",

"except iam.exceptions.EntityAlreadyExistsException as e:\n",

" print('Warning: role already exists:', e)\n",

" create_role_response = iam.get_role(\n",

" RoleName = role_name\n",

" );\n",

"\n",

"role_arn = create_role_response[\"Role\"][\"Arn\"]\n",

" \n",

"print('IAM Role: {}'.format(role_arn))\n",

" \n",

"attach_response = iam.attach_role_policy(\n",

" RoleName = role_name,\n",

" PolicyArn = \"arn:aws:iam::aws:policy/AmazonS3FullAccess\"\n",

");\n",

"\n",

"role_arn = create_role_response[\"Role\"][\"Arn\"]\n",

"\n",

"# Pause to allow role to be fully consistent\n",

"time.sleep(30)\n",

"print('Done.')"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Upload data to S3\n",

"\n",

"Now that your Amazon S3 bucket has been created, upload the CSV file of our user-item-interaction data. "

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"boto3.Session().resource('s3').Bucket(bucket_name).Object(users_filename).upload_file(raw_users_filename)\n",

"boto3.Session().resource('s3').Bucket(bucket_name).Object(items_filename).upload_file(raw_items_filename)\n",

"boto3.Session().resource('s3').Bucket(bucket_name).Object(interactions_filename).upload_file(raw_interactions_filename)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Create dataset group \n",

"[Back to top](#top)\n",

"\n",

"The highest level of isolation and abstraction with Amazon Personalize is a *dataset group*. Information stored within one of these dataset groups has no impact on any other dataset group or models created from one - they are completely isolated. This allows you to run many experiments and is part of how we keep your models private and fully trained only on your data. \n",

"\n",

"Before importing the data prepared earlier, there needs to be a dataset group and a dataset added to it that handles the interactions.\n",

"\n",

"Dataset groups can house the following types of information:\n",

"\n",

"* User-item-interactions\n",

"* Event streams (real-time interactions)\n",

"* User metadata\n",

"* Item metadata\n",

"\n",

"We need to create the dataset group that will contain our three datasets."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Create Dataset Group\n",

"The following cell will create a new dataset group with the name personalize-immersion-day-retail\n"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"create_dataset_group_response = personalize.create_dataset_group(\n",

" name = 'personalize-immersion-day-retail',\n",

" domain='ECOMMERCE'\n",

")\n",

"dataset_group_arn = create_dataset_group_response['datasetGroupArn']\n",

"print(json.dumps(create_dataset_group_response, indent=2))\n",

"\n",

"print(f'DatasetGroupArn = {dataset_group_arn}')"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Wait for Dataset Group to Have ACTIVE Status"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Before we can use the dataset group, it must be active. This can take a minute or two. Execute the cell below and wait for it to show the ACTIVE status. It checks the status of the dataset group every 60 seconds, up to a maximum of 3 hours."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"status = None\n",

"max_time = time.time() + 3*60*60 # 3 hours\n",

"while time.time() < max_time:\n",

" describe_dataset_group_response = personalize.describe_dataset_group(\n",

" datasetGroupArn = dataset_group_arn\n",

" )\n",

" status = describe_dataset_group_response[\"datasetGroup\"][\"status\"]\n",

" print(\"DatasetGroup: {}\".format(status))\n",

" \n",

" if status == \"ACTIVE\" or status == \"CREATE FAILED\":\n",

" break\n",

" \n",

" time.sleep(15)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Now that you have a dataset group, you can create a dataset for the interaction data."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Create the Interactions Schema \n",

"[Back to top](#top)\n",

"\n",

"Now that we've loaded and prepared our three datasets we'll need to configure the Amazon Personalize service to understand our data so that it can be used to train models for generating recommendations. Amazon Personalize requires a schema for each dataset so it can map the columns in our CSVs to fields for model training. Each schema is declared in JSON using the [Apache Avro](https://avro.apache.org/) format. \n",

"\n",

"First, define a schema to tell Amazon Personalize what type of dataset you are uploading. There are several reserved and mandatory keywords required in the schema, based on the type of dataset. More detailed information can be found in the [documentation](https://docs.aws.amazon.com/personalize/latest/dg/how-it-works-dataset-schema.html).\n",

"\n",

"Here, you will create a schema for interactions data, which requires the `USER_ID`, `ITEM_ID`, and `TIMESTAMP` fields. These must be defined in the same order in the schema as they appear in the dataset."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Interactions Dataset Schema\n",

"\n",

"The interactions dataset has three required columns: `ITEM_ID`, `USER_ID`, and `TIMESTAMP`. The `TIMESTAMP` represents when the user interated with an item and must be expressed in Unix timestamp format (seconds). For this dataset we also have an `EVENT_TYPE` column that includes multiple common eCommerce event types (`View`, `AddToCart`, `Purchase`, and so on).\n",

"\n",

"The interactions dataset supports metadata columns. Interaction metadata columns are a way to provide contextual details that are specific to an interaction such as the user's current device type (phone, tablet, desktop, set-top box, etc), the user's current location (city, region, metro code, etc), current weather conditions, and so on. For this dataset, we have a `DISCOUNT` column that indicates whether the user is interacting with an item that is currently discounted (`Yes`/`No`). It's being used to learn a user's affinity for items that are on sale or not."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"interactions_schema = {\n",

" \"type\": \"record\",\n",

" \"name\": \"Interactions\",\n",

" \"namespace\": \"com.amazonaws.personalize.schema\",\n",

" \"fields\": [\n",

" {\n",

" \"name\": \"ITEM_ID\",\n",

" \"type\": \"string\"\n",

" },\n",

" {\n",

" \"name\": \"USER_ID\",\n",

" \"type\": \"string\"\n",

" },\n",

" {\n",

" \"name\": \"EVENT_TYPE\", # \"View\", \"Purchase\", etc.\n",

" \"type\": \"string\"\n",

" },\n",

" {\n",

" \"name\": \"TIMESTAMP\",\n",

" \"type\": \"long\"\n",

" },\n",

" {\n",

" \"name\": \"DISCOUNT\", # This is the contextual metadata - \"Yes\" or \"No\".\n",

" \"type\": \"string\",\n",

" \"categorical\": True,\n",

" },\n",

" ],\n",

" \"version\": \"1.0\"\n",

"}\n",

"\n",

"try:\n",

" create_schema_response = personalize.create_schema(\n",

" name = \"personalize-immersion-day-retail-schema-interactions\",\n",

" schema = json.dumps(interactions_schema),\n",

" domain='ECOMMERCE'\n",

" )\n",

" print(json.dumps(create_schema_response, indent=2))\n",

" interactions_schema_arn = create_schema_response['schemaArn']\n",

"except personalize.exceptions.ResourceAlreadyExistsException:\n",

" print('You aready created this schema.')\n",

" schemas = personalize.list_schemas(maxResults=100)['schemas']\n",

" for schema_response in schemas:\n",

" if schema_response['name'] == \"personalize-immersion-day-retail-schema-interactions\":\n",

" interactions_schema_arn = schema_response['schemaArn']\n",

" print(f\"Using existing schema: {interactions_schema_arn}\")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Create the interactions dataset\n",

"\n",

"With a schema created, you can create a dataset within the dataset group. Note that this does not load the data yet, but creates a schema of what the data looks like. "

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"dataset_type = \"INTERACTIONS\"\n",

"create_dataset_response = personalize.create_dataset(\n",

" name = \"personalize-immersion-day-dataset-interactions\",\n",

" datasetType = dataset_type,\n",

" datasetGroupArn = dataset_group_arn,\n",

" schemaArn = interactions_schema_arn\n",

")\n",

"\n",

"interactions_dataset_arn = create_dataset_response['datasetArn']\n",

"print(json.dumps(create_dataset_response, indent=2))"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Create the Items schema\n",

"[Back to top](#top)\n",

"\n",

"First, define a schema to tell Amazon Personalize what type of dataset you are uploading. There are several reserved and mandatory keywords required in the schema, based on the type of dataset. More detailed information can be found in the [documentation](https://docs.aws.amazon.com/personalize/latest/dg/how-it-works-dataset-schema.html).\n",

"\n",

"The items dataset schema requires an `ITEM_ID` column and at least one metadata column. Up to 50 metadata columns can be added to the items dataset.\n",

"\n",

"For this dataset we have three metadata columns: `PRICE`, `CATEGORY_L1`, `CATEGORY_L2`, `PRODUCT_DESCRIPTION`, and `GENDER` (see schema definition in the cell below). \n",

"\n",

"We mapped the `category` and `style` fields from the items catalog to the `CATEGORY_L1` and `CATEGORY_L2` columns to indicate category levels. The `gender_affinity` field used to indicate Women's and Men's products (clothing, footwear, etc) has been mapped to the `GENDER` column in the schema. Note too that `CATEGORY_L1`, `CATEGORY_L2`, and `GENDER` are annotated as being categorical (`\"categorical\": true`). This tells Personalize to interpret the column value for each row as one or more categorical values where the `|` character can be used to separate values. For example, `value1|value2|value3`. The `PRODUCT_DESCRIPTION` column is annotated as being a text column (`\"textual\": true`). This tells Personalize that this column contains unstructured text. A natural language processing (NLP) model is used to extract features from the textual column to use as features in the model. Including a textual column in your items dataset can significantly enhance the relevancy of recommendations. Currently only one textual column can be included in the items dataset and the text must be in English. For more information, please refer to [the documentation](https://docs.aws.amazon.com/personalize/latest/dg/items-datasets.html#text-data)."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"items_schema = {\n",

" \"type\": \"record\",\n",

" \"name\": \"Items\",\n",

" \"namespace\": \"com.amazonaws.personalize.schema\",\n",

" \"fields\": [\n",

" {\n",

" \"name\": \"ITEM_ID\",\n",

" \"type\": \"string\"\n",

" },\n",

" {\n",

" \"name\": \"PRICE\",\n",

" \"type\": \"float\"\n",

" },\n",

" {\n",

" \"name\": \"CATEGORY_L1\",\n",

" \"type\": \"string\",\n",

" \"categorical\": True,\n",

" },\n",

" {\n",

" \"name\": \"CATEGORY_L2\",\n",

" \"type\": \"string\",\n",

" \"categorical\": True,\n",

" },\n",

" {\n",

" \"name\": \"PRODUCT_DESCRIPTION\",\n",

" \"type\": \"string\",\n",

" \"textual\": True\n",

" },\n",

" {\n",

" \"name\": \"GENDER\",\n",

" \"type\": \"string\",\n",

" \"categorical\": True,\n",

" },\n",

" ],\n",

" \"version\": \"1.0\"\n",

"}\n",

"\n",

"try:\n",

" create_schema_response = personalize.create_schema(\n",

" name = \"personalize-immersion-day-retail-schema-items\",\n",

" schema = json.dumps(items_schema),\n",

" domain='ECOMMERCE'\n",

" )\n",

" items_schema_arn = create_schema_response['schemaArn']\n",

" print(json.dumps(create_schema_response, indent=2))\n",

"except personalize.exceptions.ResourceAlreadyExistsException:\n",

" print('You aready created this schema.')\n",

" schemas = personalize.list_schemas(maxResults=100)['schemas']\n",

" for schema_response in schemas:\n",

" if schema_response['name'] == \"personalize-immersion-day-retail-schema-items\":\n",

" items_schema_arn = schema_response['schemaArn']\n",

" print(f\"Using existing schema: {items_schema_arn}\")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Create Items Dataset\n",

"With a schema created, you can create a dataset within the dataset group. Note that this does not load the data yet, but creates a schema of what the data looks like. "

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"dataset_type = \"ITEMS\"\n",

"create_dataset_response = personalize.create_dataset(\n",

" name = \"personalize-immersion-day-dataset-items\",\n",

" datasetType = dataset_type,\n",

" datasetGroupArn = dataset_group_arn,\n",

" schemaArn = items_schema_arn\n",

")\n",

"\n",

"items_dataset_arn = create_dataset_response['datasetArn']\n",

"print(json.dumps(create_dataset_response, indent=2))"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Create the Users schema\n",

"[Back to top](#top)\n",

"\n",

"First, define a schema to tell Amazon Personalize what type of dataset you are uploading. There are several reserved and mandatory keywords required in the schema, based on the type of dataset. More detailed information can be found in the [documentation](https://docs.aws.amazon.com/personalize/latest/dg/how-it-works-dataset-schema.html).\n",

"\n",

"Here, you will create a schema for user data, which requires the `USER_ID`, and an additonal metadata field. For this dataset we have metadata columns for `AGE` and `GENDER`. These must be defined in the same order in the schema as they appear in the dataset."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"users_schema = {\n",

" \"type\": \"record\",\n",

" \"name\": \"Users\",\n",

" \"namespace\": \"com.amazonaws.personalize.schema\",\n",

" \"fields\": [\n",

" {\n",

" \"name\": \"USER_ID\",\n",

" \"type\": \"string\"\n",

" },\n",

" {\n",

" \"name\": \"AGE\",\n",

" \"type\": \"int\"\n",

" },\n",

" {\n",

" \"name\": \"GENDER\",\n",

" \"type\": \"string\",\n",

" \"categorical\": True,\n",

" }\n",

" ],\n",

" \"version\": \"1.0\"\n",

"}\n",

"\n",

"try:\n",

" create_schema_response = personalize.create_schema(\n",

" name = \"personalize-immersion-day-retail-schema-users\",\n",

" schema = json.dumps(users_schema),\n",

" domain='ECOMMERCE'\n",

" )\n",

" print(json.dumps(create_schema_response, indent=2))\n",

" users_schema_arn = create_schema_response['schemaArn']\n",

"except personalize.exceptions.ResourceAlreadyExistsException:\n",

" print('You aready created this schema, seemingly')\n",

" schemas = personalize.list_schemas(maxResults=100)['schemas']\n",

" for schema_response in schemas:\n",

" if schema_response['name'] == \"personalize-immersion-day-retail-schema-users\":\n",

" users_schema_arn = schema_response['schemaArn']\n",

" print(f\"Using existing schema: {users_schema_arn}\")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Create Users Dataset"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"dataset_type = \"USERS\"\n",

"create_dataset_response = personalize.create_dataset(\n",

" name = \"personalize-immersion-day-dataset-users\",\n",

" datasetType = dataset_type,\n",

" datasetGroupArn = dataset_group_arn,\n",

" schemaArn = users_schema_arn\n",

")\n",

"\n",

"users_dataset_arn = create_dataset_response['datasetArn']\n",

"print(json.dumps(create_dataset_response, indent=2))"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Let's wait untill all the datasets have been created."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"%%time\n",

"\n",

"max_time = time.time() + 6*60*60 # 6 hours\n",

"while time.time() < max_time:\n",

" describe_dataset_response = personalize.describe_dataset(\n",

" datasetArn = interactions_dataset_arn\n",

" )\n",

" status = describe_dataset_response[\"dataset\"]['status']\n",

" print(\"Interactions Dataset: {}\".format(status))\n",

" \n",

" if status == \"ACTIVE\" or status == \"CREATE FAILED\":\n",

" break\n",

" \n",

" time.sleep(60)\n",

" \n",

"while time.time() < max_time:\n",

" describe_dataset_response = personalize.describe_dataset(\n",

" datasetArn = items_dataset_arn\n",

" )\n",

" status = describe_dataset_response[\"dataset\"]['status']\n",

" print(\"Items Dataset: {}\".format(status))\n",

" \n",

" if status == \"ACTIVE\" or status == \"CREATE FAILED\":\n",

" break\n",

" \n",

" time.sleep(60)\n",

" \n",

"while time.time() < max_time:\n",

" describe_dataset_response = personalize.describe_dataset(\n",

" datasetArn = users_dataset_arn\n",

" )\n",

" status = describe_dataset_response[\"dataset\"]['status']\n",

" print(\"Users Dataset: {}\".format(status))\n",

" \n",

" if status == \"ACTIVE\" or status == \"CREATE FAILED\":\n",

" break\n",

" \n",

" time.sleep(60)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Import the Interactions Data \n",

"[Back to top](#top)\n",

"\n",

"Earlier you created the dataset group and dataset to house your information, so now you will execute an import job that will load the interactions data from the S3 bucket into the Amazon Personalize dataset. "

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"interactions_create_dataset_import_job_response = personalize.create_dataset_import_job(\n",

" jobName = \"immersion-day-retail-dataset-interactions-import\",\n",

" datasetArn = interactions_dataset_arn,\n",

" dataSource = {\n",

" \"dataLocation\": \"s3://{}/{}\".format(bucket_name, interactions_filename)\n",

" },\n",

" roleArn = role_arn\n",

")\n",

"\n",

"interactions_dataset_import_job_arn = interactions_create_dataset_import_job_response['datasetImportJobArn']\n",

"print(json.dumps(interactions_create_dataset_import_job_response, indent=2))"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Import the Item Metadata \n",

"[Back to top](#top)\n",

"\n",

"Earlier you created the dataset group and dataset to house your information, now you will execute an import job that will load the item data from the S3 bucket into the Amazon Personalize dataset. "

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"items_create_dataset_import_job_response = personalize.create_dataset_import_job(\n",

" jobName = \"immersion-day-retail-dataset-items-import\",\n",

" datasetArn = items_dataset_arn,\n",

" dataSource = {\n",

" \"dataLocation\": \"s3://{}/{}\".format(bucket_name, items_filename)\n",

" },\n",

" roleArn = role_arn\n",

")\n",

"\n",

"items_dataset_import_job_arn = items_create_dataset_import_job_response['datasetImportJobArn']\n",

"print(json.dumps(items_create_dataset_import_job_response, indent=2))"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Import the User Metadata \n",

"[Back to top](#top)\n",

"\n",

"Earlier you created the dataset group and dataset to house your information, now you will execute an import job that will load the user data from the S3 bucket into the Amazon Personalize dataset. "

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"users_create_dataset_import_job_response = personalize.create_dataset_import_job(\n",

" jobName = \"immersion-day-retail-dataset-users-import\",\n",

" datasetArn = users_dataset_arn,\n",

" dataSource = {\n",

" \"dataLocation\": \"s3://{}/{}\".format(bucket_name, users_filename)\n",

" },\n",

" roleArn = role_arn\n",

")\n",

"\n",

"users_dataset_import_job_arn = users_create_dataset_import_job_response['datasetImportJobArn']\n",

"print(json.dumps(users_create_dataset_import_job_response, indent=2))"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Wait for Import Jobs to Complete\n",

"\n",

"Before we can use the dataset, the import job must be active. Execute the cell below and wait for it to show the ACTIVE status. It checks the status of the import job every minute, up to a maximum of 6 hours.\n",

"\n",

"It will take 10-15 minutes for the import jobs to complete. While you're waiting you can learn more about Datasets and Schemas in [the documentation](https://docs.aws.amazon.com/personalize/latest/dg/how-it-works-dataset-schema.html).\n",

"\n",

"We will wait for all three jobs to finish."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"%%time\n",

"\n",

"import_job_arns = [ items_dataset_import_job_arn, users_dataset_import_job_arn, interactions_dataset_import_job_arn ]\n",

"\n",

"max_time = time.time() + 3*60*60 # 3 hours\n",

"while time.time() < max_time:\n",

" for job_arn in reversed(import_job_arns):\n",

" import_job_response = personalize.describe_dataset_import_job(\n",

" datasetImportJobArn = job_arn\n",

" )\n",

" status = import_job_response[\"datasetImportJob\"]['status']\n",

"\n",

" if status == \"ACTIVE\":\n",

" print(f'Import job {job_arn} successfully completed')\n",

" import_job_arns.remove(job_arn)\n",

" elif status == \"CREATE FAILED\":\n",

" print(f'Import job {job_arn} failed')\n",

" if import_job_response.get('failureReason'):\n",

" print(' Reason: ' + import_job_response['failureReason'])\n",

" import_job_arns.remove(job_arn)\n",

"\n",

" if len(import_job_arns) > 0:\n",

" print('At least one dataset import job still in progress')\n",

" time.sleep(60)\n",

" else:\n",

" print(\"All import jobs have ended\")\n",

" break"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"With all imports now complete you can start training recommenders and solutions. Run the cell below before moving on to store a few values for usage in the next notebooks. After completing that cell open notebook `02_Training_Layer.ipynb` to continue."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Storing Useful Variables \n",

"[Back to top](#top)\n",

"\n",

"Before exiting this notebook, run the following cells to save the version ARNs for use in the next notebook."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"%store items_dataset_arn\n",

"%store interactions_dataset_arn\n",

"%store users_dataset_arn\n",

"%store dataset_group_arn\n",

"%store bucket_name\n",

"%store role_arn\n",

"%store role_name\n",

"%store region\n",

"%store item_metadata_df\n",

"%store user_metadata_df\n",

"%store products_df"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": []

}

],

"metadata": {

"kernelspec": {

"display_name": "conda_python3",

"language": "python",

"name": "conda_python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.8.12"

}

},

"nbformat": 4,

"nbformat_minor": 4

}