# ml-parameter-provider

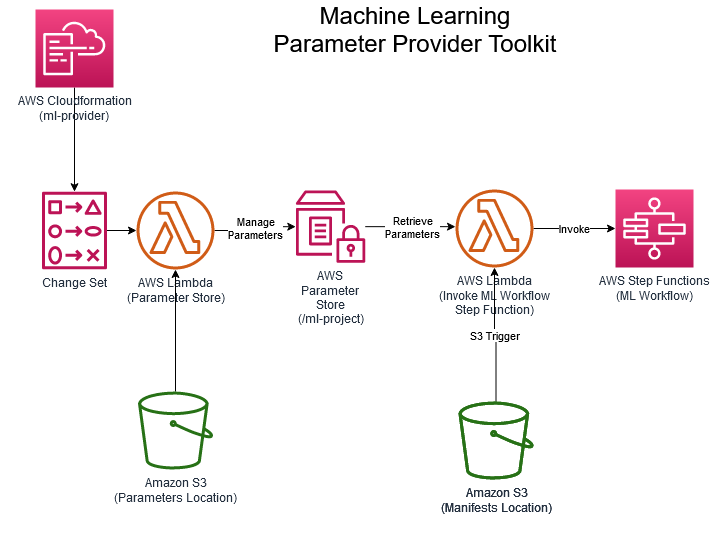

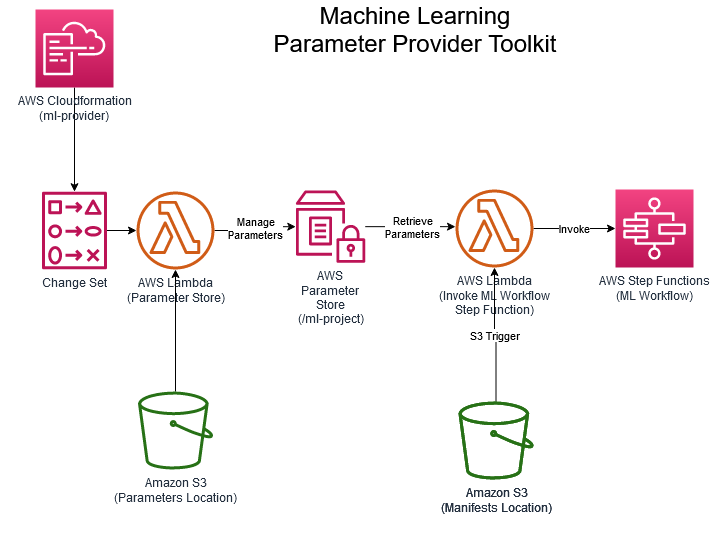

> A Parameter Provider Toolkit for ML Workloads as a Cloudformation stack.

[](contributing.md)

[](https://s3-us-west-2.amazonaws.com/codefactory-us-west-2-prod-default-build-badges/passing.svg)

Current version: **1.0.0**

Lead Maintainer: [Anil Sener](mailto:senera@amazon.com)

## 📋 Table of content

- [Installation](#-install)

- [Metrics](#-metrics)

- [Pre-requisites](#-pre-requisites)

- [Description](#-description)

- [Usage](#-usage)

- [Screenshots](#-screenshots)

- [See also](#-see-also)

## 🚀 Install

In order to add this block, head to your project directory in your terminal and follow the steps in [Pre-requisites](#-pre-requisites) and [Usage](#-usage) sections below.

> ⚠️ You need to have the [AWS SAM CLI](https://docs.aws.amazon.com/serverless-application-model/latest/developerguide/serverless-sam-cli-install.html) installed on your deployment machine before installing this package.

## 📊 Metrics

The below metrics displays approximate values associated with deploying and using this block.

Metric | Value

------ | ------

**Type** | Architecture

**Installation Time** | Less than 1 minute

**Audience** | Developers, Solutions Architects

**Requirements** | [aws-sam](https://docs.aws.amazon.com/serverless-application-model/latest/developerguide/serverless-sam-cli-install.html),[jq](https://stedolan.github.io/jq/download/)

## 🎒 Pre-requisites

- Make sure that you have installed the [AWS SAM CLI](https://docs.aws.amazon.com/serverless-application-model/latest/developerguide/serverless-sam-cli-install.html) on your deployment machine.

- Make sure that you installed [jq](https://stedolan.github.io/jq/download/) on your deployment machine.

- Make sure you have Python 3.8 installed on your deployment machine.

- Make sure that you have uploaded the [2 required parameter JSON files on S3](#-parameter-json-files) (e.g `ml-parameters.json` and `hyperparameters.json`) before the SAM application deployment.

- Make sure that you have created a ML Workflow State Machine using AWS Step Functions which will be invoked by this stack with ML parameters as an input.

### 📘Parameter JSON files

Below is a list of JSON files that you'd be providing to this stack in order to customize its behavior. Some of them are required before the actual deployment (required at deployment-time), and some are required at run-time.

### Deployment-time dependencies

1. You will find a `template` of `ml-parameters.json` file under `/examples` folder. This file contains all of the customizable parameters required to describe your ML workflow, except hyper-parameters which are described in their own file (see below).

See a template of a ml-parameters.json file

```json

{

"tuningJobName": "survival-tuning",

"tuningStrategy": "Bayesian",

"algorithmARN": "arn:aws:sagemaker:::algorithm/h2o-gbm-algorithm",

"maxParallelTrainingJobs": 10,

"maxNumberOfTrainingJobs": 10,

"inputContentType": "text/csv",

"trainingJobDefinitionName": "training-job-def-0",

"enableManagedSpotTraining": true,

"spotTrainingCheckpointS3Uri": "s3:///model-training-checkpoint/",

"trainingInstanceType": "ml.c5.2xlarge",

"trainingInstanceVolumeSizeInGB": 30,

"trainingJobEarlyStoppingType": "Auto",

"endpointName": "survival-endpoint",

"model": {

"artifactType": "MOJO",

"artifactsS3OutputPath": "s3:///model-artifacts/",

"name": "survival-model",

"trainingSecurityGroupIds": [

""

],

"trainingSubnets": [

"",

"",

""

],

"hosting": {

"initialInstanceCount": "1",

"instanceType": "ml.c5.2xlarge",

"securityGroupIds": [

""

],

"subnets": [

"",

"",

""

]

}

},

"autoscalingMinCapacity": 1,

"autoscalingMaxCapacity": 4,

"targetTrackingScalingPolicyConfiguration": {

"DisableScaleIn": true,

"PredefinedMetricSpecification": {

"PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"

},

"ScaleInCooldown": 300,

"ScaleOutCooldown": 60,

"TargetValue": 5000

}

}

```

See a sample of a hyperparameters.json file

```json

{

"parameterRanges": {

"IntegerParameterRanges": [

{

"Name": "ntrees",

"MinValue": "10",

"MaxValue": "100",

"ScalingType": "Linear"

},

{

"Name": "min_rows",

"MinValue": "10",

"MaxValue": "30",

"ScalingType": "Linear"

},

{

"Name": "max_depth",

"MinValue": "3",

"MaxValue": "7",

"ScalingType": "Linear"

},

{

"Name": "score_tree_interval",

"MinValue": "5",

"MaxValue": "10",

"ScalingType": "Linear"

}

],

"ContinuousParameterRanges": [

{

"Name": "learn_rate",

"MinValue": "0.001",

"MaxValue": "0.01",

"ScalingType": "Logarithmic"

},

{

"Name": "sample_rate",

"MinValue": "0.6",

"MaxValue": "1.0",

"ScalingType": "Auto"

},

{

"Name": "col_sample_rate",

"MinValue": "0.7",

"MaxValue": "0.9",

"ScalingType": "Auto"

}

],

"CategoricalParameterRanges": [

]

},

"staticHyperParameters":{

"stopping_metric":"auc",

"training": "{'classification': 'true', 'target': 'Survived', 'distribution':'bernoulli','ignored_columns':'PassengerId,Name,Cabin,Ticket','categorical_columns':'Sex,Embarked,Survived,Pclass,Embarked'}",

"balance_classes":"True",

"seed": "1",

"stopping_rounds":"10",

"stopping_tolerance":"1e-9"

}

}

```

See a sample of a manifest file

```json

{

"channels": [

{

"channelName": "training",

"s3DataSource": {

"AttributeNames": [],

"S3DataDistributionType": "FullyReplicated",

"S3DataType": "S3Prefix",

"S3Uri": "s3:///titanic/training/train.csv"

}

},

{

"channelName": "validation",

"s3DataSource": {

"AttributeNames": [],

"S3DataDistributionType": "FullyReplicated",

"S3DataType": "S3Prefix",

"S3Uri": "s3:///titanic/validation/validation.csv"

}

}

]

}

```

## 🛠 Usage

1. Deploy the package via the SAM CLI providing the settings for deployment.

```sh

sam deploy --guided

```

2. Observe the deployed `ml-parameter-provider` Serverless Application in the AWS Console.

3. Navigate to Amazon S3 console, create a manifest.json file and upload to the S3 location previously specified by `ManifestS3BucketName` and `ManifestS3BucketKeyPrefix` options during the toolkit deployment.

4. A new execution of the state machine specified in `TargetStateMachineArn` deployment option will be triggered and the deployment will start.

### SAM CLI Deployment Options

The deployment options that you can pass to the ML Parameter Provider toolkit are described below.

Name | Default value | Description

-------------- | ------------- | -----------

**Stack Name** | sam-app | Name of the stack/serverless application for example `ml-parameter-provider`.

**AWS Region** | None | AWS Region to deploy the infrastructure for ML Parameter Provider Serverless Application.

**Parameter Environment** | `development` | Environment to tag the created resources.

**ParameterStorePath** | `/ml-project` | Parent path in AWS Systems Manager Parameter Store to store all parameters imported by the toolkit. It is recommended to set this to a meaningful ML project/domain name.

**TargetStateMachineArn** | None | Amazon Resource Name of AWS Step Functions State Machine which will be executed using parameters in parameter store.

**ManifestS3BucketName** | None | Please set the S3 bucket name where manifest JSON files will be uploaded.

**ManifestS3BucketKeyPrefix** | `manifests/` | Please set the S3 key prefix where manifest JSON files will be uploaded.

**HyperparametersS3BucketName** | None | Please set the S3 bucket name where hyperparameter JSON file to be read from during the deployment.

**HyperparametersS3Key** | `hyperparameters.json` | Please set the S3 key prefix where hyperparameters JSON files will be uploaded.

**ParametersS3BucketName** | None | Please set the S3 bucket name where parameters JSON file to be read from during the deployment.

**ParametersS3Key** | `ml-parameters.json` | Please set the S3 key prefix where parameters JSON files will be uploaded.

## 📷 Screenshots

Below are different screenshots displaying sexecution of ML Workflow AWS Step Function and Model Tuning looks like in the AWS Console.

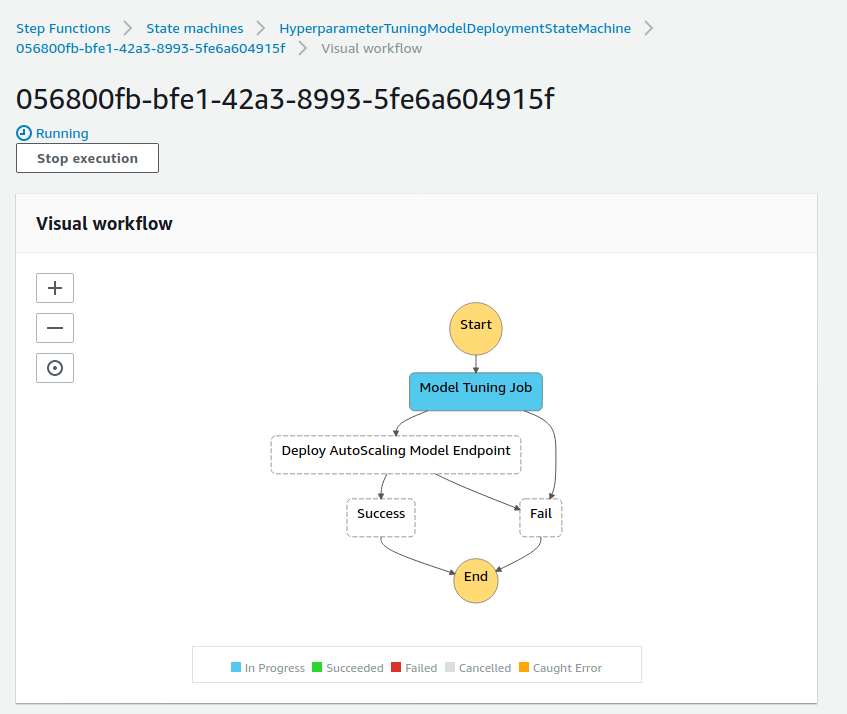

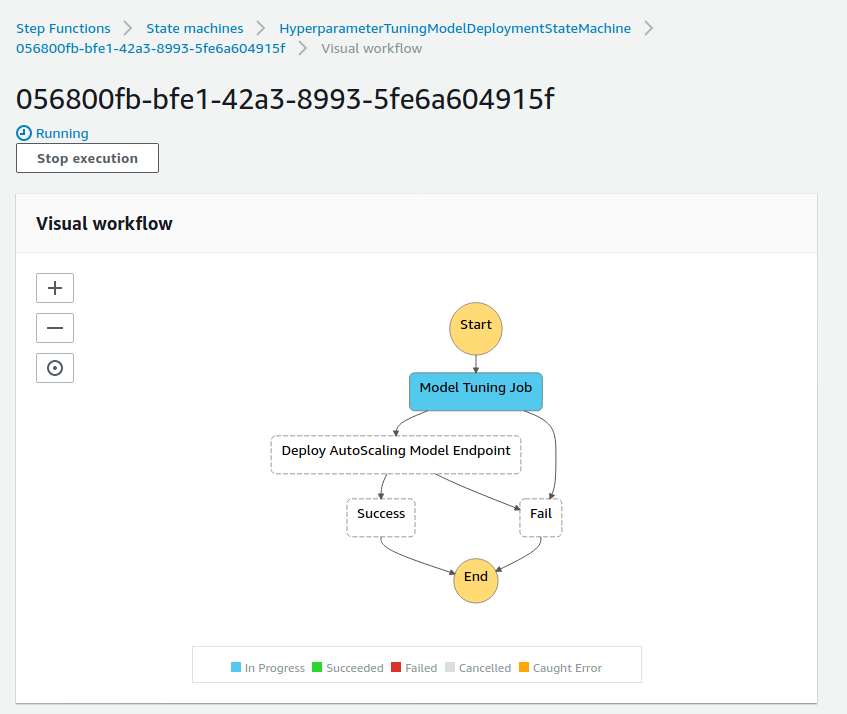

### The state machine during execution

You can see below a current execution of the `HyperparameterTuningModelDeploymentStateMachine` in the AWS Step Functions console.

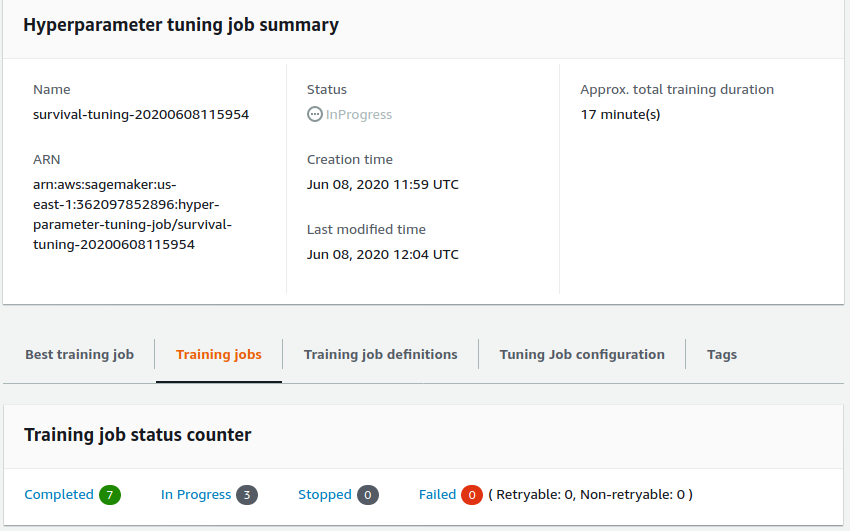

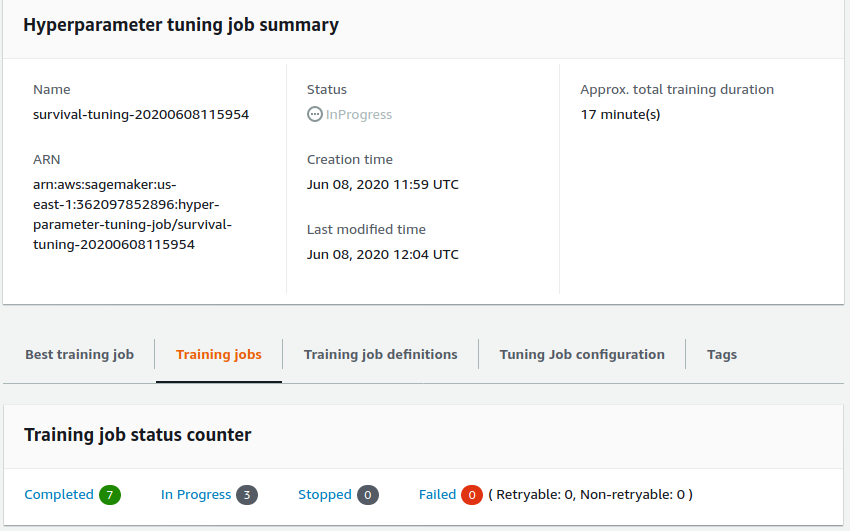

### The Sagemaker Hyperparameter Tuning Job during execution

Below is a screenshot of training jobs with `InProgress` status created by Sagemaker Hyperparameter Tuning Job.

## 👀 See also

- The [AWS Sagemaker](https://docs.aws.amazon.com/sagemaker/latest/dg/whatis.html) official documentation.

- The [AWS Steps Function](https://docs.aws.amazon.com/step-functions/latest/dg/welcome.html) official documentation.

- The [Sagemaker Model Tuner with Endpoint Deployment](https://github.com/aws-samples/amazon-sagemaker-h2o-blog/tree/master/sagemaker-model-tuner-with-endpoint-deployment) project.

- The [Sagemaker Model Tuner](https://github.com/aws-samples/amazon-sagemaker-h2o-blog/tree/master/sagemaker-model-tuner) project.

- The [Sagemaker Model Deployer](https://github.com/aws-samples/amazon-sagemaker-h2o-blog/tree/master/sagemaker-model-deployer) project.

![]()