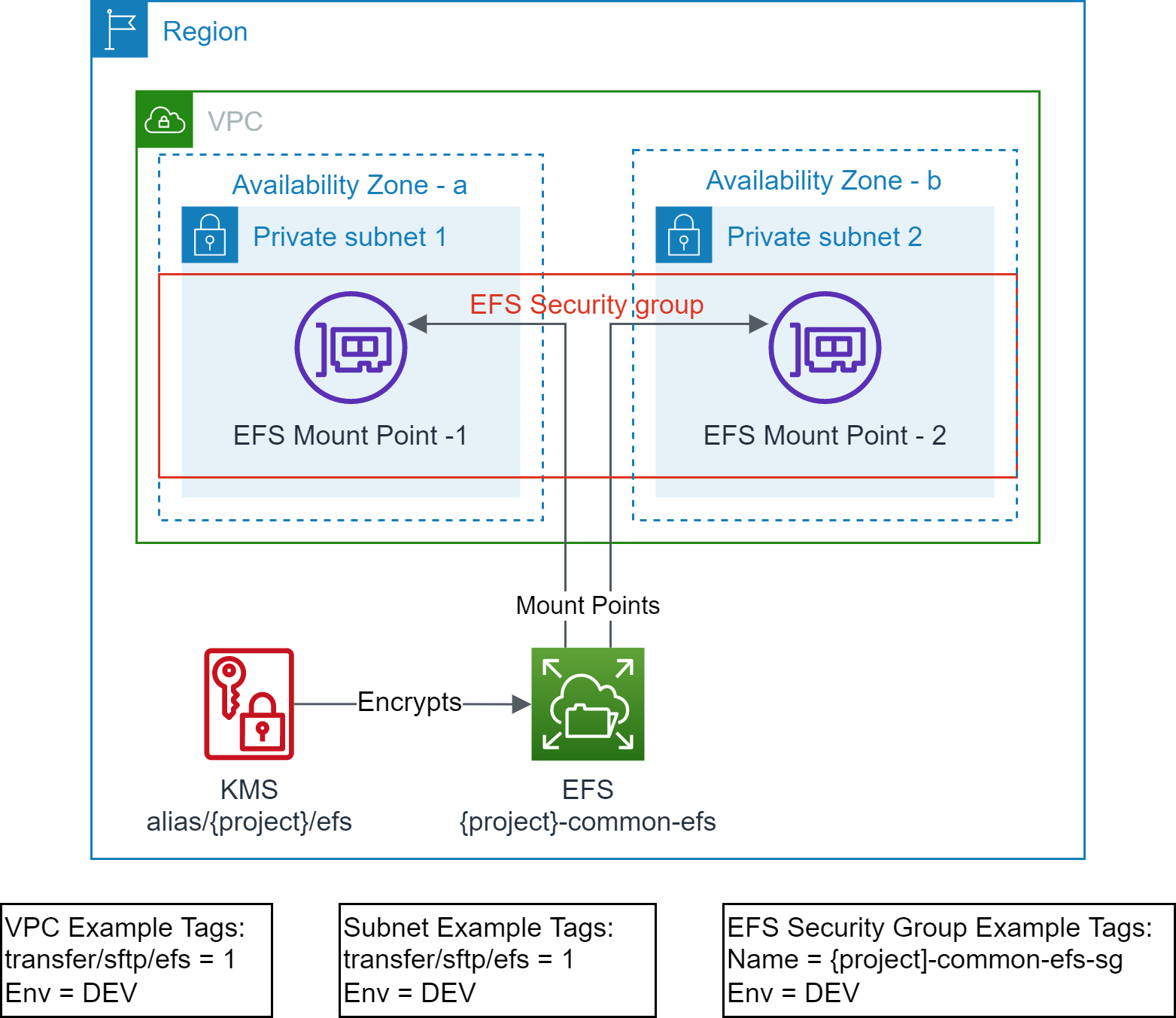

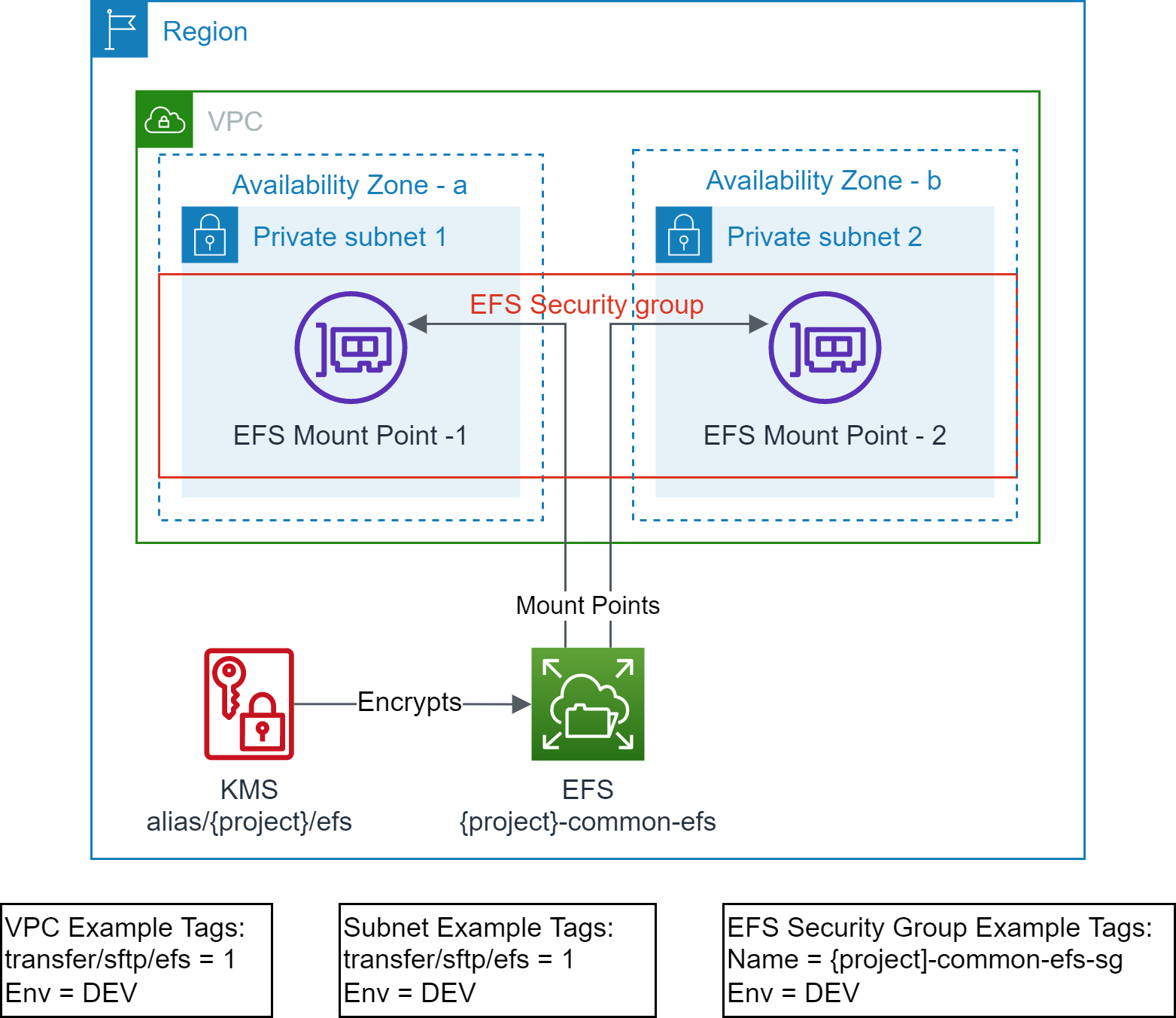

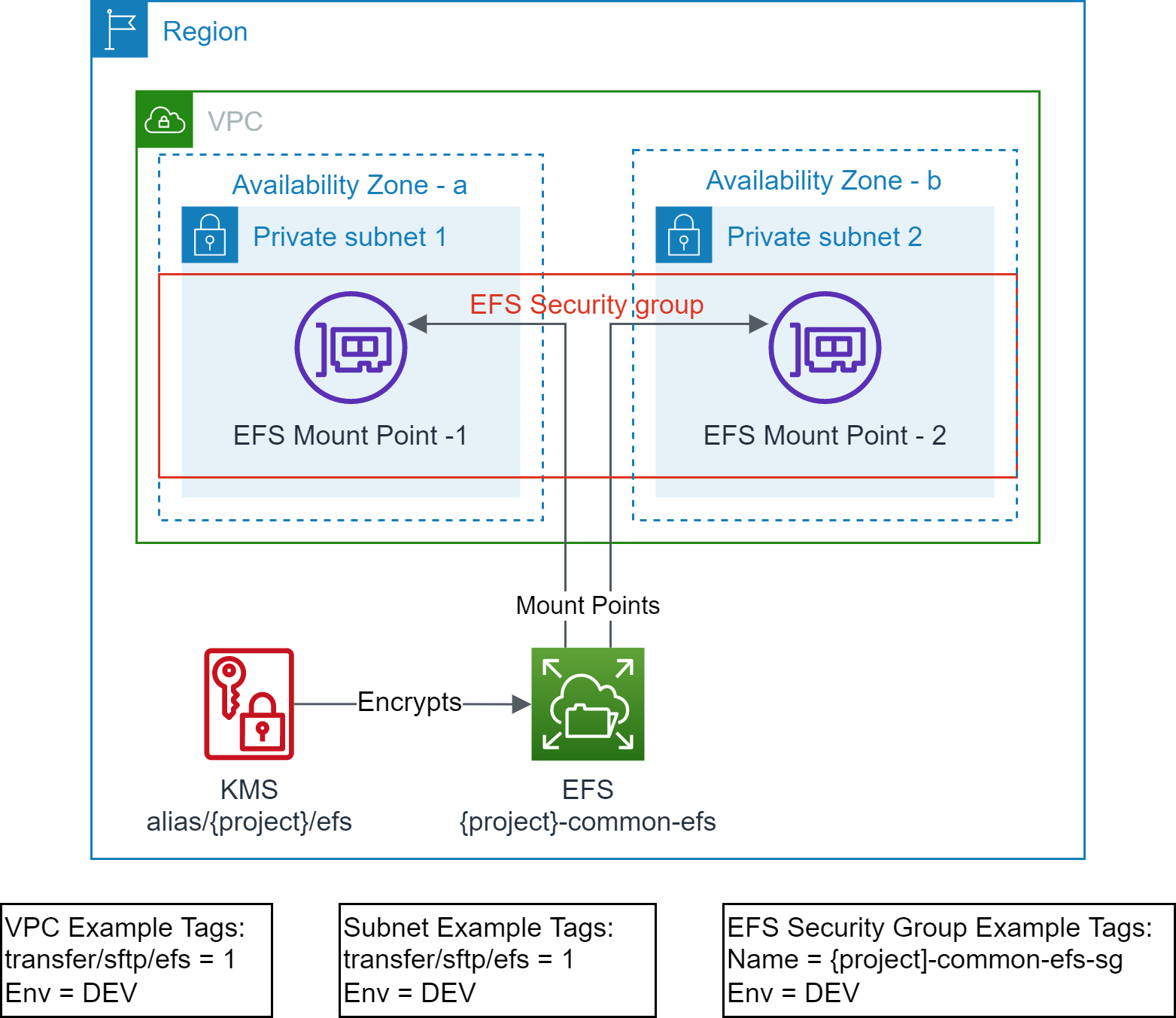

# Scenario 2: Shared EFS and Owned EFS Access Point

This example provisions the resources needed to simulate Scenario 2:

- The EFS file system already exists and is optionally encrypted using KMS.
- The EFS access point does not exist in advance; it is owned by the SFTP server.
- EFS mount targets exist in the target VPC subnets.
- The EFS security group exists and is attached to the EFS mount targets.
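To illustrate this split of ownership, here is a minimal, hypothetical sketch of how a consumer such as the SFTP server stack could look up the shared file system and create its own access point, using the AWS provider's `aws_efs_file_system` data source and `aws_efs_access_point` resource. The variable name, UID/GID values, and root path are illustrative assumptions; this is not the code of this example or of the `common_efs` module.

```hcl
# Hypothetical consumer-side sketch (not part of this example):
# the SFTP server stack references the shared EFS and owns its access point.
variable "efs_id" {
  description = "ID of the shared EFS file system (assumed input)"
  type        = string
}

# Look up the pre-existing, shared file system
data "aws_efs_file_system" "shared" {
  file_system_id = var.efs_id
}

# Access point created and managed by the consumer stack
resource "aws_efs_access_point" "sftp" {
  file_system_id = data.aws_efs_file_system.shared.id

  # POSIX identity enforced for all requests made through this access point
  posix_user {
    uid = 1000 # illustrative value
    gid = 1000 # illustrative value
  }

  # Directory exposed as the root for clients of this access point;
  # EFS creates it with these settings if it does not already exist
  root_directory {
    path = "/dev/demo/sftp/common" # follows /{env}/{project}/{purpose}/{name}

    creation_info {
      owner_uid   = 1000
      owner_gid   = 1000
      permissions = "0755"
    }
  }
}
```

Because the access point lives in the consumer's state, destroying the SFTP stack removes only its access point and leaves the shared file system in place.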

## Prerequisites

- The Terraform backend (state storage) and state-locking providers are identified and bootstrapped.
  - A [bootstrap](../../../bootstrap) module/example is provided that provisions Amazon S3 for Terraform state storage and Amazon DynamoDB for Terraform state locking.
- The target VPC and subnets exist and are identifiable via tags.
  - A [vpc](../../../vpc) example is provided that provisions a VPC, subnets, and related resources with example tagging.
  - This example uses the following tags to identify the target VPC and subnets:

```text
"transfer/sftp/efs" = "1"
"Env" = "DEV"
```
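Tag-based discovery of this kind can be expressed with the AWS provider's `aws_vpc` and `aws_subnets` data sources. The following is a minimal sketch, assuming the `vpc_tags` and `subnet_tags` inputs carry the tags above; the `common_efs` module may implement discovery differently.

```hcl
# Hypothetical sketch of tag-based discovery; the module's actual
# implementation may differ.
variable "vpc_tags" {
  type = map(string)
}

variable "subnet_tags" {
  type = map(string)
}

# The arguments act as filters; planning fails unless exactly one VPC matches
data "aws_vpc" "target" {
  tags = var.vpc_tags
}

# Returns the IDs of all subnets in that VPC that carry every given tag
data "aws_subnets" "target" {
  filter {
    name   = "vpc-id"
    values = [data.aws_vpc.target.id]
  }

  tags = var.subnet_tags
}
```

This is why the VPC tags must uniquely identify one VPC, while the subnet tags may match one or more subnets (available as `data.aws_subnets.target.ids`).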

## Execution

- Change to the `examples/efs/scenario2` folder.
- Modify the `backend "s3"` section in `provider.tf` with the correct values for `region`, `bucket`, `dynamodb_table`, and `key`.
  - Use the provided values as guidance.
- Modify `terraform.tfvars` to suit your requirements.
  - Use the provided values as guidance.
- Make sure you are using the correct AWS profile, one with permission to provision the target resources.
  - Verify the active identity with `aws sts get-caller-identity`.
- Run `terraform init` to initialize Terraform.
- Run `terraform plan` and review the proposed changes.
- Run `terraform apply` and approve the changes to provision the resources.
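For orientation, the `backend "s3"` section edited in the steps above takes roughly the following shape. All values here are placeholders (the bucket and table names are hypothetical); substitute the S3 bucket and DynamoDB table created by the bootstrap example.

```hcl
terraform {
  # Backend blocks cannot reference variables, so values are literals
  backend "s3" {
    region         = "us-east-1"                                # Region of the state bucket
    bucket         = "my-terraform-state-bucket"                # hypothetical bucket name
    key            = "examples/efs/scenario2/terraform.tfstate" # state object path
    dynamodb_table = "my-terraform-lock-table"                  # hypothetical lock table
    encrypt        = true                                       # server-side encryption of state
  }
}
```

After changing any backend value, rerun `terraform init` so Terraform reinitializes the backend.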

## Requirements

| Name | Version |
|------|---------|
| [terraform](#requirement\_terraform) | >= v1.1.9 |
| [aws](#requirement\_aws) | >= 4.13.0 |
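These requirements correspond to a version-constraint block along the lines of the sketch below; the example's actual file layout and exact constraint strings may differ.

```hcl
terraform {
  # Minimum Terraform CLI version
  required_version = ">= 1.1.9"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = ">= 4.13.0" # minimum AWS provider version
    }
  }
}
```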

## Providers

No providers.

## Modules

| Name | Source | Version |
|------|--------|---------|
| [common\_efs](#module\_common\_efs) | github.com/aws-samples/aws-tf-efs//modules/aws/efs | v1.0.0 |

## Resources

No resources.

## Inputs

| Name | Description | Type | Default | Required |
|------|-------------|------|---------|:--------:|
| [env\_name](#input\_env\_name) | Environment name, e.g. `dev`, `prod` | `string` | n/a | yes |
| [project](#input\_project) | Project name used as a prefix/suffix to identify all resources | `string` | n/a | yes |
| [region](#input\_region) | AWS Region for the environment, e.g. `us-east-1` | `string` | n/a | yes |
| [subnet\_tags](#input\_subnet\_tags) | Tags used to discover the target subnets in the VPC; they should identify one or more subnets | `map(string)` | n/a | yes |
| [tags](#input\_tags) | Common and mandatory tags for the resources | `map(string)` | n/a | yes |
| [vpc\_tags](#input\_vpc\_tags) | Tags used to discover the target VPC; they should uniquely identify a VPC | `map(string)` | n/a | yes |
| [efs\_access\_point\_specs](#input\_efs\_access\_point\_specs) | List of EFS access point specs to create; it can be an empty list. Field hints: `efs_name` is a unique name (e.g. `common`), `efs_ap` is a unique name (e.g. `common_sftp`), `root_path` follows `/{env}/{project}/{purpose}/{name}`, `owner_uid` and `owner_gid` e.g. `0`, `root_permission` e.g. `0755` | `list(object({ efs_name = string, efs_ap = string, uid = number, gid = number, secondary_gids = list(number), root_path = string, owner_uid = number, owner_gid = number, root_permission = string, principal_arns = list(string) }))` | `[]` | no |
| [efs\_id](#input\_efs\_id) | EFS file system ID; if not provided, a new EFS file system is created | `string` | `null` | no |
| [kms\_alias](#input\_kms\_alias) | KMS alias used to discover the KMS key for EFS encryption; if not provided, a new CMK is created | `string` | `""` | no |
| [security\_group\_tags](#input\_security\_group\_tags) | Tags used to discover the EFS security group; if not provided, a new EFS security group is created | `map(string)` | `null` | no |
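As a starting point, a `terraform.tfvars` for this scenario could look like the sketch below. Every value is a placeholder assumption. `efs_id`, `kms_alias`, and `security_group_tags` are left at their defaults, so per the table above a new EFS file system, KMS CMK, and security group are created, and no access point is created because the SFTP server owns it.

```hcl
# Illustrative terraform.tfvars for Scenario 2; all values are placeholders.
region   = "us-east-1"
env_name = "dev"
project  = "demo"

tags = {
  Env     = "DEV"
  Project = "demo"
}

# Tags that identify the target VPC and subnets
vpc_tags = {
  "transfer/sftp/efs" = "1"
  "Env"               = "DEV"
}

subnet_tags = {
  "transfer/sftp/efs" = "1"
  "Env"               = "DEV"
}

# Scenario 2: the access point is owned by the SFTP server, so none is created here
efs_access_point_specs = []

# Shape of a single entry, for reference only (not used in this scenario):
# {
#   efs_name        = "common"
#   efs_ap          = "common_sftp"
#   uid             = 1000
#   gid             = 1000
#   secondary_gids  = []
#   root_path       = "/dev/demo/sftp/common"
#   owner_uid       = 0
#   owner_gid       = 0
#   root_permission = "0755"
#   principal_arns  = []
# }
```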

## Outputs

| Name | Description |
|------|-------------|
| [efs](#output\_efs) | Elastic File System info |
| [efs\_ap](#output\_efs\_ap) | Elastic File System Access Points |
| [efs\_kms](#output\_efs\_kms) | KMS keys created for EFS |