{

"cells": [

{

"cell_type": "markdown",

"id": "fa00e4c6",

"metadata": {},

"source": [

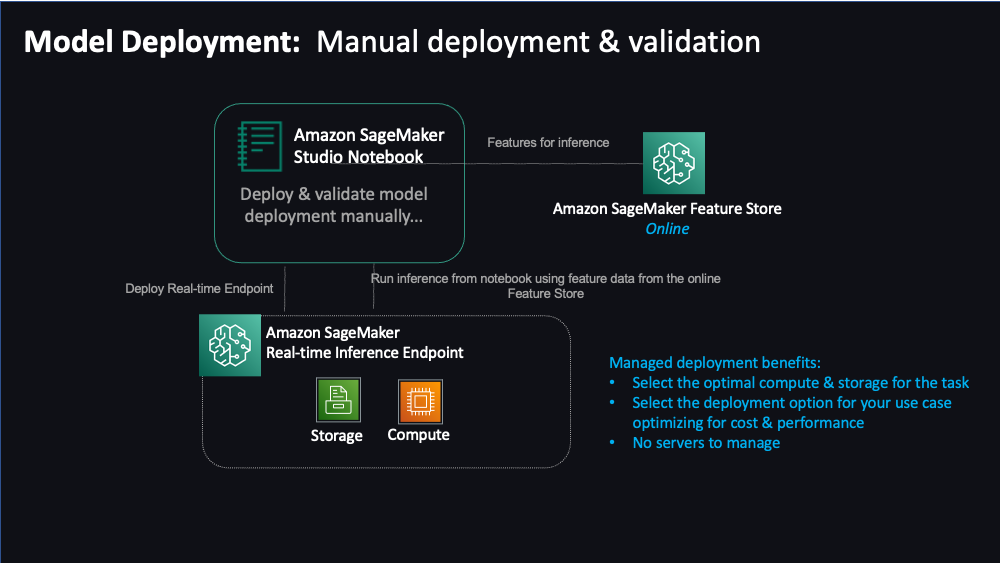

"# MLOps: Initial - Manual deployment & validation \n",

"\n",

"⚠️ PRE-REQUISITE: Before proceeding with this notebook, make sure you have run the `1-data-prep-feature-store.ipynb` and `2-training-registry.ipynb` notebooks."

]

},

{

"cell_type": "markdown",

"id": "14a19cd8",

"metadata": {},

"source": [

"## Contents\n",

"\n",

"- [Introduction](#Introduction)\n",

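"- [SageMaker Endpoint](#SageMaker-Endpoint)\n",

"- [Read from offline Feature Store](#Read-from-offline-Feature-Store)\n",

"- [Read from online Feature Store](#Read-from-online-Feature-Store)"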

]

},

{

"cell_type": "markdown",

"id": "b9bfc677",

"metadata": {},

"source": [

"## Introduction"

]

},

{

"cell_type": "markdown",

"id": "f0e1b87b",

"metadata": {},

"source": [

"This is our third notebook, which explores the model deployment stage of the ML workflow.\n",

"\n",

"Here, we put on the hat of a `Data Scientist` or `ML Engineer` and perform model deployment, which includes fetching the right model and deploying it for inference. This is often done during model development to validate consuming applications or inference code, as well as for ad hoc inference. In the early stages of MLOps adoption, this is often a manual task. However, for models serving live, business-critical production traffic, deployment is typically handled through Infrastructure-as-Code (IaC) or Configuration-as-Code (CaC) outside the experimentation environment. This allows for robust deployment patterns that support advanced deployment strategies (e.g., A/B, shadow, blue/green) as well as automatic rollback capabilities.\n",

"\n",

"For this task, we will use Amazon SageMaker model hosting capabilities to manually deploy the model and perform inference. In this case, we will use a real-time endpoint; however, SageMaker has other deployment options to meet the needs of your use cases. Please refer to the [detailed guidance](https://docs.aws.amazon.com/sagemaker/latest/dg/deploy-model.html) when choosing the right option for your use case. We'll also pull features for inference from the online SageMaker Feature Store. Keep in mind that this is typically done by the consuming application or inference pipeline, but we show it here to illustrate the manual steps often performed during the validation stages of development.\n",

"\n",

"\n",

"\n",

"Let's get started!"

]

},

{

"cell_type": "markdown",

"id": "6980e54b-e544-4b4f-a230-d1d7c4f2fa52",

"metadata": {},

"source": [

"**Imports**"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "504c6d5a",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"!pip install -U sagemaker"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "da514842",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"%store -r"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "1ebce8cc",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"from urllib.parse import urlparse\n",

"import io\n",

"import time\n",

"from sagemaker.model import ModelPackage\n",

"import boto3\n",

"import sagemaker\n",

"from sagemaker.predictor import Predictor\n",

"from sagemaker.serializers import CSVSerializer\n",

"from sagemaker.deserializers import JSONDeserializer\n",

"import numpy as np\n",

"import pathlib\n",

"from sagemaker.feature_store.feature_group import FeatureGroup\n",

"from helper_library import *"

]

},

{

"cell_type": "markdown",

"id": "8759a53f",

"metadata": {},

"source": [

"**Session variables**"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "d951f780",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"# Useful SageMaker variables\n",

"sagemaker_session = sagemaker.Session()\n",

"bucket = sagemaker_session.default_bucket()\n",

"role_arn = sagemaker.get_execution_role()\n",

"region = sagemaker_session.boto_region_name\n",

"s3_client = boto3.client('s3', region_name=region)\n",

"sagemaker_client = boto3.client('sagemaker', region_name=region)"

]

},

{

"cell_type": "markdown",

"id": "5774fc61",

"metadata": {},

"source": [

"## SageMaker Endpoint"

]

},

{

"cell_type": "markdown",

"id": "e7786ab5",

"metadata": {},

"source": [

"You can deploy your trained model as a [SageMaker hosted endpoint](https://docs.aws.amazon.com/sagemaker/latest/dg/realtime-endpoints-deployment.html), which serves real-time predictions. The endpoint retrieves the model created during training and deploys it within a SageMaker scikit-learn container. All of this can be accomplished with one line of code. Note that it will take several minutes to deploy the model to a hosted endpoint."

]

},

{

"cell_type": "markdown",

"id": "cfdb209e",

"metadata": {},

"source": [

"Let's get the model we registered in the Model Registry."

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "79882a19",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"random_forest_regressor_model = ModelPackage(\n",

" role_arn,\n",

" model_package_arn=model_package_arn,\n",

" name=model_name\n",

")"

]

},

{

"cell_type": "markdown",

"id": "81b0e02f",

"metadata": {},

"source": [

"Its current status is `PendingApproval`. To use this model for offline predictions or as a real-time endpoint, we should adopt the practice of setting the status to `Approved` prior to deployment. You can optionally register your model in `Approved` status at the time of model registration; however, keeping approval as a separate step allows for a secondary peer-review approval workflow."

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "0378ac9e",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"sagemaker_client.update_model_package(\n",

" ModelPackageArn=random_forest_regressor_model.model_package_arn,\n",

" ModelApprovalStatus='Approved'\n",

")"

]

},

{

"cell_type": "markdown",

"id": "018ecb31",

"metadata": {},

"source": [

"Now we can deploy it!"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "7267a878",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"from IPython.display import display, HTML\n",

"endpoint_name = f'{model_name}-endpoint-' + time.strftime('%Y-%m-%d-%H-%M-%S')\n",

"display(\n",

" HTML(\n",

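" # Console link reconstructed from stripped HTML; the URL pattern is an assumption\n",

" '<b>Review <a target=\"blank\" href=\"https://console.aws.amazon.com/sagemaker/home?region={}#/endpoints/{}\">The Endpoint</a> After About 5 Minutes</b>'.format(\n",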

" region, endpoint_name\n",

" )\n",

" )\n",

")\n",

"random_forest_regressor_model.deploy(\n",

" initial_instance_count=1,\n",

" instance_type='ml.t2.medium',\n",

" endpoint_name=endpoint_name\n",

")"

]

},

{

"cell_type": "markdown",

"id": "171d75d9",

"metadata": {},

"source": [

"Let's test this real-time endpoint by passing it some data and getting a real-time prediction back."

]

},

{

"cell_type": "markdown",

"id": "b666e361",

"metadata": {},

"source": [

"## Read from offline Feature Store\n",

"\n",

"Pull data from the offline feature store to quickly validate the model deployment."

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "986764c9",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"fs_group = FeatureGroup(name=test_feature_group_name, sagemaker_session=sagemaker_session) \n",

"query = fs_group.athena_query()\n",

"table = query.table_name\n",

"query_string = f'SELECT {features_to_select} FROM \"sagemaker_featurestore\".\"{table}\" ORDER BY record_id'\n",

"query_results = 'sagemaker-featurestore'\n",

"output_location = f's3://{bucket}/{query_results}/query_results/'\n",

"query.run(\n",

" query_string=query_string, \n",

" output_location=output_location\n",

")\n",

"query.wait()\n",

"df = query.as_dataframe()\n",

"df.head()"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "7436ebc7",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"# Attach to the SageMaker endpoint\n",

"predictor = Predictor(\n",

" endpoint_name=endpoint_name,\n",

" sagemaker_session=sagemaker_session,\n",

" serializer=CSVSerializer(),\n",

" deserializer=JSONDeserializer()\n",

")\n",

"\n",

"dropped_df = df.drop(columns=[\"price\"])\n",

"\n",

"# Get a real-time prediction (only the first 5 rows, to reduce output size)\n",

"predictor.predict(dropped_df[:5].to_csv(index=False, header=False))"

]

},

{

"cell_type": "markdown",

"id": "0ba03d82",

"metadata": {},

"source": [

"## Read from online Feature Store\n",

"\n",

"In a production environment supporting real-time use cases, the ability to pull features for inference with low latency is critical. Here, we show how you can pull records from the online feature store to use with your deployed real-time endpoint. Again, this is typically done by the consuming application or inference pipeline, but we show it here to illustrate the required steps."

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "71c7dacf",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"customer_record = get_online_feature_group_records(test_feature_group_name, ['1'])"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "933a3d6c",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"record = customer_record[0]"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "598b22d4",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"record.pop('PRICE')\n",

"record.pop('event_time')\n",

"record.pop('record_id')"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "4c1f6f55-17dc-418b-a53e-e26784715a9f",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"record"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "02803f04-8bf7-4654-935f-b5c355a401bd",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"from io import StringIO\n",

"import pandas as pd\n",

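"# Sanity check: a single CSV line is parsed as the header row, so the frame has 0 rows and 8 columns\n",

"pd.read_csv(StringIO('-1.2786684709120923,-0.2238534355557023,-1.4253170501458523,-0.0020177318669674,-0.6703058406786521,1.330874688860325,-0.9766068758234135,1.0010005005003753')).shape == (0, 8)"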

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "24ffcf8f",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"payload = ','.join(str(value) for value in record.values())"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "c096aabe",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"payload"

]

},

{

"cell_type": "markdown",

"id": "2352f567-aac9-4804-8b24-68777aa0a92d",

"metadata": {},

"source": [

"Predict using the features pulled from the online feature store..."

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "5a4eb155",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"predictor.predict(payload)"

]

}

],

"metadata": {

"instance_type": "ml.t3.medium",

"kernelspec": {

"display_name": "Python 3 (Data Science)",

"language": "python",

"name": "python3__SAGEMAKER_INTERNAL__arn:aws:sagemaker:us-east-1:081325390199:image/datascience-1.0"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.7.10"

}

},

"nbformat": 4,

"nbformat_minor": 5

}