{

"cells": [

{

"cell_type": "markdown",

"metadata": {},

"source": [

"# Fraud Detection for Automobile Claims: Mitigate Bias, Train, Register, and Deploy Unbiased Model"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"---\n",

"\n",

"This notebook's CI test result for us-west-2 is as follows. CI test results in other regions can be found at the end of the notebook. \n",

"\n",

"\n",

"\n",

"---"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Background\n",

"\n",

"This notebook is the fourth part of a series of notebooks that demonstrates how to prepare, train, and deploy a model that detects fraudulent auto claims. In this notebook, you detect bias using SageMaker Clarify, mitigate it with SMOTE, train a second model, register it in the SageMaker Model Registry together with the lineage of the artifacts created along the way (data, code, and model metadata), and deploy it. You can run this notebook on its own or in sequence with the other notebooks listed below. See the [README.md](README.md) for more information about the use case implemented by this series of notebooks. \n",

"\n",

"\n",

"1. [Fraud Detection for Automobile Claims: Data Exploration](./0-AutoClaimFraudDetection.ipynb)\n",

"1. [Fraud Detection for Automobile Claims: Data Preparation, Process, and Store Features](./1-data-prep-e2e.ipynb)\n",

"1. [Fraud Detection for Automobile Claims: Train, Check Bias, Tune, Record Lineage, and Register a Model](./2-lineage-train-assess-bias-tune-registry-e2e.ipynb)\n",

"1. **[Fraud Detection for Automobile Claims: Mitigate Bias, Train, Register, and Deploy Unbiased Model](./3-mitigate-bias-train-model2-registry-e2e.ipynb)**\n",

"\n",

"\n",

"## Contents\n",

"1. [Architecture: Train, Check Bias, Tune, Record Lineage, Register Model](#Architecture:-Train,-Check-Bias,-Tune,-Record-Lineage,-Register-Model)\n",

"1. [Develop an Unbiased Model](#Develop-an-Unbiased-Model)\n",

"1. [Analyze Model for Bias and Explainability](#Analyze-Model-for-Bias-and-Explainability)\n",

"1. [View Results of Clarify Job](#View-Results-of-Clarify-Job)\n",

"1. [Configure and Run Explainability Job](#Configure-and-Run-Explainability-Job)\n",

"1. [Create Model Package for the Trained Model](#Create-Model-Package-for-the-Trained-Model)\n",

"1. [Architecture: Deploy and Serve Model](#Architecture:-Deploy-and-Serve-Model)\n",

"1. [Deploy an Approved Model](#Deploy-an-Approved-Model)\n",

"1. [Run Predictions on Claims](#Run-Predictions-on-Claims)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Install and/or update required third-party libraries"

]

},

{

"cell_type": "code",

"execution_count": 3,

"metadata": {

"tags": []

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"\u001b[33mWARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv\u001b[0m\u001b[33m\n",

"\u001b[0m\u001b[31mERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.\n",

"daal4py 2021.3.0 requires daal==2021.2.3, which is not installed.\n",

"awscli 1.27.153 requires botocore==1.29.153, but you have botocore 1.20.112 which is incompatible.\n",

"awscli 1.27.153 requires s3transfer<0.7.0,>=0.6.0, but you have s3transfer 0.4.2 which is incompatible.\n",

"numba 0.54.1 requires numpy<1.21,>=1.17, but you have numpy 1.22.4 which is incompatible.\n",

"sagemaker-datawrangler 0.4.3 requires sagemaker-data-insights==0.4.0, but you have sagemaker-data-insights 0.3.3 which is incompatible.\n",

"sagemaker-studio-analytics-extension 0.0.19 requires boto3<2.0,>=1.26.49, but you have boto3 1.17.70 which is incompatible.\u001b[0m\u001b[31m\n",

"\u001b[0m\u001b[33mWARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv\u001b[0m\u001b[33m\n",

"\u001b[0m"

]

}

],

"source": [

"!python -m pip install -Uq pip\n",

"!python -m pip install -q awswrangler imbalanced-learn==0.7.0 sagemaker==2.23.0 boto3==1.17.70"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Import libraries"

]

},

{

"cell_type": "code",

"execution_count": 5,

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"import json\n",

"import time\n",

"import boto3\n",

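"import sagemaker\n",

"import sagemaker.clarify\n",

"import sagemaker.lineage.artifact\n",

"import sagemaker.lineage.association\n",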

"import numpy as np\n",

"import pandas as pd\n",

"import awswrangler as wr\n",

"import matplotlib.pyplot as plt\n",

"from imblearn.over_sampling import SMOTE\n",

"from sagemaker.xgboost.estimator import XGBoost\n",

"\n",

"from model_package_src.inference_specification import InferenceSpecification\n",

"\n",

"%matplotlib inline"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Set region, boto3 and SageMaker SDK variables"

]

},

{

"cell_type": "code",

"execution_count": 6,

"metadata": {

"tags": []

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Using AWS Region: us-east-1\n"

]

}

],

"source": [

"# The region is read from the current SageMaker session; set `region` directly to use a different one\n",

"import sagemaker\n",

"\n",

"region = sagemaker.Session().boto_region_name\n",

"print(\"Using AWS Region: {}\".format(region))"

]

},

{

"cell_type": "code",

"execution_count": 7,

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"boto3.setup_default_session(region_name=region)\n",

"\n",

"boto_session = boto3.Session(region_name=region)\n",

"\n",

"s3_client = boto3.client(\"s3\", region_name=region)\n",

"\n",

"sagemaker_boto_client = boto_session.client(\"sagemaker\")\n",

"\n",

"sagemaker_session = sagemaker.Session(\n",

"    boto_session=boto_session, sagemaker_client=sagemaker_boto_client\n",

")\n",

"\n",

"sagemaker_role = sagemaker.get_execution_role()\n",

"\n",

"account_id = boto3.client(\"sts\").get_caller_identity()[\"Account\"]"

]

},

{

"cell_type": "code",

"execution_count": 18,

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"# variables used for parameterizing the notebook run\n",

"bucket = sagemaker_session.default_bucket()\n",

"prefix = \"fraud-detect-demo\"\n",

"\n",

"claims_fg_name = f\"{prefix}-claims\"\n",

"customers_fg_name = f\"{prefix}-customers\"\n",

"\n",

"model_2_name = f\"{prefix}-xgboost-post-smote\"\n",

"\n",

"train_data_upsampled_s3_path = f\"s3://{bucket}/{prefix}/data/train/upsampled/train.csv\"\n",

"bias_report_2_output_path = f\"s3://{bucket}/{prefix}/clarify-output/bias-2\"\n",

"explainability_output_path = f\"s3://{bucket}/{prefix}/clarify-output/explainability\"\n",

"\n",

"train_instance_count = 1\n",

"train_instance_type = \"ml.m4.xlarge\"\n",

"\n",

"claify_instance_count = 1\n",

"clairfy_instance_type = \"ml.c5.xlarge\""

]

},

{

"cell_type": "code",

"execution_count": 19,

"metadata": {

"tags": []

},

"outputs": [

{

"data": {

"text/plain": [

"'s3://sagemaker-us-east-1-527468462420/fraud-detect-demo/data/train/upsampled/train.csv'"

]

},

"execution_count": 19,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"train_data_upsampled_s3_path"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

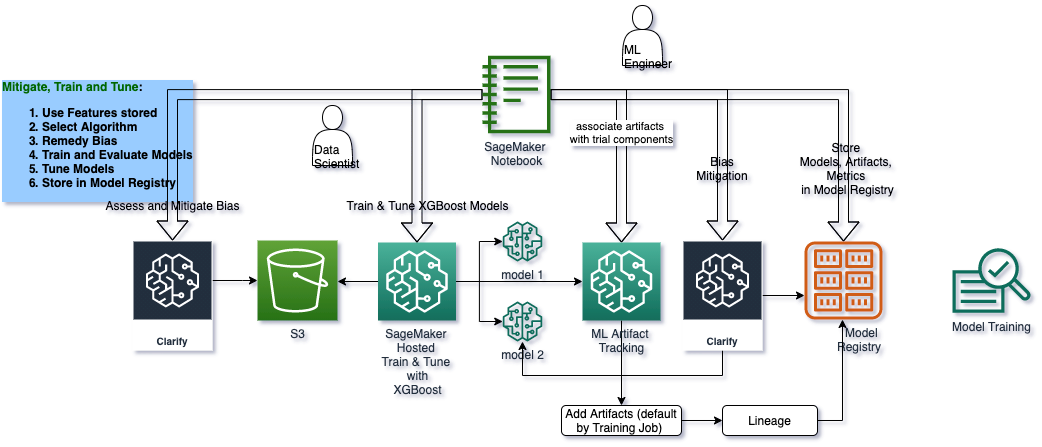

"## Architecture: Train, Check Bias, Tune, Record Lineage, Register Model\n",

"----\n",

"\n",

""

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Develop an Unbiased Model\n",

"----\n",

"\n",

"In this section, you fix the gender imbalance in the training data using SMOTE and train a second model with XGBoost. This model is also saved to the Model Registry and eventually approved for deployment."

]

},

{

"cell_type": "code",

"execution_count": 10,

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"train = pd.read_csv(\"data/train.csv\")\n",

"test = pd.read_csv(\"data/test.csv\")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"\n",

"\n",

"### Resolve class imbalance using SMOTE\n",

"\n",

"To handle the imbalance, we over-sample (upsample) the minority group using [SMOTE (Synthetic Minority Over-sampling Technique)](https://arxiv.org/pdf/1106.1813.pdf). Because the gender column is passed to `fit_resample` as the target, SMOTE balances the two gender groups here rather than the fraud label. If importing SMOTE raises an `ImportError` after installing imbalanced-learn, restart the kernel and try again. "

]

},
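SMOTE does not simply duplicate rows: for each synthetic sample it picks a minority-group row, chooses one of its k nearest neighbours from the same group, and places a new point a random fraction of the way between the two. The NumPy sketch below illustrates that idea on hypothetical toy data. `smote_sketch` is an illustrative name, not part of imbalanced-learn, and it omits the library's sampling strategies and neighbour search options. In this notebook the "minority group" is the set of rows with `customer_gender_female == 1`, because the gender column is passed to `fit_resample` as the target.

```python
import numpy as np


def smote_sketch(X_minority, n_new, k=5, seed=42):
    """Create n_new synthetic rows by interpolating between minority-group neighbours."""
    rng = np.random.default_rng(seed)
    X_minority = np.asarray(X_minority, dtype=float)

    # Pairwise Euclidean distances between all minority rows.
    diffs = X_minority[:, None, :] - X_minority[None, :, :]
    dists = np.sqrt((diffs ** 2).sum(axis=-1))
    np.fill_diagonal(dists, np.inf)  # a row is never its own neighbour

    # Indices of the k nearest minority neighbours of every minority row.
    k = min(k, len(X_minority) - 1)
    neighbours = np.argsort(dists, axis=1)[:, :k]

    synthetic = np.empty((n_new, X_minority.shape[1]))
    for i in range(n_new):
        base = rng.integers(len(X_minority))    # random minority row
        nn = neighbours[base, rng.integers(k)]  # one of its k neighbours
        gap = rng.random()                      # interpolation factor in [0, 1)
        synthetic[i] = X_minority[base] + gap * (X_minority[nn] - X_minority[base])
    return synthetic


# Toy data (hypothetical): 6 majority-group rows and 3 minority-group rows.
X_major = np.array([[0, 0], [0, 1], [1, 0], [1, 1], [0.5, 0.5], [0.2, 0.8]])
X_minor = np.array([[5, 5], [6, 5], [5, 6]])

X_new = smote_sketch(X_minor, n_new=len(X_major) - len(X_minor), k=2)
print(len(X_minor) + len(X_new), len(X_major))  # prints: 6 6
```

Because every synthetic row is a convex combination of two real rows, binary columns in `train` (including the `fraud` label column, which is treated as an ordinary feature here) can take fractional values in the upsampled data whenever the two parent rows disagree.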

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Gender balance before SMOTE"

]

},

{

"cell_type": "code",

"execution_count": 11,

"metadata": {

"tags": []

},

"outputs": [

{

"data": {

"text/plain": [

"0 10031\n",

"1 5969\n",

"Name: customer_gender_female, dtype: int64"

]

},

"execution_count": 11,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"gender = train[\"customer_gender_female\"]\n",

"gender.value_counts()"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Gender balance after SMOTE"

]

},

{

"cell_type": "code",

"execution_count": 12,

"metadata": {

"tags": []

},

"outputs": [

{

"data": {

"text/plain": [

"1 10031\n",

"0 10031\n",

"Name: customer_gender_female, dtype: int64"

]

},

"execution_count": 12,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"sm = SMOTE(random_state=42)\n",

"train_data_upsampled, gender_res = sm.fit_resample(train, gender)\n",

"train_data_upsampled[\"customer_gender_female\"].value_counts()"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Set the hyperparameters\n",

"These are the parameters sent to the training script to train the model. Although they are all defined as \"hyperparameters\" here, they can include XGBoost's [Learning Task Parameters](https://xgboost.readthedocs.io/en/latest/parameter.html#learning-task-parameters), [Tree Booster Parameters](https://xgboost.readthedocs.io/en/latest/parameter.html#parameters-for-tree-booster), or any other parameters you want to configure for XGBoost."

]

},
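In SageMaker script mode, the entries of the `hyperparameters` dict are passed to the entry point as command-line arguments (for example `--max_depth 3`). The training script `xgboost_starter_script.py` is not shown in this notebook, so the sketch below is only a hypothetical illustration of how such a script might read them with `argparse`; the function names `parse_hyperparameters` and `to_xgboost_params` are assumptions.

```python
import argparse


def parse_hyperparameters(argv=None):
    """Parse the hyperparameters a script-mode entry point receives as CLI flags."""
    parser = argparse.ArgumentParser()
    # Flag names mirror the keys of the `hyperparameters` dict in this notebook;
    # `type=` converts the string values back to numbers.
    parser.add_argument("--max_depth", type=int, default=3)
    parser.add_argument("--eta", type=float, default=0.2)
    parser.add_argument("--objective", type=str, default="binary:logistic")
    parser.add_argument("--num_round", type=int, default=100)
    # parse_known_args ignores any extra arguments instead of failing.
    args, _unknown = parser.parse_known_args(argv)
    return args


def to_xgboost_params(args):
    """Split parsed args into a booster params dict and the number of boosting rounds."""
    params = {"max_depth": args.max_depth, "eta": args.eta, "objective": args.objective}
    return params, args.num_round


# Simulate the arguments the training container would pass to the script.
args = parse_hyperparameters(
    ["--max_depth", "3", "--eta", "0.2", "--objective", "binary:logistic", "--num_round", "100"]
)
params, num_round = to_xgboost_params(args)
print(params, num_round)
```

A real script would then hand `params` and `num_round` to something like `xgboost.train(params, dtrain, num_boost_round=num_round)`.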

{

"cell_type": "code",

"execution_count": 13,

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"hyperparameters = {\n",

"    \"max_depth\": \"3\",\n",

"    \"eta\": \"0.2\",\n",

"    \"objective\": \"binary:logistic\",\n",

"    \"num_round\": \"100\",\n",

"}"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Train new model\n"

]

},

{

"cell_type": "code",

"execution_count": 14,

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"train_data_upsampled.to_csv(\"data/upsampled_train.csv\", index=False)\n",

"s3_client.upload_file(\n",

"    Filename=\"data/upsampled_train.csv\",\n",

"    Bucket=bucket,\n",

"    Key=f\"{prefix}/data/train/upsampled/train.csv\",\n",

")"

]

},

{

"cell_type": "code",

"execution_count": 15,

"metadata": {

"tags": []

},

"outputs": [],

"source": [

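"# entry_point is resolved relative to the notebook's working directory (or to source_dir, if set),\n",

"# so the training script must exist there before fit() is called\n",

"xgb_estimator = XGBoost(\n",

"    entry_point=\"xgboost_starter_script.py\",\n",

"    hyperparameters=hyperparameters,\n",

"    role=sagemaker_role,\n",

"    instance_count=train_instance_count,\n",

"    instance_type=train_instance_type,\n",

"    framework_version=\"1.0-1\",\n",

")"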

]

},

{

"cell_type": "code",

"execution_count": 16,

"metadata": {

"scrolled": true,

"tags": []

},

"outputs": [],

"source": [

"if \"training_job_2_name\" not in locals():\n",

"\n",

"    xgb_estimator.fit(inputs={\"train\": train_data_upsampled_s3_path})\n",

"    training_job_2_name = xgb_estimator.latest_training_job.job_name\n",

"\n",

"else:\n",

"\n",

"    print(f\"Using previous training job: {training_job_2_name}\")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Register artifacts"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"training_job_2_info = sagemaker_boto_client.describe_training_job(\n",

"    TrainingJobName=training_job_2_name\n",

")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Code artifact"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"# Return any existing artifacts whose source URI matches the training job's code location\n",

"code_s3_uri = training_job_2_info[\"HyperParameters\"][\"sagemaker_submit_directory\"]\n",

"\n",

"list_response = list(\n",

"    sagemaker.lineage.artifact.Artifact.list(\n",

"        source_uri=code_s3_uri, sagemaker_session=sagemaker_session\n",

"    )\n",

")\n",

"\n",

"# Use the existing artifact if it has already been created; otherwise, create a new one\n",

"if list_response:\n",

"    code_artifact = list_response[0]\n",

"    print(f\"Using existing artifact: {code_artifact.artifact_arn}\")\n",

"else:\n",

"    code_artifact = sagemaker.lineage.artifact.Artifact.create(\n",

"        artifact_name=\"TrainingScript\",\n",

"        source_uri=code_s3_uri,\n",

"        artifact_type=\"Code\",\n",

"        sagemaker_session=sagemaker_session,\n",

"    )\n",

"    print(f\"Create artifact {code_artifact.artifact_arn}: SUCCESSFUL\")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Training data artifact"

]

},

{

"cell_type": "code",

"execution_count": 17,

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"training_data_s3_uri = training_job_2_info[\"InputDataConfig\"][0][\"DataSource\"][\"S3DataSource\"][\n",

"    \"S3Uri\"\n",

"]\n",

"\n",

"list_response = list(\n",

"    sagemaker.lineage.artifact.Artifact.list(\n",

"        source_uri=training_data_s3_uri, sagemaker_session=sagemaker_session\n",

"    )\n",

")\n",

"\n",

"if list_response:\n",

"    training_data_artifact = list_response[0]\n",

"    print(f\"Using existing artifact: {training_data_artifact.artifact_arn}\")\n",

"else:\n",

"    training_data_artifact = sagemaker.lineage.artifact.Artifact.create(\n",

"        artifact_name=\"TrainingData\",\n",

"        source_uri=training_data_s3_uri,\n",

"        artifact_type=\"Dataset\",\n",

"        sagemaker_session=sagemaker_session,\n",

"    )\n",

"    print(f\"Create artifact {training_data_artifact.artifact_arn}: SUCCESSFUL\")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Model artifact"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"trained_model_s3_uri = training_job_2_info[\"ModelArtifacts\"][\"S3ModelArtifacts\"]\n",

"\n",

"list_response = list(\n",

"    sagemaker.lineage.artifact.Artifact.list(\n",

"        source_uri=trained_model_s3_uri, sagemaker_session=sagemaker_session\n",

"    )\n",

")\n",

"\n",

"if list_response:\n",

"    model_artifact = list_response[0]\n",

"    print(f\"Using existing artifact: {model_artifact.artifact_arn}\")\n",

"else:\n",

"    model_artifact = sagemaker.lineage.artifact.Artifact.create(\n",

"        artifact_name=\"TrainedModel\",\n",

"        source_uri=trained_model_s3_uri,\n",

"        artifact_type=\"Model\",\n",

"        sagemaker_session=sagemaker_session,\n",

"    )\n",

"    print(f\"Create artifact {model_artifact.artifact_arn}: SUCCESSFUL\")"

]

},
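The three artifact cells above repeat the same get-or-create pattern: list artifacts by `source_uri`, reuse the first match, and create a new artifact only if none exists, which keeps reruns of the notebook from creating duplicate lineage entities. The sketch below factors that pattern into a hypothetical helper, `get_or_create`, which takes the list and create operations as callables so it can be demonstrated with an in-memory stand-in (`fake_list`, `fake_create`, also hypothetical) instead of SageMaker.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")


def get_or_create(
    list_existing: Callable[[str], List[T]],
    create: Callable[[str], T],
    source_uri: str,
) -> T:
    """Return the first existing record for source_uri, creating one only if none exists."""
    matches = list_existing(source_uri)
    if matches:
        print(f"Using existing artifact for {source_uri}")
        return matches[0]
    created = create(source_uri)
    print(f"Created artifact for {source_uri}")
    return created


# Hypothetical in-memory stand-in for the lineage store, keyed by source URI.
store = {}


def fake_list(uri):
    return [store[uri]] if uri in store else []


def fake_create(uri):
    store[uri] = {"source_uri": uri, "arn": f"arn:example:artifact/{len(store)}"}
    return store[uri]


first = get_or_create(fake_list, fake_create, "s3://example-bucket/model.tar.gz")
second = get_or_create(fake_list, fake_create, "s3://example-bucket/model.tar.gz")
print(first is second)  # prints: True (the second call reuses the stored record)
```

With SageMaker, `list_existing` could wrap `list(sagemaker.lineage.artifact.Artifact.list(source_uri=uri, sagemaker_session=sagemaker_session))` and `create` could wrap `Artifact.create(...)` with the appropriate `artifact_name` and `artifact_type`, exactly as the cells above do inline.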

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Set artifact associations"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"trial_component = sagemaker_boto_client.describe_trial_component(\n",

"    TrialComponentName=training_job_2_name + \"-aws-training-job\"\n",

")\n",

"trial_component_arn = trial_component[\"TrialComponentArn\"]"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Input artifacts"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"input_artifacts = [code_artifact, training_data_artifact]\n",

"\n",

"for a in input_artifacts:\n",

"    try:\n",

"        sagemaker.lineage.association.Association.create(\n",

"            source_arn=a.artifact_arn,\n",

"            destination_arn=trial_component_arn,\n",

"            association_type=\"ContributedTo\",\n",

"            sagemaker_session=sagemaker_session,\n",

"        )\n",

"        print(f\"Associate {trial_component_arn} and {a.artifact_arn}: SUCCESSFUL\\n\")\n",

"    except Exception:\n",

"        print(f\"Association already exists between {trial_component_arn} and {a.artifact_arn}.\\n\")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Output artifacts"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"output_artifacts = [model_artifact]\n",

"\n",

"for a in output_artifacts:\n",

"    try:\n",

"        # Produced: the source (the training job) generated the destination (the model)\n",

"        sagemaker.lineage.association.Association.create(\n",

"            source_arn=trial_component_arn,\n",

"            destination_arn=a.artifact_arn,\n",

"            association_type=\"Produced\",\n",

"            sagemaker_session=sagemaker_session,\n",

"        )\n",

"        print(f\"Associate {trial_component_arn} and {a.artifact_arn}: SUCCESSFUL\\n\")\n",

"    except Exception:\n",

"        print(f\"Association already exists between {trial_component_arn} and {a.artifact_arn}.\\n\")"

]

},
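The associations created above form a small directed graph. This sketch follows the convention described in the SageMaker lineage documentation, as we understand it: a `ContributedTo` edge points from an input (code, data) to the training job's trial component, and a `Produced` edge points from the trial component to the model it generated. The node names are hypothetical stand-ins for the ARNs; walking the edges backwards recovers everything that led to the model.

```python
from collections import defaultdict

# (source, destination, association_type); names are hypothetical stand-ins for ARNs.
edges = [
    ("code_artifact", "training_trial_component", "ContributedTo"),
    ("training_data_artifact", "training_trial_component", "ContributedTo"),
    ("training_trial_component", "model_artifact", "Produced"),
]

# Index each destination by the sources that point at it.
upstream = defaultdict(list)
for source, destination, association_type in edges:
    upstream[destination].append((source, association_type))


def ancestors(node):
    """Walk associations backwards (depth-first) to find everything that led to `node`."""
    found = []
    for parent, _association_type in upstream.get(node, []):
        found.append(parent)
        found.extend(ancestors(parent))
    return found


print(ancestors("model_artifact"))
# prints: ['training_trial_component', 'code_artifact', 'training_data_artifact']
```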

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Analyze Model for Bias and Explainability\n",

"----\n",

"\n",

"Amazon SageMaker Clarify provides tools to help explain how machine learning (ML) models make predictions. These tools can help ML modelers, developers, and other internal stakeholders understand a model's characteristics as a whole before deployment, and debug the predictions it makes after deployment. Transparency about how ML models arrive at their predictions is also critical to consumers and regulators, who need to trust the model's predictions before they accept decisions based on them. SageMaker Clarify uses a model-agnostic feature attribution approach, which you can use to understand why a model made a prediction after training and to provide per-instance explanations during inference. It includes a scalable and efficient implementation of SHAP ([see paper](https://papers.nips.cc/paper/2017/file/8a20a8621978632d76c43dfd28b67767-Paper.pdf)), which is based on the Shapley value from cooperative game theory and assigns each feature an importance value for a particular prediction. "

]

},
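A Shapley value averages a feature's marginal contribution over every subset (coalition) S of the other features, weighting each coalition by |S|! (n - |S| - 1)! / n!. The exact enumeration below is a hypothetical toy illustration: features outside a coalition are replaced by a baseline value, and the scoring function `toy_model` is made up. Clarify does not enumerate all subsets; as documented, it approximates SHAP values (Kernel SHAP with a baseline), which is what makes it practical for models with many features.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for one instance by enumerating all feature subsets.

    Features outside a coalition are replaced by their baseline value.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for subset in combinations(others, size):
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                with_i = [x[j] if (j in subset or j == i) else baseline[j] for j in range(n)]
                without_i = [x[j] if j in subset else baseline[j] for j in range(n)]
                phi[i] += weight * (model(with_i) - model(without_i))
    return phi


def toy_model(f):
    """Hypothetical scoring function with an interaction between features 0 and 2."""
    return 2.0 * f[0] + 1.0 * f[1] + 3.0 * f[0] * f[2]


x = [1.0, 1.0, 1.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print([round(v, 3) for v in phi])  # prints: [3.5, 1.0, 1.5]
print(round(sum(phi), 6), toy_model(x) - toy_model(baseline))  # prints: 6.0 6.0
```

The attributions sum to f(x) - f(baseline) (the efficiency property), and the interaction term `3.0 * f[0] * f[2]` is split equally between features 0 and 2.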

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Create model from estimator"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"model_matches = sagemaker_boto_client.list_models(NameContains=model_2_name)['Models']\n",

"\n",

"if not model_matches:\n",

"\n",

"    model_2 = sagemaker_session.create_model_from_job(\n",

"        name=model_2_name,\n",

"        training_job_name=training_job_2_info['TrainingJobName'],\n",

"        role=sagemaker_role,\n",

"        image_uri=training_job_2_info['AlgorithmSpecification']['TrainingImage'])\n",

"    %store model_2_name\n",

"\n",

"else:\n",

"\n",

"    print(f\"Model {model_2_name} already exists.\")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"\n",

"\n",

"### Check for data set bias and model bias\n",

"\n",

"With SageMaker, we can check for pre-training and post-training bias. Pre-training metrics show pre-existing bias in the data, while post-training metrics show bias in the model's predictions. Using the SageMaker SDK, we can specify which groups to check for bias and which metrics to report. \n",
"\n",
"To run the full Clarify job, you must un-comment the code in the cell below. Running the job takes about 15 minutes. If you want to save time, skip it: the results cell that follows loads a pre-generated output if no bias job was run."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"clarify_processor = sagemaker.clarify.SageMakerClarifyProcessor(\n",

" role=sagemaker_role,\n",

" instance_count=1,\n",

" instance_type=\"ml.c4.xlarge\",\n",

" sagemaker_session=sagemaker_session,\n",

")\n",

"\n",

"bias_data_config = sagemaker.clarify.DataConfig(\n",

" s3_data_input_path=train_data_upsampled_s3_path,\n",

" s3_output_path=bias_report_2_output_path,\n",

" label=\"fraud\",\n",

" headers=train.columns.to_list(),\n",

" dataset_type=\"text/csv\",\n",

")\n",

"\n",

"model_config = sagemaker.clarify.ModelConfig(\n",

" model_name=model_2_name,\n",

" instance_type=train_instance_type,\n",

" instance_count=1,\n",

" accept_type=\"text/csv\",\n",

")\n",

"\n",

"predictions_config = sagemaker.clarify.ModelPredictedLabelConfig(probability_threshold=0.5)\n",

"\n",

"bias_config = sagemaker.clarify.BiasConfig(\n",

" label_values_or_threshold=[0],\n",

" facet_name=\"customer_gender_female\",\n",

" facet_values_or_threshold=[1],\n",

")\n",

"\n",

"# # un-comment the code below to run the whole job\n",
"\n",
"# if 'clarify_bias_job_2_name' not in locals():\n",
"\n",
"#     clarify_processor.run_bias(\n",
"#         data_config=bias_data_config,\n",
"#         bias_config=bias_config,\n",
"#         model_config=model_config,\n",
"#         model_predicted_label_config=predictions_config,\n",
"#         pre_training_methods='all',\n",
"#         post_training_methods='all')\n",
"\n",
"#     clarify_bias_job_2_name = clarify_processor.latest_job.name\n",
"#     %store clarify_bias_job_2_name\n",
"\n",
"# else:\n",
"#     print(f'Clarify job {clarify_bias_job_2_name} has already run successfully.')"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## View Results of Clarify Job\n",

"----\n",

"\n",

"Running Clarify on your dataset or model can take ~15 minutes. If you don't have time to run the job, you can view the pre-generated results included with this demo. Otherwise, you can run the job by un-commenting the code in the cell above."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"if \"clarify_bias_job_2_name\" in locals():\n",

" s3_client.download_file(\n",

" Bucket=bucket,\n",

" Key=f\"{prefix}/clarify-output/bias-2/analysis.json\",\n",

" Filename=\"clarify_output/bias_2/analysis.json\",\n",

" )\n",

" print(f\"Downloaded analysis from previous Clarify job: {clarify_bias_job_2_name}\\n\")\n",

"else:\n",

" print(f\"Loading pre-generated analysis file...\\n\")\n",

"\n",

"with open(\"clarify_output/bias_1/analysis.json\", \"r\") as f:\n",

" bias_analysis = json.load(f)\n",

"\n",

"results = bias_analysis[\"pre_training_bias_metrics\"][\"facets\"][\"customer_gender_female\"][0][\n",

" \"metrics\"\n",

"][1]\n",

"print(\"Pre-training bias metric from the bias_1 analysis:\")\n",
"print(json.dumps(results, indent=4))\n",
"\n",
"with open(\"clarify_output/bias_2/analysis.json\", \"r\") as f:\n",
" bias_analysis = json.load(f)\n",
"\n",
"results = bias_analysis[\"pre_training_bias_metrics\"][\"facets\"][\"customer_gender_female\"][0][\n",
" \"metrics\"\n",
"][1]\n",
"print(\"Pre-training bias metric from the bias_2 analysis (upsampled training data):\")\n",
"print(json.dumps(results, indent=4))"

]

},
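{
"cell_type": "markdown",
"metadata": {},
"source": [
"The cell above prints one pre-training bias metric from each of two Clarify analyses (`bias_1` and `bias_2`). The sketch below is a rough illustration of what such metrics measure. It uses pandas to compute two of Clarify's pre-training metrics by hand: class imbalance (CI) and difference in proportions of labels (DPL). It assumes Clarify's convention that facet *d* is the group selected by the facet value (here `customer_gender_female == 1`) and facet *a* is everyone else. It also assumes the favorable label is `fraud == 0`, matching `label_values_or_threshold=[0]` above. The helper `pretraining_bias` and the toy data are hypothetical. This is a sanity-check aid, not Clarify's implementation.\n",
"\n",
"```python\n",
"import pandas as pd\n",
"\n",
"\n",
"def pretraining_bias(df, facet, facet_value, label, favorable):\n",
"    # Facet d is the group selected by facet_value; facet a is everyone else\n",
"    is_d = df[facet] == facet_value\n",
"    n_d, n_a = int(is_d.sum()), int((~is_d).sum())\n",
"    # Class imbalance: relative difference in facet sizes, in [-1, 1]\n",
"    ci = (n_a - n_d) / (n_a + n_d)\n",
"    # Share of favorable labels within each facet\n",
"    q_a = (df.loc[~is_d, label] == favorable).mean()\n",
"    q_d = (df.loc[is_d, label] == favorable).mean()\n",
"    # Difference in proportions of labels\n",
"    return {'CI': ci, 'DPL': float(q_a - q_d)}\n",
"\n",
"\n",
"# Hypothetical toy data: six rows in facet a, two rows in facet d\n",
"toy = pd.DataFrame({\n",
"    'customer_gender_female': [0, 0, 0, 0, 0, 0, 1, 1],\n",
"    'fraud': [0, 0, 0, 1, 0, 0, 0, 1],\n",
"})\n",
"print(pretraining_bias(toy, 'customer_gender_female', 1, 'fraud', 0))\n",
"```\n",
"\n",
"For both metrics, values near 0 indicate balance between the facets. Positive values mean facet *a* is larger (CI) or receives the favorable label more often (DPL)."
]
},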

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Configure and Run Explainability Job\n",

"----\n",

"\n",

"To run the full Clarify explainability job, you must un-comment the code in the cell below. Running the job takes about 15 minutes. If you want to save time, skip it: the results cell that follows loads a pre-generated output if no explainability job was run."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"model_config = sagemaker.clarify.ModelConfig(\n",

" model_name=model_2_name,\n",

" instance_type=train_instance_type,\n",

" instance_count=1,\n",

" accept_type=\"text/csv\",\n",

")\n",

"\n",

"shap_config = sagemaker.clarify.SHAPConfig(\n",

" baseline=[train.median().values[1:].tolist()], num_samples=100, agg_method=\"mean_abs\"\n",

")\n",

"\n",

"explainability_data_config = sagemaker.clarify.DataConfig(\n",

" s3_data_input_path=train_data_upsampled_s3_path,\n",

" s3_output_path=explainability_output_path,\n",

" label=\"fraud\",\n",

" headers=train.columns.to_list(),\n",

" dataset_type=\"text/csv\",\n",

")\n",

"\n",

"# # un-comment the code below to run the whole job\n",
"\n",
"# if \"clarify_expl_job_name\" not in locals():\n",
"\n",
"#     clarify_processor.run_explainability(\n",
"#         data_config=explainability_data_config,\n",
"#         model_config=model_config,\n",
"#         explainability_config=shap_config)\n",
"\n",
"#     clarify_expl_job_name = clarify_processor.latest_job.name\n",
"#     %store clarify_expl_job_name\n",
"\n",
"# else:\n",
"#     print(f'Clarify job {clarify_expl_job_name} has already run successfully.')"

]

},
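{
"cell_type": "markdown",
"metadata": {},
"source": [
"The `SHAPConfig` above sets `agg_method` to `mean_abs`, which turns per-record SHAP values into the global importances that the shortcut cell plots. For each feature, it averages the absolute SHAP value over all explained records, so positive and negative contributions do not cancel out. The sketch below reproduces that arithmetic with NumPy on a made-up 3 x 2 array of SHAP values; the values and feature names are hypothetical.\n",
"\n",
"```python\n",
"import numpy as np\n",
"\n",
"# Hypothetical per-record SHAP values: 3 records (rows) x 2 features (columns)\n",
"local_shap = np.array([\n",
"    [0.20, -0.05],\n",
"    [-0.10, 0.15],\n",
"    [0.30, -0.10],\n",
"])\n",
"feature_names = ['feature_x', 'feature_y']\n",
"\n",
"# mean_abs: mean of the absolute SHAP values for each feature (column)\n",
"global_shap = np.abs(local_shap).mean(axis=0)\n",
"\n",
"# Rank features by global importance, largest first\n",
"ranking = sorted(zip(feature_names, global_shap), key=lambda t: t[1], reverse=True)\n",
"for name, score in ranking:\n",
"    print(f'{name}: {score:.3f}')\n",
"```\n",
"\n",
"Because signs are discarded, a feature that pushes some predictions up and others down can still rank as highly important."
]
},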

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### View Clarify explainability results (shortcut)\n",

"Running Clarify on your dataset or model can take ~15 minutes. If you don't have time to run the job, you can view the pre-generated results included with this demo. Otherwise, you can run the job by un-commenting the code in the cell above."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"if \"clarify_expl_job_name\" in locals():\n",

" s3_client.download_file(\n",

" Bucket=bucket,\n",

" Key=f\"{prefix}/clarify-output/explainability/analysis.json\",\n",

" Filename=\"clarify_output/explainability/analysis.json\",\n",

" )\n",

" print(f\"Downloaded analysis from previous Clarify job: {clarify_expl_job_name}\\n\")\n",

"else:\n",

" print(f\"Loading pre-generated analysis file...\\n\")\n",

"\n",

"with open(\"clarify_output/explainability/analysis.json\", \"r\") as f:\n",

" analysis_result = json.load(f)\n",

"\n",

"shap_values = pd.DataFrame(analysis_result[\"explanations\"][\"kernel_shap\"][\"label0\"])\n",

"importances = shap_values[\"global_shap_values\"].sort_values(ascending=False)\n",

"fig, ax = plt.subplots()\n",

"n = 5\n",

"y_pos = np.arange(n)\n",

"importance_scores = importances.values[:n]\n",

"y_label = importances.index[:n]\n",

"ax.barh(y_pos, importance_scores, align=\"center\")\n",

"ax.set_yticks(y_pos)\n",

"ax.set_yticklabels(y_label)\n",

"ax.invert_yaxis()\n",

"ax.set_xlabel(\"SHAP Value (impact on model output)\");"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"To see the autogenerated SageMaker Clarify report, run the following code and use the output link to open the report."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"from IPython.display import FileLink, display\n",

"\n",

"display(\n",

" \"Click link below to view the SageMaker Clarify report\", FileLink(\"clarify_output/report.pdf\")\n",

")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### What is SHAP?\n",

"SHAP is the method used for calculating explanations in this solution.\n",

"Unlike other feature attribution methods, such as single feature\n",

"permutation, SHAP tries to disentangle the effect of a single feature by\n",

"looking at all possible combinations of features.\n",

"\n",

"[SHAP](https://github.com/slundberg/shap) (Lundberg et al. 2017) stands\n",

"for SHapley Additive exPlanations. 'Shapley' relates to a game theoretic\n",

"concept called [Shapley\n",

"values](https://en.wikipedia.org/wiki/Shapley_value) that is used to\n",

"create the explanations. A Shapley value describes the average marginal\n",
"contribution of each 'player' across all possible 'coalitions'.\n",
"Using this in a machine learning context, a Shapley value describes the\n",
"average marginal contribution of each feature across all possible sets\n",
"of features. 'Additive' relates to the fact that these Shapley values, added\n",
"to a baseline (expected) prediction, sum to the final model prediction.\n",

"\n",

"As an example, we might start off with a baseline credit default risk of\n",

"10%. Given a set of features, we can calculate the Shapley value for each\n",

"feature. Summing together all the Shapley values, we might obtain a\n",

"cumulative value of +30%. Given the same set of features, we therefore\n",

"expect our model to return a credit default risk of 40% (i.e. 10% + 30%)."

]

},
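{
"cell_type": "markdown",
"metadata": {},
"source": [
"The sketch below makes the coalition idea concrete by computing exact Shapley values by brute force for a hypothetical three-feature model `f`. Features outside a coalition take their values from a baseline record, which is one common convention. Kernel SHAP (the `kernel_shap` method whose results are loaded above) approximates these values by sampling rather than enumerating every coalition. The model, baseline, and record are made up. The last line checks the additivity property: the baseline prediction plus the sum of the Shapley values equals the prediction for the record.\n",
"\n",
"```python\n",
"from itertools import combinations\n",
"from math import factorial\n",
"\n",
"\n",
"def f(x):\n",
"    # Hypothetical model with an interaction between features 0 and 1\n",
"    return 0.1 + 0.2 * x[0] + 0.1 * x[1] + 0.3 * x[0] * x[1] - 0.05 * x[2]\n",
"\n",
"\n",
"def coalition_value(coalition, x, baseline):\n",
"    # Features in the coalition take the record's values; the rest take the baseline's\n",
"    z = [x[i] if i in coalition else baseline[i] for i in range(len(x))]\n",
"    return f(z)\n",
"\n",
"\n",
"def shapley_values(x, baseline):\n",
"    n = len(x)\n",
"    phi = []\n",
"    for i in range(n):\n",
"        others = [j for j in range(n) if j != i]\n",
"        total = 0.0\n",
"        for size in range(n):\n",
"            for subset in combinations(others, size):\n",
"                s = set(subset)\n",
"                # Shapley weight |S|! (n - |S| - 1)! / n!\n",
"                weight = factorial(len(s)) * factorial(n - len(s) - 1) / factorial(n)\n",
"                gain = coalition_value(s | {i}, x, baseline) - coalition_value(s, x, baseline)\n",
"                total += weight * gain\n",
"        phi.append(total)\n",
"    return phi\n",
"\n",
"\n",
"baseline = [0.0, 0.0, 0.0]\n",
"record = [1.0, 1.0, 2.0]\n",
"phi = shapley_values(record, baseline)\n",
"print('Shapley values:', [round(p, 4) for p in phi])\n",
"print('baseline + sum:', round(f(baseline) + sum(phi), 4), 'prediction:', round(f(record), 4))\n",
"```\n",
"\n",
"The interaction term `0.3 * x[0] * x[1]` is split evenly between features 0 and 1. That is why their Shapley values (0.35 and 0.25) each exceed their linear contributions (0.2 and 0.1) by 0.15."
]
},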

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Create Model Package for the Trained Model\n",

"----\n"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Create and upload second model metrics report"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"model_metrics_report = {\"binary_classification_metrics\": {}}\n",

"for metric in training_job_2_info[\"FinalMetricDataList\"]:\n",

" stat = {metric[\"MetricName\"]: {\"value\": metric[\"Value\"], \"standard_deviation\": \"NaN\"}}\n",

" model_metrics_report[\"binary_classification_metrics\"].update(stat)\n",

"\n",

"with open(\"training_metrics.json\", \"w\") as f:\n",

" json.dump(model_metrics_report, f)\n",

"\n",

"metrics_s3_key = (\n",

" f\"{prefix}/training_jobs/{training_job_2_info['TrainingJobName']}/training_metrics.json\"\n",

")\n",

"s3_client.upload_file(Filename=\"training_metrics.json\", Bucket=bucket, Key=metrics_s3_key)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Define inference specification"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"mp_inference_spec = InferenceSpecification().get_inference_specification_dict(\n",

" ecr_image=training_job_2_info[\"AlgorithmSpecification\"][\"TrainingImage\"],\n",

" supports_gpu=False,\n",

" supported_content_types=[\"text/csv\"],\n",

" supported_mime_types=[\"text/csv\"],\n",

")\n",

"\n",

"mp_inference_spec[\"InferenceSpecification\"][\"Containers\"][0][\"ModelDataUrl\"] = training_job_2_info[\n",

" \"ModelArtifacts\"\n",

"][\"S3ModelArtifacts\"]"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Define model metrics"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"model_metrics = {\n",

" \"ModelQuality\": {\n",

" \"Statistics\": {\n",

" \"ContentType\": \"application/json\",\n",

" \"S3Uri\": f\"s3://{bucket}/{metrics_s3_key}\",\n",

" }\n",

" },\n",

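" \"Bias\": {\n",
" \"Report\": {\n",
" \"ContentType\": \"application/json\",\n",
" \"S3Uri\": f\"{bias_report_2_output_path}/analysis.json\",\n",
" }\n",
" },\n",
" \"Explainability\": {\n",
" \"Report\": {\n",
" \"ContentType\": \"application/json\",\n",
" \"S3Uri\": f\"{explainability_output_path}/analysis.json\",\n",
" }\n",
" },\n",
"}"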

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Register second model package to Model Package Group"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"mpg_name = prefix\n",

"mp_input_dict = {\n",

" \"ModelPackageGroupName\": mpg_name,\n",

" \"ModelPackageDescription\": \"XGBoost classifier to detect insurance fraud with SMOTE.\",\n",

" \"ModelApprovalStatus\": \"PendingManualApproval\",\n",

" \"ModelMetrics\": model_metrics,\n",

"}\n",

"\n",

"mp_input_dict.update(mp_inference_spec)\n",

"mp2_response = sagemaker_boto_client.create_model_package(**mp_input_dict)\n",

"mp2_arn = mp2_response[\"ModelPackageArn\"]"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Check status of model package creation"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"mp_info = sagemaker_boto_client.describe_model_package(\n",

" ModelPackageName=mp2_response[\"ModelPackageArn\"]\n",

")\n",

"mp_status = mp_info[\"ModelPackageStatus\"]\n",

"\n",

"while mp_status not in [\"Completed\", \"Failed\"]:\n",

" time.sleep(5)\n",

" mp_info = sagemaker_boto_client.describe_model_package(\n",

" ModelPackageName=mp2_response[\"ModelPackageArn\"]\n",

" )\n",

" mp_status = mp_info[\"ModelPackageStatus\"]\n",

" print(f\"model package status: {mp_status}\")\n",

"print(f\"model package status: {mp_status}\")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### View both models in the registry"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"sagemaker_boto_client.list_model_packages(ModelPackageGroupName=mpg_name)[\"ModelPackageSummaryList\"]"

]

},

{

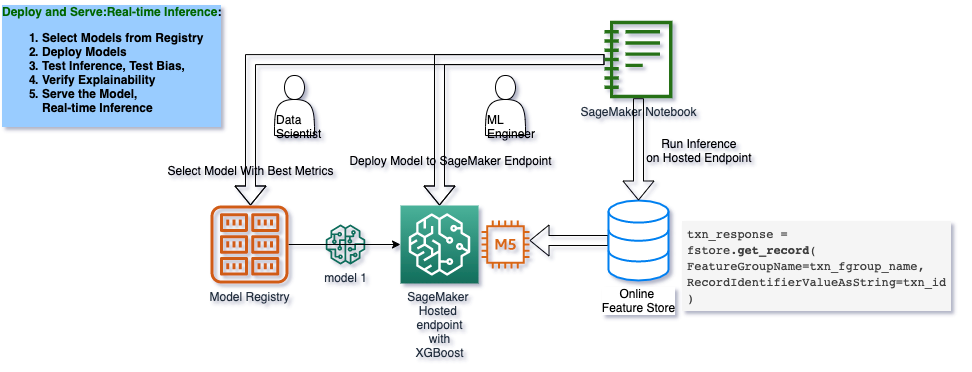

"cell_type": "markdown",

"metadata": {},

"source": [

" \n",

"\n",

"## Architecture: Deploy and Serve Model\n",

"----\n",

"\n",

"Now that we have trained a model, we can deploy and serve it. The follwoing picture shows the architecture for doing so.\n",

"\n",

""

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# variables used for parameterizing the notebook run\n",

"endpoint_name = f\"{model_2_name}-endpoint\"\n",

"endpoint_instance_count = 1\n",

"endpoint_instance_type = \"ml.m4.xlarge\"\n",

"\n",

"predictor_instance_count = 1\n",

"predictor_instance_type = \"ml.c5.xlarge\"\n",

"batch_transform_instance_count = 1\n",

"batch_transform_instance_type = \"ml.c5.xlarge\""

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Deploy an Approved Model and Make a Prediction\n",

"----"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Approve the second model\n",

"In the real-life MLOps lifecycle, a model package gets approved after evaluation by data scientists, subject matter experts and auditors."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

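"# Sort newest first so that [0] is the package registered above\n",
"second_model_package = sagemaker_boto_client.list_model_packages(\n",
" ModelPackageGroupName=mpg_name, SortBy=\"CreationTime\", SortOrder=\"Descending\"\n",
")[\"ModelPackageSummaryList\"][0]\n",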

"model_package_update = {\n",

" \"ModelPackageArn\": second_model_package[\"ModelPackageArn\"],\n",

" \"ModelApprovalStatus\": \"Approved\",\n",

"}\n",

"\n",

"update_response = sagemaker_boto_client.update_model_package(**model_package_update)"

]

},
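{
"cell_type": "markdown",
"metadata": {},
"source": [
"The approval cell relies on where the second package appears in `ModelPackageSummaryList`. If you would rather not depend on the listing order, you can select the newest package explicitly by its `CreationTime`, which boto3 returns as a `datetime`. The sketch below shows that selection on hypothetical summaries shaped like the `list_model_packages` response, so it runs without AWS access. The helper `latest_package_arn` and the ARNs are made up.\n",
"\n",
"```python\n",
"from datetime import datetime, timezone\n",
"\n",
"\n",
"def latest_package_arn(summaries):\n",
"    # Choose the model package summary with the most recent CreationTime\n",
"    newest = max(summaries, key=lambda s: s['CreationTime'])\n",
"    return newest['ModelPackageArn']\n",
"\n",
"\n",
"# Hypothetical entries of list_model_packages(...)['ModelPackageSummaryList']\n",
"summaries = [\n",
"    {'ModelPackageArn': 'arn:aws:sagemaker:us-east-1:111122223333:model-package/demo/1',\n",
"     'CreationTime': datetime(2023, 1, 1, tzinfo=timezone.utc)},\n",
"    {'ModelPackageArn': 'arn:aws:sagemaker:us-east-1:111122223333:model-package/demo/2',\n",
"     'CreationTime': datetime(2023, 1, 2, tzinfo=timezone.utc)},\n",
"]\n",
"print(latest_package_arn(summaries))\n",
"```\n",
"\n",
"In this notebook, you would pass the `ModelPackageSummaryList` returned for `mpg_name`. Keep in mind that `list_model_packages` is paginated, so a group with many versions may need more than one call."
]
},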

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Create an endpoint config and an endpoint\n",

"Deploy the endpoint. This might take about 8 minutes."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"primary_container = {\"ModelPackageName\": second_model_package[\"ModelPackageArn\"]}\n",

"endpoint_config_name = f\"{model_2_name}-endpoint-config\"\n",

"existing_configs = len(\n",

" sagemaker_boto_client.list_endpoint_configs(NameContains=endpoint_config_name, MaxResults=30)[\n",

" \"EndpointConfigs\"\n",

" ]\n",

")\n",

"\n",

"if existing_configs == 0:\n",

" create_ep_config_response = sagemaker_boto_client.create_endpoint_config(\n",

" EndpointConfigName=endpoint_config_name,\n",

" ProductionVariants=[\n",

" {\n",

" \"InstanceType\": endpoint_instance_type,\n",

" \"InitialVariantWeight\": 1,\n",

" \"InitialInstanceCount\": endpoint_instance_count,\n",

" \"ModelName\": model_2_name,\n",

" \"VariantName\": \"AllTraffic\",\n",

" }\n",

" ],\n",

" )"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"existing_endpoints = sagemaker_boto_client.list_endpoints(\n",

" NameContains=endpoint_name, MaxResults=30\n",

")[\"Endpoints\"]\n",

"if not existing_endpoints:\n",

" create_endpoint_response = sagemaker_boto_client.create_endpoint(\n",

" EndpointName=endpoint_name, EndpointConfigName=endpoint_config_name\n",

" )\n",

"\n",

"endpoint_info = sagemaker_boto_client.describe_endpoint(EndpointName=endpoint_name)\n",

"endpoint_status = endpoint_info[\"EndpointStatus\"]\n",

"\n",

"while endpoint_status == \"Creating\":\n",
"    endpoint_info = sagemaker_boto_client.describe_endpoint(EndpointName=endpoint_name)\n",
"    endpoint_status = endpoint_info[\"EndpointStatus\"]\n",
"    print(\"Endpoint status:\", endpoint_status)\n",
"    if endpoint_status == \"Creating\":\n",
"        time.sleep(60)"

]

},
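{
"cell_type": "markdown",
"metadata": {},
"source": [
"Both the model package check and the endpoint check poll a `describe_*` call until the resource leaves a transitional status, with no upper bound on the wait. A minimal sketch of a reusable polling helper with a timeout follows. `wait_for_status` and its parameters are hypothetical names, and the demo uses a fake describe function so it runs without AWS. For endpoints specifically, boto3 also provides a built-in waiter, `sagemaker_boto_client.get_waiter('endpoint_in_service')`.\n",
"\n",
"```python\n",
"import time\n",
"\n",
"\n",
"def wait_for_status(describe, status_key, terminal, poll_seconds=5.0, timeout_seconds=1800.0):\n",
"    # Poll describe() until its status is terminal or the timeout expires\n",
"    deadline = time.monotonic() + timeout_seconds\n",
"    while True:\n",
"        status = describe()[status_key]\n",
"        if status in terminal:\n",
"            return status\n",
"        if time.monotonic() >= deadline:\n",
"            raise TimeoutError(f'status still {status} after {timeout_seconds} s')\n",
"        time.sleep(poll_seconds)\n",
"\n",
"\n",
"# Fake describe function standing in for describe_endpoint\n",
"responses = iter(['Creating', 'Creating', 'InService'])\n",
"\n",
"\n",
"def fake_describe():\n",
"    return {'EndpointStatus': next(responses)}\n",
"\n",
"\n",
"final_status = wait_for_status(fake_describe, 'EndpointStatus', {'InService', 'Failed'}, poll_seconds=0.01)\n",
"print(final_status)\n",
"```\n",
"\n",
"Against the real endpoint, the call would look like `wait_for_status(lambda: sagemaker_boto_client.describe_endpoint(EndpointName=endpoint_name), 'EndpointStatus', {'InService', 'Failed'}, poll_seconds=60)`."
]
},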

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Run Predictions on Claims\n",

"----"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" \n",

"\n",

"### Create a predictor"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"predictor = sagemaker.predictor.Predictor(\n",

" endpoint_name=endpoint_name, sagemaker_session=sagemaker_session\n",

")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Sample a claim from the test data"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"dataset = pd.read_csv(\"data/dataset.csv\")\n",

"train = dataset.sample(frac=0.8, random_state=0)\n",

"test = dataset.drop(train.index)\n",

"sample_policy_id = int(test.sample(1)[\"policy_id\"].iloc[0])"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"test.info()"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Load claims data and define the feature order"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"dataset = pd.read_csv(\"./data/claims_customer.csv\")\n",

"col_order = [\"fraud\"] + list(dataset.drop([\"fraud\", \"Unnamed: 0\", \"policy_id\"], axis=1).columns)\n",

"col_order"

]

},


{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Pull customer data and format the datapoint\n",

"When a customer submits an insurance claim online for instant approval, the insurance company needs to pull customer-specific data and add it to the claim data. You can get this data either from the customer data stored in a CSV file or from an online feature store. The pulled data serves as input for a model prediction.\n",
"\n",
"The datapoint must then match the exact input format the model was trained on, with all features in the correct order. In this example, that order is given by the `col_order` variable defined above."

]

},
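{
"cell_type": "markdown",
"metadata": {},
"source": [
"In the feature store branch of the next cell, `get_record` returns each record as a list of entries with `FeatureName` and `ValueAsString` keys. Formatting the datapoint therefore means looking up every feature in `col_order` (except the label) by name and joining the values with commas. The sketch below isolates that step; the helper `record_to_csv`, the feature names, and the values are hypothetical.\n",
"\n",
"```python\n",
"def record_to_csv(records, col_order, label='fraud'):\n",
"    # Merge one or more Feature Store records into a single name -> value map\n",
"    values = {}\n",
"    for record in records:\n",
"        for feature in record:\n",
"            values[feature['FeatureName']] = feature['ValueAsString']\n",
"    # Emit values in training column order, skipping the label column\n",
"    return ','.join(values[name] for name in col_order if name != label)\n",
"\n",
"\n",
"# Hypothetical records and column order\n",
"claims_record = [\n",
"    {'FeatureName': 'policy_id', 'ValueAsString': '7'},\n",
"    {'FeatureName': 'total_claim_amount', 'ValueAsString': '1200.0'},\n",
"]\n",
"customer_record = [{'FeatureName': 'customer_age', 'ValueAsString': '42'}]\n",
"cols = ['fraud', 'customer_age', 'total_claim_amount']\n",
"row = record_to_csv([claims_record, customer_record], cols)\n",
"print(row)\n",
"```\n",
"\n",
"A `KeyError` here signals a feature the model expects but the record lacks. That is easier to debug than a silently shifted column in the CSV payload."
]
},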

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"sample_policy_id = int(test.sample(1)[\"policy_id\"].iloc[0])\n",

"pull_from_feature_store = False\n",

"\n",

"if pull_from_feature_store:\n",

" customers_response = featurestore_runtime.get_record(\n",

" FeatureGroupName=customers_fg_name, RecordIdentifierValueAsString=str(sample_policy_id)\n",

" )\n",

"\n",

" customer_record = customers_response[\"Record\"]\n",

" customer_df = pd.DataFrame(customer_record).set_index(\"FeatureName\")\n",

"\n",

" claims_response = featurestore_runtime.get_record(\n",

" FeatureGroupName=claims_fg_name, RecordIdentifierValueAsString=str(sample_policy_id)\n",

" )\n",

"\n",

" claims_record = claims_response[\"Record\"]\n",

" claims_df = pd.DataFrame(claims_record).set_index(\"FeatureName\")\n",

"\n",

" blended_df = pd.concat([claims_df, customer_df]).loc[col_order].drop(\"fraud\")\n",

"else:\n",

" customer_claim_df = dataset[dataset[\"policy_id\"] == sample_policy_id].sample(1)\n",

" blended_df = customer_claim_df.loc[:, col_order].drop(\"fraud\", axis=1).T.reset_index()\n",

" blended_df.columns = [\"FeatureName\", \"ValueAsString\"]\n",

"\n",

"data_input = \",\".join([str(x) for x in blended_df[\"ValueAsString\"]])\n",

"data_input"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Make prediction"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"results = predictor.predict(data_input, initial_args={\"ContentType\": \"text/csv\"})\n",

"prediction = json.loads(results)\n",

"print(f\"Probability the claim from policy {int(sample_policy_id)} is fraudulent:\", prediction)"

]

},
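{
"cell_type": "markdown",
"metadata": {},
"source": [
"The endpoint returns a fraud probability, not a label. One way to turn it into a decision is to apply the same 0.5 cutoff that `ModelPredictedLabelConfig(probability_threshold=0.5)` used for the bias analysis. The sketch below assumes the response body is CSV text such as `b'0.12'`, with one probability per row. `to_decision` is a hypothetical helper. The right threshold for production depends on the relative cost of missed fraud versus false alarms.\n",
"\n",
"```python\n",
"def to_decision(body, threshold=0.5):\n",
"    # Decode the raw response and parse one probability per row\n",
"    text = body.decode('utf-8') if isinstance(body, bytes) else body\n",
"    probabilities = [float(tok) for tok in text.replace(',', ' ').split()]\n",
"    # Flag a row as fraud (1) when its probability reaches the threshold\n",
"    return [(p, int(p >= threshold)) for p in probabilities]\n",
"\n",
"\n",
"print(to_decision(b'0.12'))\n",
"print(to_decision(b'0.73,0.31'))\n",
"```\n",
"\n",
"Applied to this notebook, `to_decision(results)` would label the sampled claim."
]
},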

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Notebook CI Test Results\n",

"\n",

"This notebook was tested in multiple regions. The test results are as follows, except for us-west-2 which is shown at the top of the notebook.\n",

"\n",


"\n"

]

}

],

"metadata": {

"availableInstances": [

{

"_defaultOrder": 0,

"_isFastLaunch": true,

"category": "General purpose",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 4,

"name": "ml.t3.medium",

"vcpuNum": 2

},

{

"_defaultOrder": 1,

"_isFastLaunch": false,

"category": "General purpose",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 8,

"name": "ml.t3.large",

"vcpuNum": 2

},

{

"_defaultOrder": 2,

"_isFastLaunch": false,

"category": "General purpose",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 16,

"name": "ml.t3.xlarge",

"vcpuNum": 4

},

{

"_defaultOrder": 3,

"_isFastLaunch": false,

"category": "General purpose",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 32,

"name": "ml.t3.2xlarge",

"vcpuNum": 8

},

{

"_defaultOrder": 4,

"_isFastLaunch": true,

"category": "General purpose",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 8,

"name": "ml.m5.large",

"vcpuNum": 2

},

{

"_defaultOrder": 5,

"_isFastLaunch": false,

"category": "General purpose",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 16,

"name": "ml.m5.xlarge",

"vcpuNum": 4

},

{

"_defaultOrder": 6,

"_isFastLaunch": false,

"category": "General purpose",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 32,

"name": "ml.m5.2xlarge",

"vcpuNum": 8

},

{

"_defaultOrder": 7,

"_isFastLaunch": false,

"category": "General purpose",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 64,

"name": "ml.m5.4xlarge",

"vcpuNum": 16

},

{

"_defaultOrder": 8,

"_isFastLaunch": false,

"category": "General purpose",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 128,

"name": "ml.m5.8xlarge",

"vcpuNum": 32

},

{

"_defaultOrder": 9,

"_isFastLaunch": false,

"category": "General purpose",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 192,

"name": "ml.m5.12xlarge",

"vcpuNum": 48

},

{

"_defaultOrder": 10,

"_isFastLaunch": false,

"category": "General purpose",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 256,

"name": "ml.m5.16xlarge",

"vcpuNum": 64

},

{

"_defaultOrder": 11,

"_isFastLaunch": false,

"category": "General purpose",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 384,

"name": "ml.m5.24xlarge",

"vcpuNum": 96

},

{

"_defaultOrder": 12,

"_isFastLaunch": false,

"category": "General purpose",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 8,

"name": "ml.m5d.large",

"vcpuNum": 2

},

{

"_defaultOrder": 13,

"_isFastLaunch": false,

"category": "General purpose",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 16,

"name": "ml.m5d.xlarge",

"vcpuNum": 4

},

{

"_defaultOrder": 14,

"_isFastLaunch": false,

"category": "General purpose",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 32,

"name": "ml.m5d.2xlarge",

"vcpuNum": 8

},

{

"_defaultOrder": 15,

"_isFastLaunch": false,

"category": "General purpose",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 64,

"name": "ml.m5d.4xlarge",

"vcpuNum": 16

},

{

"_defaultOrder": 16,

"_isFastLaunch": false,

"category": "General purpose",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 128,

"name": "ml.m5d.8xlarge",

"vcpuNum": 32

},

{

"_defaultOrder": 17,

"_isFastLaunch": false,

"category": "General purpose",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 192,

"name": "ml.m5d.12xlarge",

"vcpuNum": 48

},

{

"_defaultOrder": 18,

"_isFastLaunch": false,

"category": "General purpose",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 256,

"name": "ml.m5d.16xlarge",

"vcpuNum": 64

},

{

"_defaultOrder": 19,

"_isFastLaunch": false,

"category": "General purpose",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 384,

"name": "ml.m5d.24xlarge",

"vcpuNum": 96

},

{

"_defaultOrder": 20,

"_isFastLaunch": false,

"category": "General purpose",

"gpuNum": 0,

"hideHardwareSpecs": true,

"memoryGiB": 0,

"name": "ml.geospatial.interactive",

"supportedImageNames": [

"sagemaker-geospatial-v1-0"

],

"vcpuNum": 0

},

{

"_defaultOrder": 21,

"_isFastLaunch": true,

"category": "Compute optimized",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 4,

"name": "ml.c5.large",

"vcpuNum": 2

},

{

"_defaultOrder": 22,

"_isFastLaunch": false,

"category": "Compute optimized",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 8,

"name": "ml.c5.xlarge",

"vcpuNum": 4

},

{

"_defaultOrder": 23,

"_isFastLaunch": false,

"category": "Compute optimized",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 16,

"name": "ml.c5.2xlarge",

"vcpuNum": 8

},

{

"_defaultOrder": 24,

"_isFastLaunch": false,

"category": "Compute optimized",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 32,

"name": "ml.c5.4xlarge",

"vcpuNum": 16

},

{

"_defaultOrder": 25,

"_isFastLaunch": false,

"category": "Compute optimized",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 72,

"name": "ml.c5.9xlarge",

"vcpuNum": 36

},

{

"_defaultOrder": 26,

"_isFastLaunch": false,

"category": "Compute optimized",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 96,

"name": "ml.c5.12xlarge",

"vcpuNum": 48

},

{

"_defaultOrder": 27,

"_isFastLaunch": false,

"category": "Compute optimized",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 144,

"name": "ml.c5.18xlarge",

"vcpuNum": 72

},

{

"_defaultOrder": 28,

"_isFastLaunch": false,

"category": "Compute optimized",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 192,

"name": "ml.c5.24xlarge",

"vcpuNum": 96

},

{

"_defaultOrder": 29,

"_isFastLaunch": true,

"category": "Accelerated computing",

"gpuNum": 1,

"hideHardwareSpecs": false,

"memoryGiB": 16,

"name": "ml.g4dn.xlarge",

"vcpuNum": 4

},

{

"_defaultOrder": 30,

"_isFastLaunch": false,

"category": "Accelerated computing",

"gpuNum": 1,

"hideHardwareSpecs": false,

"memoryGiB": 32,

"name": "ml.g4dn.2xlarge",

"vcpuNum": 8

},

{

"_defaultOrder": 31,

"_isFastLaunch": false,

"category": "Accelerated computing",

"gpuNum": 1,

"hideHardwareSpecs": false,

"memoryGiB": 64,

"name": "ml.g4dn.4xlarge",

"vcpuNum": 16

},

{

"_defaultOrder": 32,

"_isFastLaunch": false,

"category": "Accelerated computing",

"gpuNum": 1,

"hideHardwareSpecs": false,

"memoryGiB": 128,

"name": "ml.g4dn.8xlarge",

"vcpuNum": 32

},

{

"_defaultOrder": 33,

"_isFastLaunch": false,

"category": "Accelerated computing",

"gpuNum": 4,

"hideHardwareSpecs": false,

"memoryGiB": 192,

"name": "ml.g4dn.12xlarge",

"vcpuNum": 48

},

{

"_defaultOrder": 34,

"_isFastLaunch": false,

"category": "Accelerated computing",

"gpuNum": 1,

"hideHardwareSpecs": false,

"memoryGiB": 256,

"name": "ml.g4dn.16xlarge",

"vcpuNum": 64

},

{

"_defaultOrder": 35,

"_isFastLaunch": false,

"category": "Accelerated computing",

"gpuNum": 1,

"hideHardwareSpecs": false,

"memoryGiB": 61,

"name": "ml.p3.2xlarge",

"vcpuNum": 8

},

{

"_defaultOrder": 36,

"_isFastLaunch": false,

"category": "Accelerated computing",

"gpuNum": 4,

"hideHardwareSpecs": false,

"memoryGiB": 244,

"name": "ml.p3.8xlarge",

"vcpuNum": 32

},

{

"_defaultOrder": 37,

"_isFastLaunch": false,

"category": "Accelerated computing",

"gpuNum": 8,

"hideHardwareSpecs": false,

"memoryGiB": 488,

"name": "ml.p3.16xlarge",

"vcpuNum": 64

},

{

"_defaultOrder": 38,

"_isFastLaunch": false,

"category": "Accelerated computing",

"gpuNum": 8,

"hideHardwareSpecs": false,

"memoryGiB": 768,

"name": "ml.p3dn.24xlarge",

"vcpuNum": 96

},

{

"_defaultOrder": 39,

"_isFastLaunch": false,

"category": "Memory Optimized",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 16,

"name": "ml.r5.large",

"vcpuNum": 2

},

{

"_defaultOrder": 40,

"_isFastLaunch": false,

"category": "Memory Optimized",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 32,

"name": "ml.r5.xlarge",

"vcpuNum": 4

},

{

"_defaultOrder": 41,

"_isFastLaunch": false,

"category": "Memory Optimized",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 64,

"name": "ml.r5.2xlarge",

"vcpuNum": 8

},

{

"_defaultOrder": 42,

"_isFastLaunch": false,

"category": "Memory Optimized",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 128,

"name": "ml.r5.4xlarge",

"vcpuNum": 16

},

{

"_defaultOrder": 43,

"_isFastLaunch": false,

"category": "Memory Optimized",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 256,

"name": "ml.r5.8xlarge",

"vcpuNum": 32

},

{

"_defaultOrder": 44,

"_isFastLaunch": false,

"category": "Memory Optimized",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 384,

"name": "ml.r5.12xlarge",

"vcpuNum": 48

},

{

"_defaultOrder": 45,

"_isFastLaunch": false,

"category": "Memory Optimized",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 512,

"name": "ml.r5.16xlarge",

"vcpuNum": 64

},

{

"_defaultOrder": 46,

"_isFastLaunch": false,

"category": "Memory Optimized",

"gpuNum": 0,

"hideHardwareSpecs": false,

"memoryGiB": 768,

"name": "ml.r5.24xlarge",

"vcpuNum": 96

},

{

"_defaultOrder": 47,

"_isFastLaunch": false,

"category": "Accelerated computing",

"gpuNum": 1,

"hideHardwareSpecs": false,

"memoryGiB": 16,

"name": "ml.g5.xlarge",

"vcpuNum": 4

},

{

"_defaultOrder": 48,

"_isFastLaunch": false,

"category": "Accelerated computing",

"gpuNum": 1,

"hideHardwareSpecs": false,

"memoryGiB": 32,

"name": "ml.g5.2xlarge",

"vcpuNum": 8

},

{

"_defaultOrder": 49,

"_isFastLaunch": false,

"category": "Accelerated computing",

"gpuNum": 1,

"hideHardwareSpecs": false,

"memoryGiB": 64,

"name": "ml.g5.4xlarge",

"vcpuNum": 16

},

{

"_defaultOrder": 50,

"_isFastLaunch": false,

"category": "Accelerated computing",

"gpuNum": 1,

"hideHardwareSpecs": false,

"memoryGiB": 128,

"name": "ml.g5.8xlarge",

"vcpuNum": 32

},

{

"_defaultOrder": 51,

"_isFastLaunch": false,

"category": "Accelerated computing",

"gpuNum": 1,

"hideHardwareSpecs": false,

"memoryGiB": 256,

"name": "ml.g5.16xlarge",

"vcpuNum": 64

},

{

"_defaultOrder": 52,

"_isFastLaunch": false,

"category": "Accelerated computing",

"gpuNum": 4,

"hideHardwareSpecs": false,

"memoryGiB": 192,

"name": "ml.g5.12xlarge",

"vcpuNum": 48

},

{

"_defaultOrder": 53,

"_isFastLaunch": false,

"category": "Accelerated computing",

"gpuNum": 4,

"hideHardwareSpecs": false,

"memoryGiB": 384,

"name": "ml.g5.24xlarge",

"vcpuNum": 96

},

{

"_defaultOrder": 54,

"_isFastLaunch": false,

"category": "Accelerated computing",

"gpuNum": 8,

"hideHardwareSpecs": false,

"memoryGiB": 768,

"name": "ml.g5.48xlarge",

"vcpuNum": 192

},

{

"_defaultOrder": 55,

"_isFastLaunch": false,

"category": "Accelerated computing",

"gpuNum": 8,

"hideHardwareSpecs": false,

"memoryGiB": 1152,

"name": "ml.p4d.24xlarge",

"vcpuNum": 96

},

{

"_defaultOrder": 56,

"_isFastLaunch": false,

"category": "Accelerated computing",

"gpuNum": 8,

"hideHardwareSpecs": false,

"memoryGiB": 1152,

"name": "ml.p4de.24xlarge",

"vcpuNum": 96

}

],

"instance_type": "ml.t3.medium",

"kernelspec": {

"display_name": "Python 3 (Data Science 2.0)",

"language": "python",

"name": "python3__SAGEMAKER_INTERNAL__arn:aws:sagemaker:us-east-1:081325390199:image/sagemaker-data-science-38"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.8.13"

}

},

"nbformat": 4,

"nbformat_minor": 4

}