{

"cells": [

{

"cell_type": "markdown",

"metadata": {},

"source": [

" "

]

},

{

"attachments": {},

"cell_type": "markdown",

"metadata": {},

"source": [

"---\n",

"\n",

"This notebook's CI test result for us-west-2 is as follows. CI test results in other regions can be found at the end of the notebook. \n",

"\n",

"\n",

"\n",

"---"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

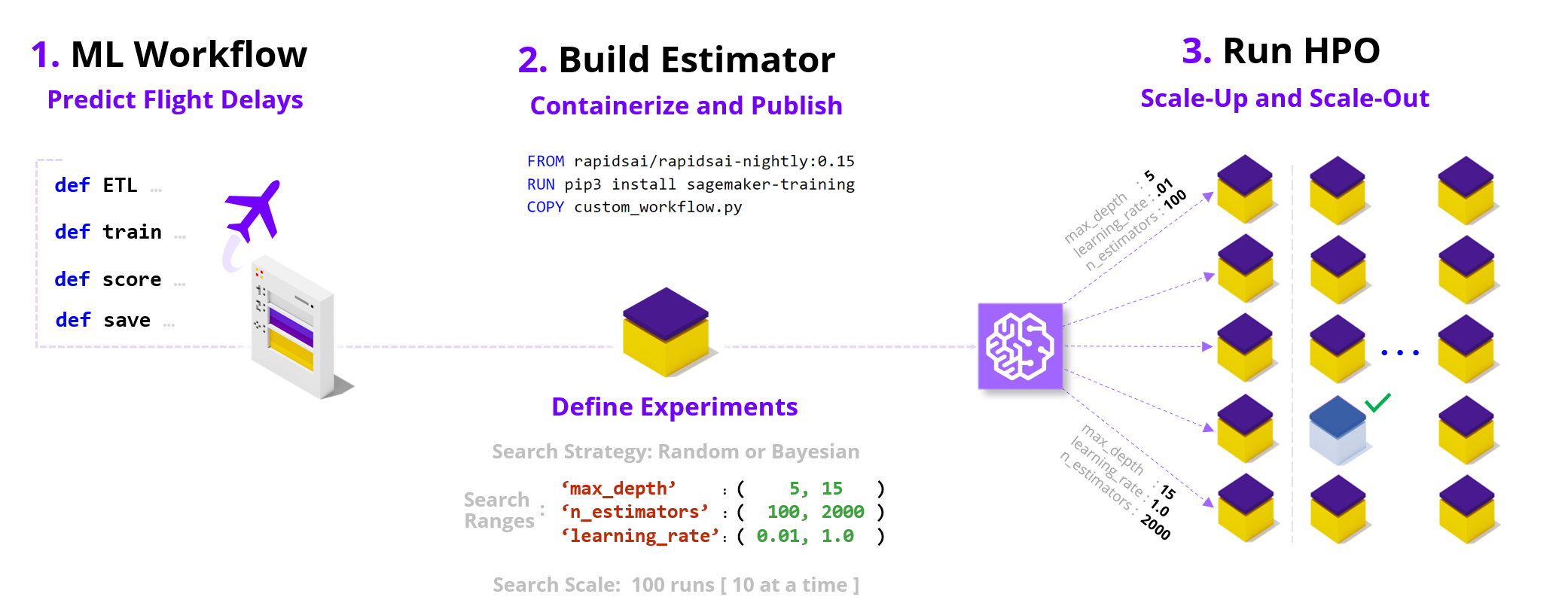

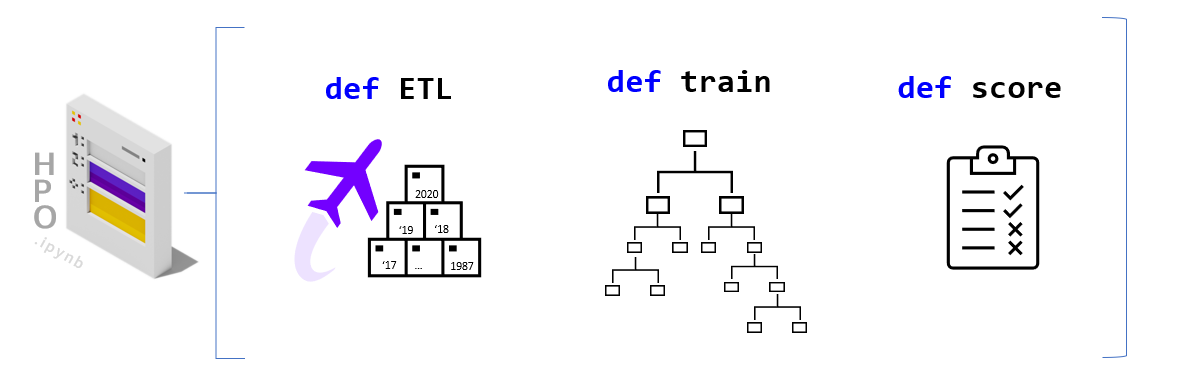

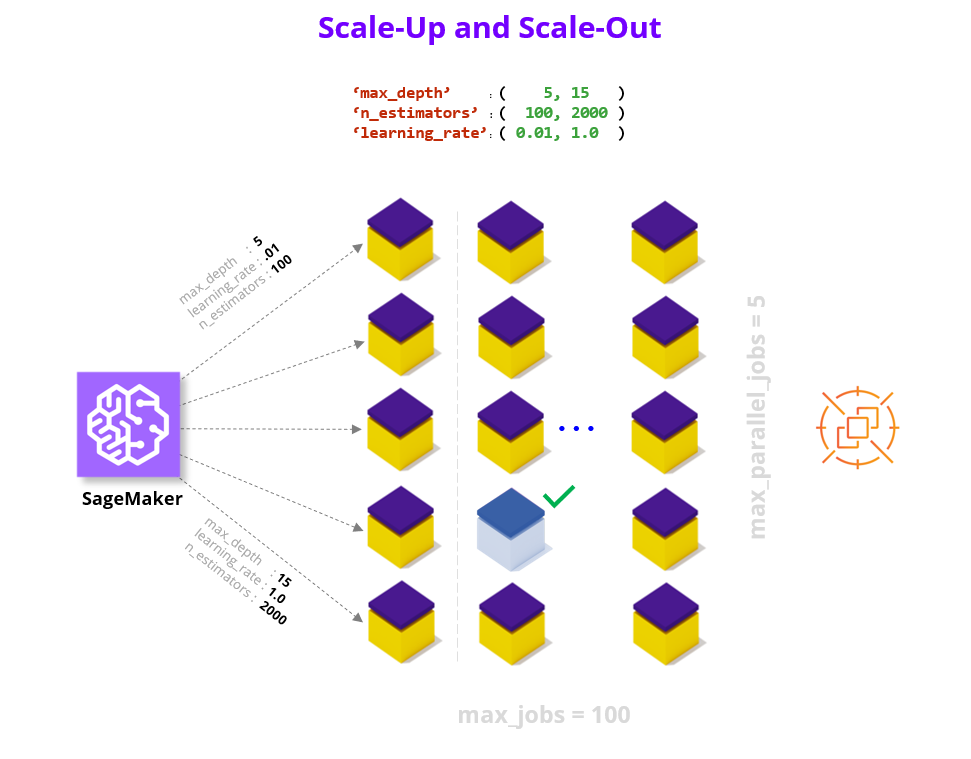

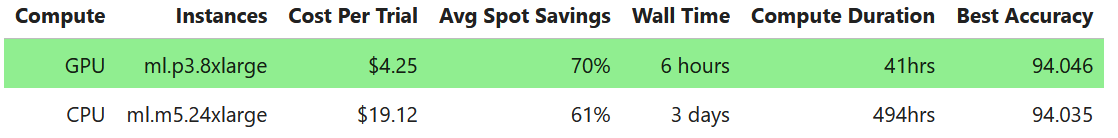

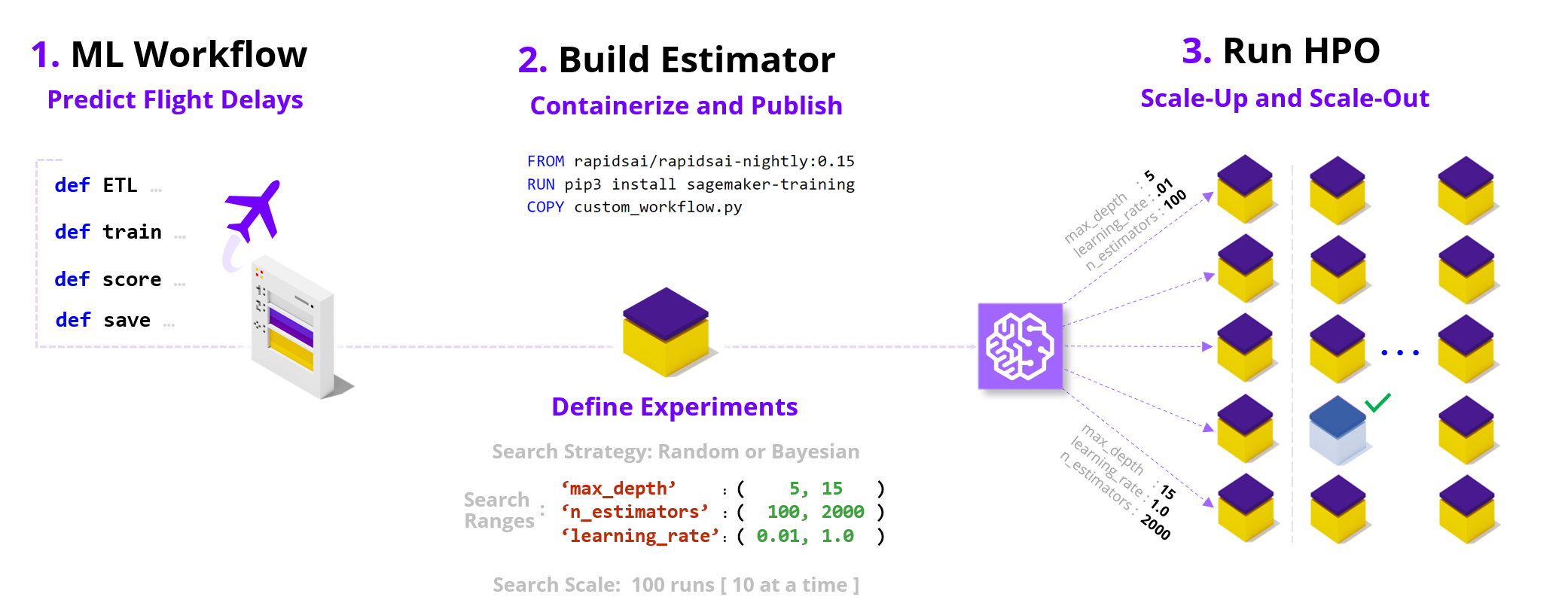

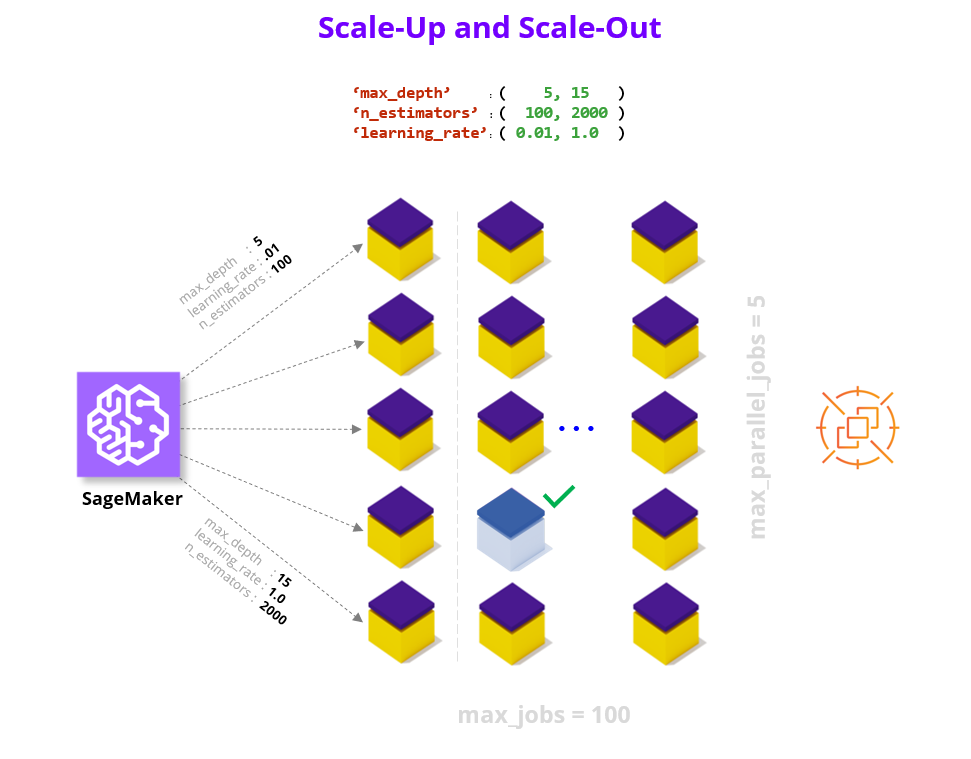

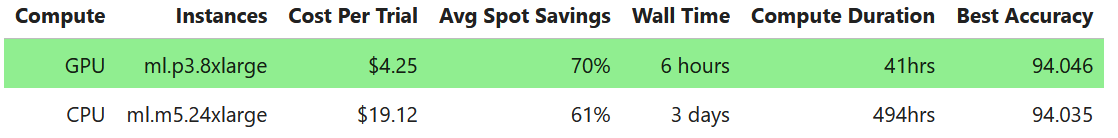

"[Hyperparameter optimization](https://en.wikipedia.org/wiki/Hyperparameter_optimization) (HPO) improves model quality by searching over hyperparameters: values that are not learned during training but instead control the learning process itself (e.g., model size/capacity). This search can significantly boost model quality relative to default settings and non-expert tuning; however, HPO can take a very long time on a non-accelerated platform. In this notebook, we containerize a RAPIDS workflow and run Bring-Your-Own-Container SageMaker HPO to show how we can overcome the computational complexity of model search. \n",

"\n",

"We accelerate HPO in two key ways: \n",

"* by *scaling within a node* (e.g., multi-GPU, where each GPU brings an order of magnitude more cores than a CPU), and \n",

"* by *scaling across nodes* and running parallel trials on cloud instances.\n",

"\n",

"By combining these two forms of scaling, HPO experiments that feel unapproachable and may take multiple days on CPU instances can complete in just hours. For example, we find a **12x** speedup in wall-clock time (6 hours vs. 3+ days) and a **4.5x** reduction in cost when comparing GPU and CPU [EC2 Spot instances](https://docs.aws.amazon.com/sagemaker/latest/dg/model-managed-spot-training.html) on 100 XGBoost HPO trials using 10 parallel workers on 10 years of the Airline Dataset (~63M flights) hosted in an S3 bucket. For additional details, refer to the end of the notebook."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"With all these powerful tools at our disposal, every data scientist should feel empowered to up-level their model before serving it to the world!"

]

},



{

"cell_type": "markdown",

"metadata": {},

"source": [

" "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" **Preamble** "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"To get things rolling, let's make sure we can query our SageMaker execution role and session, as well as our AWS account ID and region."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"import os\n",

"import sagemaker\n",

"from helper_functions import *"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"execution_role = sagemaker.get_execution_role()\n",

"session = sagemaker.Session()\n",

"\n",

"# IPython shell captures: each returns a list of output lines (hence region[0] below)\n",

"account = !(aws sts get-caller-identity --query Account --output text)\n",

"region = !(aws configure get region)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"account, region"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" **Key Choices** "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Let's go ahead and choose the configuration options for our HPO run.\n",

"\n",

"Below are two reference configurations showing small- and large-scale HPO runs (sized in terms of total experiments/compute). \n",

"\n",

"The defaults in this notebook are close to the small HPO configuration, with `max_jobs` set even lower to allow for automated CI testing; you are welcome to scale them up.\n",

"\n",

"> **small HPO**: 1_year, XGBoost, 3 CV folds, singleGPU, max_jobs = 10, max_parallel_jobs = 2\n",

"\n",

"> **large HPO**: 10_year, XGBoost, 10 CV folds, multiGPU, max_jobs = 100, max_parallel_jobs = 10"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" [ Dataset ] "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We offer free hosting for several demo datasets that you can try running HPO with, or alternatively you can bring your own dataset (BYOD). \n",

"\n",

"By default we use the `Airline` dataset, a large public record of US domestic flight logs that we offer in several sizes (1 year, 3 years, and 10 years) in Parquet (compressed columnar storage) format. The machine learning objective with this dataset is to predict whether flights will arrive at their destination more than 15 minutes late ([dataset link](https://www.transtats.bts.gov/DatabaseInfo.asp?DB_ID=120&DB_URL=), additional details in Section 1.1). \n",

"\n",

"As an alternative, we also offer the `NYC Taxi` dataset, which captures yellow cab trip details in New York in January 2020, stored in uncompressed CSV format. The machine learning objective with this dataset is to predict whether a trip had an above-average tip (>$2.20).\n",

"\n",

"We host the demo datasets in public S3 buckets in both the **us-east-1** (N. Virginia) and **us-west-2** (Oregon) regions (i.e., `sagemaker-rapids-hpo-us-east-1` and `sagemaker-rapids-hpo-us-west-2`). If you wish to use the demo datasets, run the SageMaker HPO workflow in one of these two regions, since SageMaker requires the S3 dataset and the compute you rent to be in the same region. \n",

"\n",

"Lastly, if you plan to use your own dataset refer to the BYOD checklist in the Appendix to help integrate into the workflow."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"| dataset | data_bucket | dataset_directory | # samples | storage type | time span |\n",

"|---|---|---|---|---|---|\n",

"| Airline Stats Small | demo | 1_year | 6.3M | Parquet | 2019 |\n",

"| Airline Stats Medium | demo | 3_year | 18M | Parquet | 2017-2019 |\n",

"| Airline Stats Large | demo | 10_year | 63M | Parquet | 2010-2019 |\n",

"| NYC Taxi | demo | NYC_taxi | 6.3M | CSV | January 2020 |\n",

"| Bring Your Own Dataset | custom | custom | custom | Parquet/CSV | custom |"

]

},
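
{

"cell_type": "markdown",

"metadata": {},

"source": [

"The co-location rule and the table above can be captured in a small validation step. The sketch below uses a hypothetical helper, `demo_s3_input` (it is not part of `helper_functions`), that checks the region against the two demo regions and the directory against the table before building the same `s3://sagemaker-rapids-hpo-<region>/<dataset_directory>` path used later in this notebook. It is only an illustration and does not contact S3."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# Hypothetical helper (not part of helper_functions): validate a demo-dataset choice\n",

"DEMO_REGIONS = {\"us-east-1\", \"us-west-2\"}  # regions hosting the public demo buckets\n",

"DEMO_DIRECTORIES = {  # dataset_directory -> storage type, from the table above\n",

"    \"1_year\": \"Parquet\",\n",

"    \"3_year\": \"Parquet\",\n",

"    \"10_year\": \"Parquet\",\n",

"    \"NYC_taxi\": \"CSV\",\n",

"}\n",

"\n",

"\n",

"def demo_s3_input(region: str, dataset_directory: str) -> str:\n",

"    \"\"\"Return the S3 URI of a demo dataset, raising ValueError for unsupported choices.\"\"\"\n",

"    if region not in DEMO_REGIONS:\n",

"        raise ValueError(f\"demo datasets are only hosted in {sorted(DEMO_REGIONS)}, got {region!r}\")\n",

"    if dataset_directory not in DEMO_DIRECTORIES:\n",

"        raise ValueError(f\"unknown demo dataset directory {dataset_directory!r}\")\n",

"    return f\"s3://sagemaker-rapids-hpo-{region}/{dataset_directory}\"\n",

"\n",

"\n",

"print(demo_s3_input(\"us-west-2\", \"3_year\"))  # s3://sagemaker-rapids-hpo-us-west-2/3_year"

]

},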

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# please choose dataset S3 bucket and directory\n",

"data_bucket = \"sagemaker-rapids-hpo-\" + region[0]\n",

"dataset_directory = \"3_year\"  # '1_year', '3_year', '10_year', 'NYC_taxi'\n",

"\n",

"# please choose output bucket for trained model(s)\n",

"model_output_bucket = session.default_bucket()"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"s3_data_input = f\"s3://{data_bucket}/{dataset_directory}\"\n",

"s3_model_output = f\"s3://{model_output_bucket}/trained-models\"\n",

"\n",

"best_hpo_model_local_save_directory = os.getcwd()"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" [ Algorithm ] "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"From an ML/algorithm perspective, we offer [XGBoost](https://xgboost.readthedocs.io/en/latest/#) and [RandomForest](https://docs.rapids.ai/api/cuml/stable/cuml_blogs.html#tree-and-forest-models) tree-based models, which perform well on this kind of structured (tabular) data. You are free to switch between these two algorithms and everything in the example will continue to work."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# please choose learning algorithm\n",

"algorithm_choice = \"XGBoost\"\n",

"\n",

"assert algorithm_choice in [\"XGBoost\", \"RandomForest\"]"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We can also optionally increase robustness via reshuffles of the train-test split (i.e., [cross-validation folds](https://scikit-learn.org/stable/modules/cross_validation.html)). Typical values here are between 3 and 10 folds."

]

},
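
{

"cell_type": "markdown",

"metadata": {},

"source": [

"To make *reshuffles of the train-test split* concrete, here is a pure-Python sketch that builds one split per fold by shuffling the row indices with a different seed each time and holding out a fixed fraction for testing. The function name, the 20% test fraction, and the seeding scheme are illustrative assumptions; the actual splitting lives in the workflow code. Note that this is repeated random splitting, so test sets may overlap across folds, unlike strict k-fold cross-validation where they are disjoint."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"import random\n",

"\n",

"\n",

"def reshuffled_splits(n_rows: int, cv_folds: int, test_fraction: float = 0.2):\n",

"    \"\"\"Yield one (train_idx, test_idx) pair per fold, each from a fresh shuffle.\"\"\"\n",

"    n_test = int(n_rows * test_fraction)\n",

"    for fold in range(cv_folds):\n",

"        indices = list(range(n_rows))\n",

"        random.Random(fold).shuffle(indices)  # a different seed gives a different split per fold\n",

"        yield indices[n_test:], indices[:n_test]\n",

"\n",

"\n",

"for fold, (train_idx, test_idx) in enumerate(reshuffled_splits(n_rows=10, cv_folds=3)):\n",

"    print(fold, len(train_idx), len(test_idx))  # 8 training rows and 2 test rows per fold"

]

},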

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# please choose cross-validation folds\n",

"cv_folds = 3\n",

"\n",

"assert cv_folds >= 1"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" [ ML Workflow Compute Choice ] "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We offer several code variants that unlock increasing amounts of parallelism in the compute workflow. \n",

"\n",

"* `singleCPU` = [pandas](https://pandas.pydata.org/) + [sklearn](https://scikit-learn.org/stable/) (see note below)\n",

"* `multiCPU` = [dask](https://dask.org/) + [pandas](https://pandas.pydata.org/) + [sklearn](https://scikit-learn.org/stable/)\n",

"\n",

"* RAPIDS `singleGPU` = [cudf](https://github.com/rapidsai/cudf) + [cuml](https://github.com/rapidsai/cuml)\n",

"* RAPIDS `multiGPU` = [dask](https://dask.org/) + [cudf](https://github.com/rapidsai/cudf) + [cuml](https://github.com/rapidsai/cuml) \n",

"\n",

"All of these code paths are available in the `code/workflows` directory for your reference. \n",

"\n",

"> **Note:** the `singleCPU` option still leverages multiple cores during model training; however, to unlock full parallelism in every stage of the workflow we use [Dask](https://dask.org/). \n",

"\n"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# please choose code variant\n",

"ml_workflow_choice = \"singleGPU\"\n",

"\n",

"assert ml_workflow_choice in [\"singleCPU\", \"singleGPU\", \"multiCPU\", \"multiGPU\"]"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" [ Search Ranges and Strategy ] \n",

""

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"One of the most important choices when running HPO is the bounds of the hyperparameter search. Below we've set the hyperparameter ranges to allow for interesting variation; you are of course welcome to revise them based on domain knowledge, especially if you plan to plug in your own dataset. \n",

"\n",

"> Note that we support additional algorithm-specific parameters (refer to the `parse_hyper_parameter_inputs` function in `HPOConfig.py`), but for demo purposes we have limited our choice to the three parameters that overlap between the XGBoost and RandomForest algorithms. For more details, see the documentation for [XGBoost parameters](https://xgboost.readthedocs.io/en/latest/parameter.html) and [RandomForest parameters](https://docs.rapids.ai/api/cuml/stable/api.html#random-forest).\n"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# please choose HPO search ranges\n",

"hyperparameter_ranges = {\n",

" \"max_depth\": sagemaker.parameter.IntegerParameter(5, 15),\n",

" \"n_estimators\": sagemaker.parameter.IntegerParameter(100, 500),\n",

" \"max_features\": sagemaker.parameter.ContinuousParameter(0.1, 1.0),\n",

"} # see note above for adding additional parameters"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"if \"XGBoost\" in algorithm_choice:\n",

" # number of trees parameter name difference b/w XGBoost and RandomForest\n",

" hyperparameter_ranges[\"num_boost_round\"] = hyperparameter_ranges.pop(\"n_estimators\")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We can also choose between Random and Bayesian search strategies for picking parameter combinations. \n",

"\n",

"**Random Search**: Chooses a random combination of values from within the ranges for each training job it launches. Because the choice of hyperparameters doesn't depend on previous results, you can run the maximum number of concurrent workers without affecting the performance of the search. \n",

"\n",

"**Bayesian Search**: Makes a guess about which hyperparameter combinations are likely to give the best results. After testing a first set of hyperparameter values, the tuner uses regression to choose the next set of values to test. Because each new guess builds on completed results, a very high number of parallel jobs gives the Bayesian strategy less information per decision."

]

},
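
{

"cell_type": "markdown",

"metadata": {},

"source": [

"The Random strategy can be illustrated with a few lines of standard-library Python. The sketch below mirrors the ranges defined earlier (integer bounds for `max_depth` and the XGBoost tree count `num_boost_round`, a continuous range for `max_features`) and draws each trial independently and uniformly. It is not SageMaker's own sampler, which is not reproduced here; it only shows why Random trials can all run in parallel: no draw depends on an earlier result."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"import random\n",

"\n",

"# (kind, low, high) mirrors the IntegerParameter / ContinuousParameter ranges above\n",

"search_space = {\n",

"    \"max_depth\": (\"int\", 5, 15),\n",

"    \"num_boost_round\": (\"int\", 100, 500),\n",

"    \"max_features\": (\"float\", 0.1, 1.0),\n",

"}\n",

"\n",

"\n",

"def sample_random_trial(space: dict, rng: random.Random) -> dict:\n",

"    \"\"\"Draw one combination uniformly at random, independent of earlier trials.\"\"\"\n",

"    trial = {}\n",

"    for name, (kind, low, high) in space.items():\n",

"        # randint includes both bounds; uniform returns a float in [low, high]\n",

"        trial[name] = rng.randint(low, high) if kind == \"int\" else rng.uniform(low, high)\n",

"    return trial\n",

"\n",

"\n",

"rng = random.Random(42)\n",

"for _ in range(3):\n",

"    print(sample_random_trial(search_space, rng))"

]

},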

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# please choose HPO search strategy\n",

"search_strategy = \"Random\"\n",

"\n",

"assert search_strategy in [\"Random\", \"Bayesian\"]"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" [ Experiment Scale ] "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We also need to decide how many total experiments to run and how many should run in parallel. Below we use a very conservative maximum number of jobs so that you don't accidentally spawn large computations when starting out; for meaningful HPO searches, however, this number should be much higher (e.g., in our experiments we often run 100 `max_jobs`). Note that you may need to request a [quota limit increase](https://docs.aws.amazon.com/general/latest/gr/sagemaker.html) for additional `max_parallel_jobs` parallel workers. "

]

},
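
{

"cell_type": "markdown",

"metadata": {},

"source": [

"A back-of-the-envelope sketch of how `max_jobs` and `max_parallel_jobs` interact: if every trial took the same time, the tuning job would run in about `ceil(max_jobs / max_parallel_jobs)` sequential waves. The 0.5-hour trial duration is a placeholder rather than a measurement, and real trials vary in length, so treat the result as a rough estimate."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"import math\n",

"\n",

"\n",

"def estimate_wall_clock_hours(max_jobs: int, max_parallel_jobs: int, hours_per_trial: float) -> float:\n",

"    \"\"\"Approximate wall-clock time when equal-length trials run in waves of max_parallel_jobs.\"\"\"\n",

"    waves = math.ceil(max_jobs / max_parallel_jobs)\n",

"    return waves * hours_per_trial\n",

"\n",

"\n",

"# small vs. large reference configurations, with a placeholder of 0.5 hours per trial\n",

"print(estimate_wall_clock_hours(10, 2, 0.5))  # 5 waves -> 2.5\n",

"print(estimate_wall_clock_hours(100, 10, 0.5))  # 10 waves -> 5.0"

]

},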

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# please choose total number of HPO experiments (we have set this number very low to allow for automated CI testing)\n",

"max_jobs = 2"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# please choose number of experiments that can run in parallel\n",

"max_parallel_jobs = 2"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Let's also set the maximum duration of an individual job to 24 hours so we don't have runaway compute jobs taking too long."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"max_duration_of_experiment_seconds = 60 * 60 * 24"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" [ Compute Platform ] "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Based on the dataset size and compute choice, we will try to recommend an instance type; you are of course welcome to select alternate configurations. \n",

"> e.g., For the 10_year dataset option, we suggest ml.p3.8xlarge instances (4 GPUs) and ml.m5.24xlarge CPU instances (we will need upwards of 200GB of CPU RAM during model training)."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# we will recommend a compute instance type, feel free to modify\n",

"instance_type = recommend_instance_type(ml_workflow_choice, dataset_directory)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"In addition to choosing our instance type, we can also enable significant savings by leveraging [AWS EC2 Spot Instances](https://aws.amazon.com/ec2/spot/).\n",

"\n",

"We **highly recommend** that you set this flag to `True`, as it typically leads to 60-70% cost savings. Note, however, that you may need to request a [quota limit increase](https://docs.aws.amazon.com/general/latest/gr/sagemaker.html) to enable Spot instances in SageMaker.\n"

]

},
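
{

"cell_type": "markdown",

"metadata": {},

"source": [

"To reason about the savings, note that the total instance-hours of an HPO run depend on the number of trials and their duration, not on how many run in parallel. The sketch below assumes one instance per trial and applies a flat Spot discount; the $10/hour price and 0.5-hour trials are hypothetical placeholders, so check current SageMaker pricing for your instance type and region. Spot interruptions can also lengthen jobs, which this estimate ignores."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def estimate_hpo_cost(max_jobs: int, hours_per_trial: float, hourly_price: float, spot_discount: float = 0.0) -> float:\n",

"    \"\"\"Instance cost of an HPO run; spot_discount=0.65 means 65% cheaper than on-demand.\"\"\"\n",

"    instance_hours = max_jobs * hours_per_trial  # independent of max_parallel_jobs\n",

"    return instance_hours * hourly_price * (1.0 - spot_discount)\n",

"\n",

"\n",

"# placeholder inputs: 100 trials of 0.5 h each on a hypothetical $10/hour instance\n",

"print(estimate_hpo_cost(100, 0.5, 10.0))  # 500.0 on-demand\n",

"print(round(estimate_hpo_cost(100, 0.5, 10.0, spot_discount=0.65), 2))  # 175.0 with Spot"

]

},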

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# please choose whether spot instances should be used\n",

"use_spot_instances_flag = True"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" **Validate** "

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"summarize_choices(\n",

" s3_data_input,\n",

" s3_model_output,\n",

" ml_workflow_choice,\n",

" algorithm_choice,\n",

" cv_folds,\n",

" instance_type,\n",

" use_spot_instances_flag,\n",

" search_strategy,\n",

" max_jobs,\n",

" max_parallel_jobs,\n",

" max_duration_of_experiment_seconds,\n",

")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" **1. ML Workflow** "

]

},



{

"cell_type": "markdown",

"metadata": {},

"source": [

" "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" 1.1 - Dataset \n",

""

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

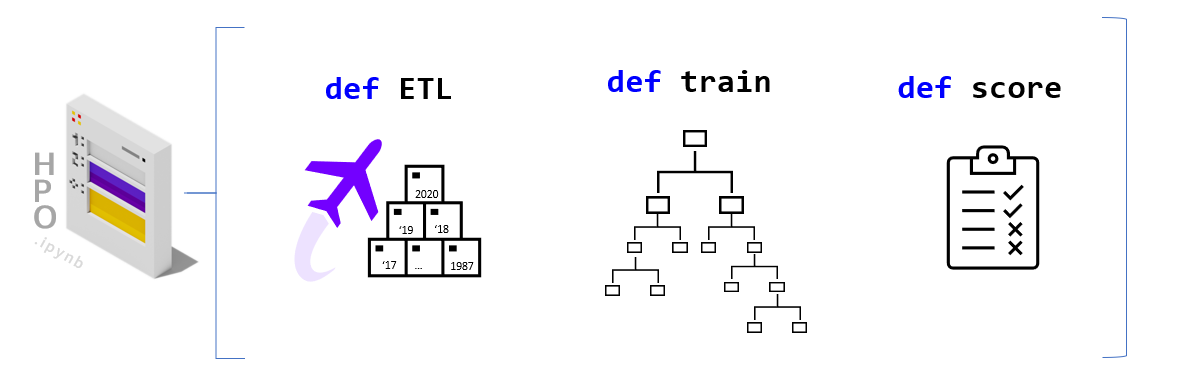

"The default settings for this demo are built to use the Airline dataset (Carrier On-Time Performance 1987-2020, available from the [Bureau of Transportation Statistics](https://transtats.bts.gov/Tables.asp?DB_ID=120&DB_Name=Airline%20On-Time%20Performance%20Data&DB_Short_Name=On-Time#)). Below are some additional details about this dataset; we plan to offer a companion notebook that does a deep dive into the data science behind it. Note that if you are using an alternate dataset (e.g., NYC Taxi or BYOData), these details are not relevant.\n",

"\n",

"The public dataset contains logs/features about flights in the United States (17 airlines) including:\n",

"\n",

"* Locations and distance ( `Origin`, `Dest`, `Distance` )\n",

"* Airline / carrier ( `Reporting_Airline` )\n",

"* Scheduled departure and arrival times ( `CRSDepTime` and `CRSArrTime` )\n",

"* Actual departure and arrival times ( `DepTime` and `ArrTime` )\n",

"* Difference between scheduled & actual times ( `ArrDelay` and `DepDelay` )\n",

"* Binary-encoded late-arrival flag, our target variable ( `ArrDelay15` )\n",

"\n",

"Using these features, we will build a classifier that predicts, as a flight prepares to depart, whether it will arrive more than 15 minutes late."
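To make the target concrete, the sketch below derives a binary late-arrival label from arrival delays in minutes, following the "more than 15 minutes late" definition above. The function name is hypothetical. Note that the BTS-provided `ArrDel15` indicator is defined as a delay of 15 minutes *or more*, so it is worth checking which threshold convention your copy of the data uses. Leaving rows with a missing delay (e.g., cancelled or diverted flights) unlabeled is an assumption of this sketch.

```python
from typing import List, Optional


def late_arrival_labels(
    arr_delays: List[Optional[float]],
    threshold_minutes: float = 15.0,
    inclusive: bool = False,
) -> List[Optional[int]]:
    """Map arrival delays (minutes) to 0/1 labels (hypothetical helper)."""
    labels: List[Optional[int]] = []
    for delay in arr_delays:
        if delay is None:
            labels.append(None)  # unknown delay: leave unlabeled
        elif inclusive:
            labels.append(int(delay >= threshold_minutes))  # BTS-style "15 or more"
        else:
            labels.append(int(delay > threshold_minutes))  # "more than 15 minutes"
    return labels


print(late_arrival_labels([-5.0, 15.0, 16.0, None]))  # [0, 0, 1, None]
print(late_arrival_labels([15.0], inclusive=True))  # [1]
```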

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" 1.2 - Python ML Workflow "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

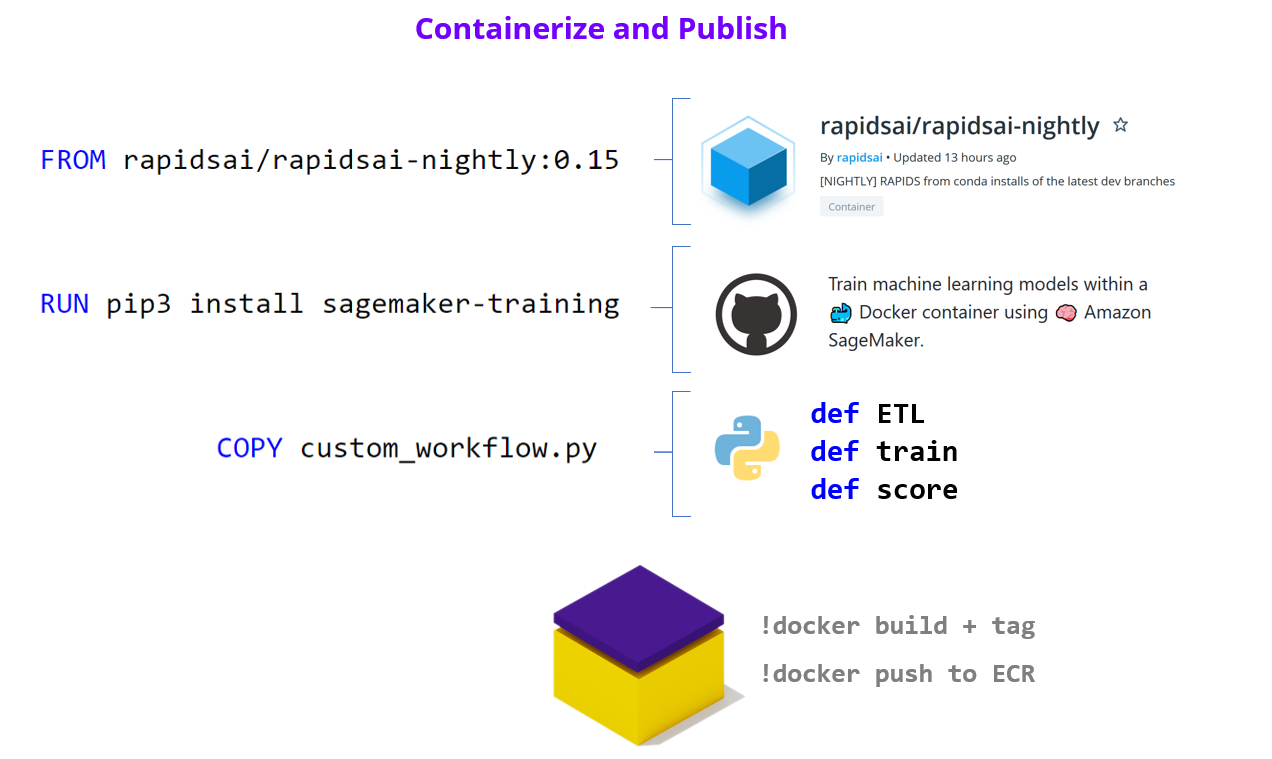

"To build a RAPIDS-enabled SageMaker HPO experiment, we first need to build a [SageMaker Estimator](https://sagemaker.readthedocs.io/en/stable/api/training/estimators.html). An Estimator is built around a container image that captures all the software needed to run an HPO experiment. The container is augmented with entrypoint code that is triggered at runtime by each worker. The entrypoint code enables us to write custom models and hook them up to data."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"To work with SageMaker HPO, the entrypoint logic should parse hyperparameters (supplied by AWS SageMaker), load and split data, build and train a model, score/evaluate the trained model, and emit an output representing the final score for the given hyperparameter setting. We've already built multiple variations of this code.\n",

"\n",

"If you would like to add your own custom model logic, feel free to modify **train.py** and/or the specific workflow files in the `code/workflows` directory. You are also welcome to uncomment the cells below to load and review the code."
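The contract described above can be sketched in a few lines. This is not the notebook's `train.py`; it is a minimal, hypothetical entrypoint that reads hyperparameters from the JSON file SageMaker places in a training container (`/opt/ml/input/config/hyperparameters.json`, where every value is stored as a string), runs a placeholder in place of real training, and prints the score in the `final-score: <value>;` format that the metric regex in section 3.1 looks for. The hyperparameter names `max_depth` and `learning_rate` and the placeholder scoring formula are assumptions.

```python
import json
from pathlib import Path

DEFAULT_CONFIG = Path("/opt/ml/input/config/hyperparameters.json")

# hypothetical defaults, used when SageMaker HPO supplies no value
DEFAULTS = {"max_depth": 6, "learning_rate": 0.1}


def load_hyperparameters(config_path: Path = DEFAULT_CONFIG) -> dict:
    """Merge defaults with the values SageMaker wrote to disk (stored as strings)."""
    params = dict(DEFAULTS)
    if config_path.exists():
        raw = json.loads(config_path.read_text())
        if "max_depth" in raw:
            params["max_depth"] = int(raw["max_depth"])
        if "learning_rate" in raw:
            params["learning_rate"] = float(raw["learning_rate"])
    return params


def train_and_score(params: dict) -> float:
    """Placeholder for: load/split data, fit the model, evaluate on held-out data."""
    # a real workflow would return test-set accuracy here
    return round(1.0 / (1.0 + params["learning_rate"] * params["max_depth"]), 4)


def main(config_path: Path = DEFAULT_CONFIG) -> float:
    params = load_hyperparameters(config_path)
    score = train_and_score(params)
    # SageMaker HPO scrapes this line from the job logs via the metric regex
    print(f"final-score: {score};")
    return score


if __name__ == "__main__":
    main()
```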

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"First, let's switch our working directory to the location of the Estimator entrypoint and library code."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": false,

"jupyter": {

"outputs_hidden": false

}

},

"outputs": [],

"source": [

"%cd code"

]

},

{

"cell_type": "raw",

"metadata": {

"jupyter": {

"outputs_hidden": false

}

},

"source": [

"aws/code\n",

"\u251c\u2500\u2500 Dockerfile\n",

"\u251c\u2500\u2500 entrypoint.sh\n",

"\u251c\u2500\u2500 HPOConfig.py\n",

"\u251c\u2500\u2500 HPODatasets.py\n",

"\u251c\u2500\u2500 MLWorkflow.py\n",

"\u251c\u2500\u2500 serve.py\n",

"\u251c\u2500\u2500 train.py\n",

"\u2514\u2500\u2500 workflows\n",

" \u251c\u2500\u2500 MLWorkflowMultiCPU.py\n",

" \u251c\u2500\u2500 MLWorkflowMultiGPU.py\n",

" \u251c\u2500\u2500 MLWorkflowSingleCPU.py\n",

" \u2514\u2500\u2500 MLWorkflowSingleGPU.py"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# %load train.py"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# %load workflows/MLWorkflowSingleGPU.py"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" **2. Build Estimator** "

]

},


{

"cell_type": "markdown",

"metadata": {},

"source": [

" "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

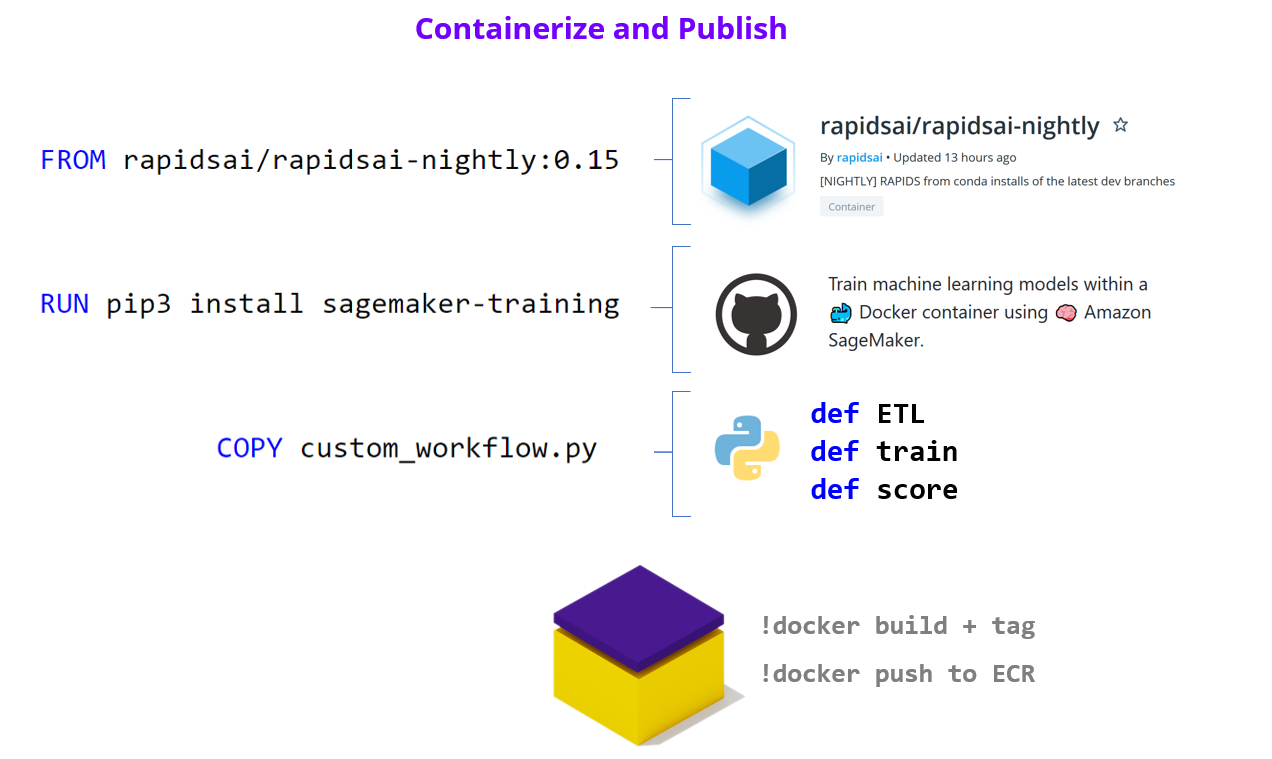

"As we've already mentioned, the SageMaker Estimator represents the containerized software stack that AWS SageMaker will replicate to each worker node.\n",

"\n",

"The first step in building our Estimator is to augment a RAPIDS container with our ML Workflow code from above and push this image to Amazon Elastic Container Registry (ECR) so it is available to SageMaker.\n"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" 2.1 - Containerize and Push to ECR "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Now let's turn to building our container so that it can integrate with the AWS SageMaker HPO API."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Our container can be built on top of either the latest RAPIDS nightly image or the RAPIDS stable image as its starting layer.\n"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"rapids_stable = \"rapidsai/rapidsai:21.06-cuda11.0-base-ubuntu18.04-py3.7\"\n",

"rapids_nightly = \"rapidsai/rapidsai-nightly:21.08-cuda11.0-base-ubuntu18.04-py3.7\"\n",

"\n",

"rapids_base_container = rapids_stable\n",

"assert rapids_base_container in [rapids_stable, rapids_nightly]"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Let's also decide on the full name of our container."
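The naming logic in the next two cells can be captured in a small helper. This is a hypothetical sketch, not part of the notebook's code. It builds the `<account>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>` URI and reuses the base image's tag, splitting on the last `:` so that a registry host with a port (e.g., `localhost:5000/...`) is not mistaken for a tag. The account ID in the example is a placeholder.

```python
def ecr_image_uri(account_id: str, region: str, repository: str, base_image: str) -> str:
    """Build an ECR image URI whose tag matches the base image's tag (hypothetical helper)."""
    _, sep, tag = base_image.rpartition(":")
    if not sep or "/" in tag:
        # no explicit tag; a ':' here would belong to a registry port, so use Docker's default
        tag = "latest"
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


base = "rapidsai/rapidsai:21.06-cuda11.0-base-ubuntu18.04-py3.7"
print(ecr_image_uri("123456789012", "us-west-2", "cloud-ml-sagemaker", base))
# 123456789012.dkr.ecr.us-west-2.amazonaws.com/cloud-ml-sagemaker:21.06-cuda11.0-base-ubuntu18.04-py3.7
```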

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"image_base = \"cloud-ml-sagemaker\"\n",

"image_tag = rapids_base_container.split(\":\")[1]"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"ecr_fullname = f\"{account[0]}.dkr.ecr.{region[0]}.amazonaws.com/{image_base}:{image_tag}\""

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"ecr_fullname"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" 2.1.1 - Write Dockerfile "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We write the Dockerfile to disk, and a few cells later we execute the `docker build` command.\n"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Let's now write our selected RAPIDS image layer as the first FROM statement in the Dockerfile."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"with open(\"Dockerfile\", \"w\") as dockerfile:\n",

" dockerfile.writelines(\n",

" f\"FROM {rapids_base_container} \\n\\n\"\n",

" f'ENV DATASET_DIRECTORY=\"{dataset_directory}\"\\n'\n",

" f'ENV ALGORITHM_CHOICE=\"{algorithm_choice}\"\\n'\n",

" f'ENV ML_WORKFLOW_CHOICE=\"{ml_workflow_choice}\"\\n'\n",

" f'ENV CV_FOLDS=\"{cv_folds}\"\\n'\n",

" )"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Next, let's append the remaining pieces of the Dockerfile, namely adding the sagemaker-training-toolkit, flask, and dask-ml, and copying our Python code."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"%%writefile -a Dockerfile\n",

"\n",

"# ensure printed output/log-messages retain correct order\n",

"ENV PYTHONUNBUFFERED=True\n",

"\n",

"# delete expired nvidia keys and fetch new ones\n",

"RUN apt-key del 7fa2af80\n",

"RUN rm /etc/apt/sources.list.d/cuda.list\n",

"RUN rm /etc/apt/sources.list.d/nvidia-ml.list\n",

"RUN wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1804/x86_64/cuda-keyring_1.0-1_all.deb && dpkg -i cuda-keyring_1.0-1_all.deb \n",

"\n",

"# add sagemaker-training-toolkit [ requires build tools ], flask [ serving ], and dask-ml\n",

"RUN apt-get update && apt-get install -y --no-install-recommends build-essential \\ \n",

" && source activate rapids && pip3 install sagemaker-training dask-ml flask\n",

"\n",

"# path where SageMaker looks for code when container runs in the cloud\n",

"ENV CLOUD_PATH \"/opt/ml/code\"\n",

"\n",

"# copy our latest [local] code into the container \n",

"COPY . $CLOUD_PATH\n",

"\n",

"# make the entrypoint script executable\n",

"RUN chmod +x $CLOUD_PATH/entrypoint.sh\n",

"\n",

"WORKDIR $CLOUD_PATH\n",

"ENTRYPOINT [\"./entrypoint.sh\"]"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Lastly, let's ensure that our Dockerfile correctly captured our base image selection."
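The notebook's own `validate_dockerfile` helper is defined elsewhere, and its implementation is not shown here. As an illustration only, a check of this kind might read the first `FROM` instruction and compare it with the selected base image, as in the hypothetical functions below.

```python
from pathlib import Path


def first_from_image(dockerfile_text: str) -> str:
    """Return the image named by the first FROM instruction (hypothetical helper)."""
    for line in dockerfile_text.splitlines():
        tokens = line.split()
        if tokens and tokens[0].upper() == "FROM":
            # skip flags such as --platform=...; the image reference follows them
            images = [t for t in tokens[1:] if not t.startswith("--")]
            if images:
                return images[0]
    raise ValueError("no FROM instruction with an image was found")


def check_base_image(path: str, expected_image: str) -> None:
    """Raise ValueError if the Dockerfile at `path` starts from a different image."""
    actual = first_from_image(Path(path).read_text())
    if actual != expected_image:
        raise ValueError(f"Dockerfile uses {actual!r}, expected {expected_image!r}")
```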

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"validate_dockerfile(rapids_base_container)\n",

"!cat Dockerfile"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" 2.1.2 - Build and Tag "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The build step will be dominated by the download of the RAPIDS image (base layer). If the base image has already been downloaded, the build should take less than 1 minute."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"!docker pull $rapids_base_container"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"%%time\n",

"!docker build . -t $ecr_fullname -f Dockerfile"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" 2.1.3 - Publish to Elastic Container Registry (ECR) \n",

"\n",

"Now that we've built and tagged our container, it's time to push it to Amazon's container registry (ECR). Once in ECR, AWS SageMaker will be able to leverage our image to build Estimators and run experiments.\n"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Docker Login to ECR"
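Note that `aws ecr get-login` was removed in AWS CLI v2 in favor of `aws ecr get-login-password`. As an alternative to the CLI, ECR credentials can also be fetched with boto3's `get_authorization_token()`, whose `authorizationToken` is a base64-encoded `AWS:<password>` string. The sketch below only decodes such a response; the function name is hypothetical, and the usage lines are left as comments because they require AWS credentials.

```python
import base64
from typing import Tuple


def decode_ecr_credentials(auth_response: dict) -> Tuple[str, str, str]:
    """Extract (username, password, registry) from an ECR get_authorization_token response."""
    data = auth_response["authorizationData"][0]
    token = base64.b64decode(data["authorizationToken"]).decode("utf-8")
    username, _, password = token.partition(":")
    return username, password, data["proxyEndpoint"]


# Usage with boto3 (requires AWS credentials):
#   import boto3
#   response = boto3.client("ecr", region_name="us-west-2").get_authorization_token()
#   user, password, registry = decode_ecr_credentials(response)
#   # then pipe `password` to: docker login --username <user> --password-stdin <registry>
```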

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"ecr_registry = f\"{account[0]}.dkr.ecr.{region[0]}.amazonaws.com\""

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"!aws ecr get-login-password --region {region[0]} | docker login --username AWS --password-stdin {ecr_registry}"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Create ECR repository [ if it doesn't already exist]"
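The cell below detects a missing repository by checking whether the first line of the CLI's captured output is empty, which depends on how the error message happens to be printed. A more explicit check with the boto3 ECR client is sketched here: it catches the client's `RepositoryNotFoundException` and creates the repository only in that case. The function name is hypothetical, and it takes the client as an argument so it can be exercised without AWS access.

```python
def ensure_ecr_repository(ecr_client, name: str) -> str:
    """Return the repository URI, creating the repository first if it does not exist."""
    try:
        response = ecr_client.describe_repositories(repositoryNames=[name])
        return response["repositories"][0]["repositoryUri"]
    except ecr_client.exceptions.RepositoryNotFoundException:
        response = ecr_client.create_repository(repositoryName=name)
        return response["repository"]["repositoryUri"]


# Usage (requires AWS credentials):
#   import boto3
#   uri = ensure_ecr_repository(boto3.client("ecr"), "cloud-ml-sagemaker")
```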

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"repository_query = !(aws ecr describe-repositories --repository-names $image_base)\n",

"if repository_query[0] == '':\n",

" !(aws ecr create-repository --repository-name $image_base)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Let's now push the container to ECR.\n",

"> Note: the first push to ECR may take some time (hopefully less than 10 minutes)."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"!docker push $ecr_fullname"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" 2.2 - Create Estimator "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Having built our container (with our custom logic) and pushed it to ECR, we can finally combine all of our efforts into an Estimator instance."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# 'volume_size' - EBS volume size in GB, default = 30\n",

"estimator_params = {\n",

" \"image_uri\": ecr_fullname,\n",

" \"role\": execution_role,\n",

" \"instance_type\": instance_type,\n",

" \"instance_count\": 1,\n",

" \"input_mode\": \"File\",\n",

" \"output_path\": s3_model_output,\n",

" \"use_spot_instances\": use_spot_instances_flag,\n",

" \"max_run\": max_duration_of_experiment_seconds, # 24 hours\n",

" \"sagemaker_session\": session,\n",

"}\n",

"\n",

"if use_spot_instances_flag:\n",

" estimator_params.update({\"max_wait\": max_duration_of_experiment_seconds + 1})"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"estimator = sagemaker.estimator.Estimator(**estimator_params)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" 2.3 - Test Estimator "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Now we are ready to test by asking SageMaker to run the BYOContainer logic inside our Estimator. This is a useful step if you've made changes to your custom logic and are interested in making sure everything works before launching a large HPO search. \n",

"\n",

"> Note: This verification step will use the default hyperparameter values declared in our custom train code, as SageMaker HPO will not be orchestrating a search for this single run."
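SageMaker validates job names: they may contain only letters, digits, and hyphens, must start and end with a letter or digit, and are limited to 63 characters for training jobs and 32 characters for hyperparameter tuning jobs. The notebook builds names with its own `new_job_name_from_config` helper (defined elsewhere, implementation not shown). The hypothetical function below sketches one way an arbitrary string could be sanitized and truncated to satisfy these rules; the example input is made up.

```python
import re

TRAINING_JOB_MAX_LEN = 63
TUNING_JOB_MAX_LEN = 32


def sanitize_job_name(raw: str, max_len: int = TRAINING_JOB_MAX_LEN) -> str:
    """Map a string to a name matching ^[a-zA-Z0-9](-*[a-zA-Z0-9])*$ (hypothetical helper)."""
    # replace each run of disallowed characters with a single hyphen
    name = re.sub(r"[^a-zA-Z0-9-]+", "-", raw)
    # truncate, making sure the name neither starts nor ends with a hyphen
    name = name.strip("-")[:max_len].rstrip("-")
    if not name:
        raise ValueError(f"cannot build a job name from {raw!r}")
    # lowercase, as the notebook does with job_name.lower()
    return name.lower()


print(sanitize_job_name("air_mGPU_XGB_cv3_ml.p3.8xlarge", TUNING_JOB_MAX_LEN))
# air-mgpu-xgb-cv3-ml-p3-8xlarge
```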

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"summarize_choices(\n",

" s3_data_input,\n",

" s3_model_output,\n",

" ml_workflow_choice,\n",

" algorithm_choice,\n",

" cv_folds,\n",

" instance_type,\n",

" use_spot_instances_flag,\n",

" search_strategy,\n",

" max_jobs,\n",

" max_parallel_jobs,\n",

" max_duration_of_experiment_seconds,\n",

")"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"job_name = new_job_name_from_config(\n",

" dataset_directory, region, ml_workflow_choice, algorithm_choice, cv_folds, instance_type\n",

")"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"estimator.fit(inputs=s3_data_input, job_name=job_name.lower())"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" **3. Run HPO** "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"With a working SageMaker Estimator in hand, the hardest part is behind us. In the key choices section we already defined our search strategy and hyperparameter ranges, so all that remains is to choose a metric to evaluate performance on. For more documentation check out the AWS SageMaker [Hyperparameter Tuner documentation](https://sagemaker.readthedocs.io/en/stable/tuner.html)."

]

},


{

"cell_type": "markdown",

"metadata": {},

"source": [

" "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" 3.1 - Define Metric "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We focus on a single metric, which we call 'final-score', that captures the accuracy of our model on test data unseen during training. You are of course welcome to add additional metrics; see the [AWS SageMaker documentation on Metrics](https://docs.aws.amazon.com/sagemaker/latest/dg/automatic-model-tuning-define-metrics.html). When defining a metric, we provide a regular expression (i.e., a string-parsing rule) to extract the key metric from the output of each Estimator/worker."
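To see what the metric's regular expression actually captures, the sketch below applies it to a sample log line of the kind an entrypoint might print (the log text is made up for illustration). Because `(.*)` is greedy, it captures everything up to the *last* semicolon on the line, which would not parse as a number if other text followed the score. The numeric-only pattern shown is one possible alternative, not the notebook's choice.

```python
import re
from typing import Optional

metric_regex = r"final-score: (.*);"  # pattern used by the notebook
# hypothetical stricter alternative: capture only a (possibly signed, possibly exponent) number
strict_regex = r"final-score: ([-+]?[0-9]*\.?[0-9]+(?:[eE][-+]?[0-9]+)?);"


def extract_metric(pattern: str, log_line: str) -> Optional[str]:
    """Return the first capture group of `pattern` in `log_line`, or None."""
    match = re.search(pattern, log_line)
    return match.group(1) if match else None


line = "fold 3 done; final-score: 0.8712; elapsed: 41.2s;"
print(extract_metric(metric_regex, line))  # 0.8712; elapsed: 41.2s
print(extract_metric(strict_regex, line))  # 0.8712
```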

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"metric_definitions = [{\"Name\": \"final-score\", \"Regex\": \"final-score: (.*);\"}]"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"objective_metric_name = \"final-score\""

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" 3.2 - Define Tuner "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Finally, we combine all of the elements we've built so far into a `HyperparameterTuner` declaration."
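The `max_jobs` and `max_parallel_jobs` arguments bound how much work runs at once. A back-of-the-envelope estimate of wall-clock time is the number of sequential "waves" of trials multiplied by the typical trial duration, which the hypothetical helper below encodes. It ignores instance start-up time, Spot interruptions, and variation between trials, so treat it as a rough estimate rather than a prediction; the 36-minute trial time in the example is made up.

```python
import math


def estimate_hpo_wall_clock_hours(max_jobs: int, max_parallel_jobs: int, minutes_per_trial: float) -> float:
    """Idealized estimate: trials run in back-to-back waves of max_parallel_jobs."""
    if max_jobs < 1 or max_parallel_jobs < 1:
        raise ValueError("max_jobs and max_parallel_jobs must be >= 1")
    waves = math.ceil(max_jobs / max_parallel_jobs)
    return waves * minutes_per_trial / 60.0


# e.g., 100 trials on 10 parallel workers at a hypothetical 36 minutes per trial
print(estimate_hpo_wall_clock_hours(100, 10, 36))  # 6.0
```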

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"hpo = sagemaker.tuner.HyperparameterTuner(\n",

" estimator=estimator,\n",

" metric_definitions=metric_definitions,\n",

" objective_metric_name=objective_metric_name,\n",

" objective_type=\"Maximize\",\n",

" hyperparameter_ranges=hyperparameter_ranges,\n",

" strategy=search_strategy,\n",

" max_jobs=max_jobs,\n",

" max_parallel_jobs=max_parallel_jobs,\n",

")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" 3.3 - Run HPO "

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"summarize_choices(\n",

" s3_data_input,\n",

" s3_model_output,\n",

" ml_workflow_choice,\n",

" algorithm_choice,\n",

" cv_folds,\n",

" instance_type,\n",

" use_spot_instances_flag,\n",

" search_strategy,\n",

" max_jobs,\n",

" max_parallel_jobs,\n",

" max_duration_of_experiment_seconds,\n",

")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Let's take a moment to confirm our choices before launching all of our HPO experiments. Depending on your configuration options, running this cell can kick off a massive amount of computation!\n",

"> Once this process begins, we recommend that you use the SageMaker UI to keep track of the health of the HPO process and the individual workers."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"tuning_job_name = new_job_name_from_config(\n",

" dataset_directory, region, ml_workflow_choice, algorithm_choice, cv_folds, instance_type\n",

")\n",

"hpo.fit(inputs=s3_data_input, job_name=tuning_job_name, wait=True, logs=\"All\")\n",

"\n",

"hpo.wait() # block until the .fit call above is completed"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" 3.4 - Results and Summary "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Once your job is complete, there are multiple ways to analyze the results.\n",

"Below we display the performance of the best job, as well as print each HPO trial/job as a row of a dataframe."
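Conceptually, picking the best job means keeping completed trials and taking the one with the largest objective value, since the tuner was declared with `objective_type="Maximize"`. The sketch below does this on plain rows shaped like those returned by `HyperparameterTuningJobAnalytics(...).dataframe()` (columns `TrainingJobName`, `TrainingJobStatus`, `FinalObjectiveValue`). The rows are invented for illustration, excluding non-`Completed` trials is an assumption, and the notebook's own `summarize_hpo_results` helper may work differently.

```python
import math
from operator import itemgetter
from typing import List, Optional


def _has_value(value) -> bool:
    """True unless the objective is missing (None) or NaN."""
    return value is not None and not (isinstance(value, float) and math.isnan(value))


def best_completed_job(rows: List[dict], maximize: bool = True) -> Optional[dict]:
    """Pick the completed trial with the best FinalObjectiveValue (hypothetical helper)."""
    completed = [
        row
        for row in rows
        if row.get("TrainingJobStatus") == "Completed" and _has_value(row.get("FinalObjectiveValue"))
    ]
    if not completed:
        return None
    pick = max if maximize else min
    return pick(completed, key=itemgetter("FinalObjectiveValue"))


rows = [
    {"TrainingJobName": "trial-001", "TrainingJobStatus": "Completed", "FinalObjectiveValue": 0.84},
    {"TrainingJobName": "trial-002", "TrainingJobStatus": "Stopped", "FinalObjectiveValue": 0.91},
    {"TrainingJobName": "trial-003", "TrainingJobStatus": "Completed", "FinalObjectiveValue": 0.87},
]
print(best_completed_job(rows)["TrainingJobName"])  # trial-003
```

With the analytics DataFrame, `best_completed_job(df.to_dict("records"))` would apply the same logic.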

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"hpo_results = summarize_hpo_results(tuning_job_name)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"sagemaker.HyperparameterTuningJobAnalytics(tuning_job_name).dataframe()"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" For a more in-depth look at the HPO process, we invite you to check out the HPO_Analyze_TuningJob_Results.ipynb notebook, which shows how we can explore interesting things like the impact of each individual hyperparameter on the performance metric."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" 3.5 - Getting the Best Model "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Next let's download the best trained model from our HPO runs."
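SageMaker writes each training job's model artifact to `<output_path>/<training-job-name>/output/model.tar.gz`, so locating the best model reduces to building that key from `s3_model_output` and the best job's name. The notebook's `download_best_model` helper (defined elsewhere) handles the actual download; the hypothetical function below only sketches the path construction, with a made-up bucket and job name.

```python
from typing import Tuple


def best_model_location(s3_output_path: str, training_job_name: str) -> Tuple[str, str]:
    """Return (bucket, key) of model.tar.gz for a job that wrote to s3_output_path."""
    if not s3_output_path.startswith("s3://"):
        raise ValueError("expected an s3:// URI")
    bucket, _, prefix = s3_output_path[len("s3://"):].partition("/")
    prefix = prefix.strip("/")
    # skip an empty prefix so the key never starts with '/'
    parts = [p for p in (prefix, training_job_name, "output", "model.tar.gz") if p]
    return bucket, "/".join(parts)


print(best_model_location("s3://my-bucket/trained-models/", "trial-003"))
# ('my-bucket', 'trained-models/trial-003/output/model.tar.gz')

# Download with boto3 (requires AWS credentials):
#   import boto3
#   bucket, key = best_model_location(s3_model_output, best_job_name)
#   boto3.client("s3").download_file(bucket, key, "model.tar.gz")
```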

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"local_filename, s3_path_to_best_model = download_best_model(\n",

" model_output_bucket, s3_model_output, hpo_results, best_hpo_model_local_save_directory\n",

")"

]

},
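{

"cell_type": "markdown",

"metadata": {},

"source": [

"SageMaker packages each training job's output as a `model.tar.gz` archive in S3. The internals of `download_best_model` are not shown here, so as a hedged sketch: if you need to unpack such an archive yourself, Python's standard `tarfile` module is enough. The helper `extract_model_archive` and the example paths are hypothetical, and the path check is one simple guard against archive entries that would be written outside the destination directory.\n",

"\n",

"```python\n",

"import os\n",

"import tarfile\n",

"from typing import List\n",

"\n",

"\n",

"def extract_model_archive(archive_path: str, dest_dir: str) -> List[str]:\n",

"    # Hypothetical helper: unpack a model.tar.gz and list the files it contained\n",

"    os.makedirs(dest_dir, exist_ok=True)\n",

"    root = os.path.realpath(dest_dir)\n",

"    with tarfile.open(archive_path, 'r:gz') as tar:\n",

"        members = tar.getmembers()\n",

"        for m in members:\n",

"            # Refuse entries that would land outside dest_dir (path traversal)\n",

"            target = os.path.realpath(os.path.join(root, m.name))\n",

"            if os.path.commonpath([root, target]) != root:\n",

"                raise ValueError('unsafe path in archive: ' + m.name)\n",

"        tar.extractall(root, members=members)\n",

"    return sorted(m.name for m in members if m.isfile())\n",

"\n",

"\n",

"# Example call with hypothetical paths:\n",

"# extract_model_archive('best_model/model.tar.gz', 'best_model/unpacked')\n",

"```\n",

"\n",

"On Python 3.12 and later you could instead pass `filter='data'` to `extractall`, which applies similar safety checks."

]

},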

{

"cell_type": "markdown",

"metadata": {},

"source": [

" 3.6 - Model Serving \n",

"\n",

"With your best model in hand, you can now move on to [serving this model on SageMaker](https://docs.aws.amazon.com/sagemaker/latest/dg/how-it-works-deployment.html). \n",

"\n",

"In the example below, we show how to build a [Predictor](https://sagemaker.readthedocs.io/en/stable/api/inference/predictors.html) (called `RealTimePredictor` before version 2 of the SageMaker Python SDK) using the best model found during the HPO search. We add a lightweight Flask server to our RAPIDS container, which handles incoming requests and passes them to the trained model for inference. If you are curious about how this works under the hood, check out the [Use Your Own Inference Server](https://docs.aws.amazon.com/sagemaker/latest/dg/your-algorithms-inference-code.html) documentation and the code in `code/serve.py`.\n",

"\n",

"If you are interested in additional serving options (e.g., large batch with batch-transform), we plan to add a companion notebook that will provide additional details."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" 3.6.1 - GPU Serving "

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"endpoint_model = sagemaker.model.Model(\n",

" image_uri=ecr_fullname, role=execution_role, model_data=s3_path_to_best_model\n",

")"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# Set to True to deploy a real-time endpoint; it is billed for as long as it is running.\n",

"DEMO_SERVING_FLAG = False\n",

"\n",

"if DEMO_SERVING_FLAG:\n",

"    endpoint_model.deploy(initial_instance_count=1, instance_type=\"ml.p3.2xlarge\")"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"if DEMO_SERVING_FLAG:\n",

"\n",

"    predictor = sagemaker.predictor.Predictor(\n",

"        endpoint_name=str(endpoint_model.endpoint_name), sagemaker_session=session\n",

"    )\n",

"    # Examples to sanity-test the trained model on the Airline dataset:\n",

"    # the first is from a real flight that departed nine minutes early,\n",

"    # the second is from a flight that departed 123 minutes late.\n",

"    if dataset_directory in [\"1_year\", \"3_year\", \"10_year\"]:\n",

"        on_time_example = [\n",

"            2019.0,\n",

"            4.0,\n",

"            12.0,\n",

"            2.0,\n",

"            3647.0,\n",

"            20452.0,\n",

"            30977.0,\n",

"            33244.0,\n",

"            1943.0,\n",

"            -9.0,\n",

"            0.0,\n",

"            75.0,\n",

"            491.0,\n",

"        ]\n",

"        late_example = [\n",

"            2018.0,\n",

"            3.0,\n",

"            9.0,\n",

"            5.0,\n",

"            2279.0,\n",

"            20409.0,\n",

"            30721.0,\n",

"            31703.0,\n",

"            733.0,\n",

"            123.0,\n",

"            1.0,\n",

"            61.0,\n",

"            200.0,\n",

"        ]\n",

"        example_payload = str([on_time_example, late_example])\n",

"    else:\n",

"        example_payload = \"\"  # fill in a sample payload\n",

"\n",

"    print(example_payload)\n",

"    # print explicitly: a value returned inside an if-block is not displayed by Jupyter\n",

"    print(predictor.predict(example_payload))"

]

},
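{

"cell_type": "markdown",

"metadata": {},

"source": [

"The payload above is the `str()` of a list of float rows, which produces text such as `[[2019.0, 4.0, ...], [2018.0, 3.0, ...]]`; for finite floats that text is also valid JSON. Because `code/serve.py` is not reproduced here, the following is a hypothetical sketch of one way to build and safely parse such a payload with the standard `json` module, not a description of what the server actually does. The names `build_payload` and `parse_payload`, and treating 13 (the length of each example row) as the expected feature count, are assumptions.\n",

"\n",

"```python\n",

"import json\n",

"from typing import List\n",

"\n",

"\n",

"def build_payload(rows: List[List[float]]) -> str:\n",

"    # Same text as str(rows) for finite floats, but NaN/inf raise ValueError\n",

"    return json.dumps(rows, allow_nan=False)\n",

"\n",

"\n",

"def parse_payload(body: str, n_features: int) -> List[List[float]]:\n",

"    # Hypothetical server-side parsing: JSON text -> list of float rows\n",

"    rows = json.loads(body)\n",

"    if not isinstance(rows, list) or not all(\n",

"        isinstance(r, list) and len(r) == n_features for r in rows\n",

"    ):\n",

"        raise ValueError('expected a list of rows with %d features each' % n_features)\n",

"    return [[float(x) for x in r] for r in rows]\n",

"```\n",

"\n",

"For the examples above, `build_payload([on_time_example, late_example])` returns the same string as `example_payload`, and `parse_payload(example_payload, 13)` recovers the two rows. Using `json` on both sides avoids `eval` and rejects values that are not valid JSON."

]

},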

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Once you are finished with the serving example, be sure to delete the endpoint so that it stops incurring charges."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"if DEMO_SERVING_FLAG:\n",

"\n",

"    predictor.delete_endpoint()"

]

},

{

"cell_type": "markdown",